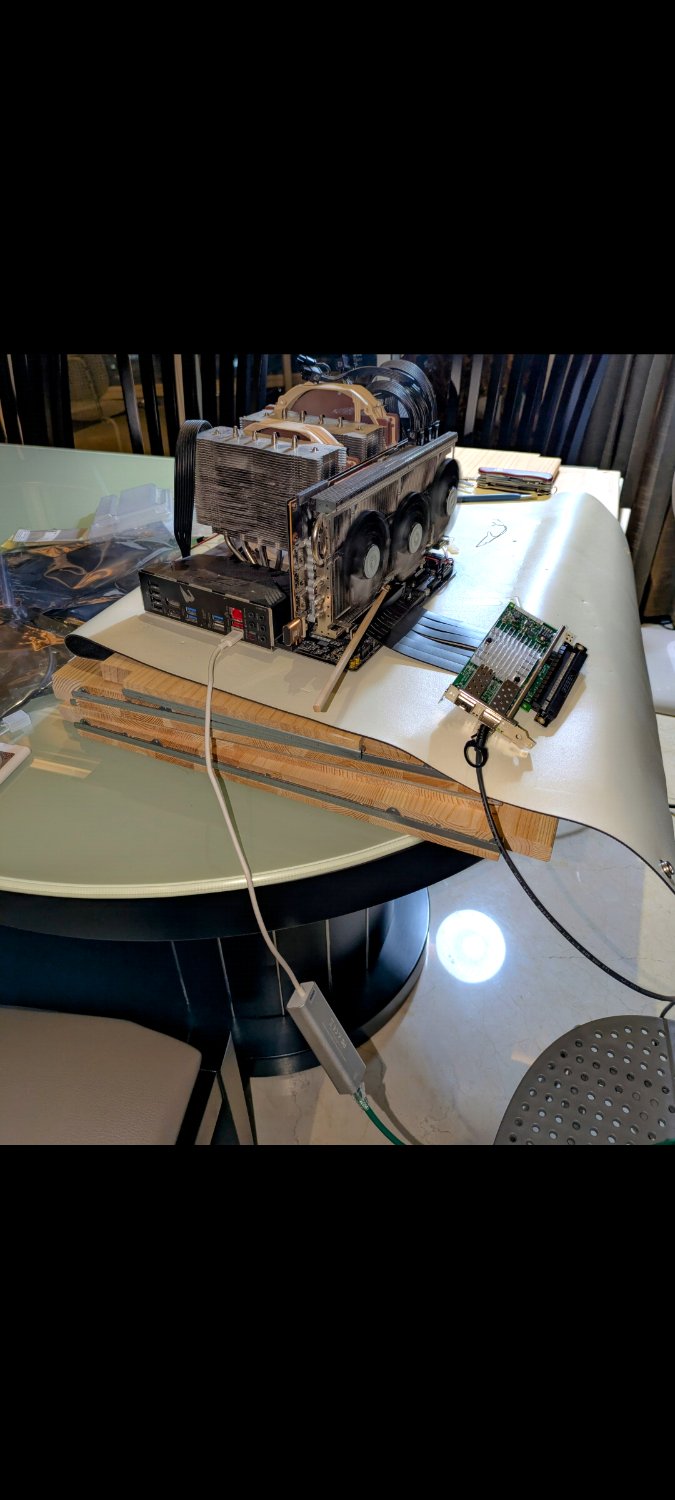

My test setup, with everything on the same IPv4 subnet:

- Windows machine with Intel X520

  - Direct Connect 10Gbps cable

- USW Aggregation switch (10Gbps)

  - Direct Connect 10Gbps cable

- Synology NAS with Intel X520

  - Debian VM

iperf3, Windows to Debian: about 6.8 Gbits/sec

.\iperf3.exe -c 192.168.11.57 --get-server-output

Connecting to host 192.168.11.57, port 5201

[ 5] local 192.168.11.132 port 56855 connected to 192.168.11.57 port 5201

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.01 sec 817 MBytes 6.79 Gbits/sec

[ 5] 1.01-2.00 sec 806 MBytes 6.82 Gbits/sec

[ 5] 2.00-3.01 sec 822 MBytes 6.85 Gbits/sec

[ 5] 3.01-4.00 sec 805 MBytes 6.81 Gbits/sec

[ 5] 4.00-5.01 sec 818 MBytes 6.81 Gbits/sec

[ 5] 5.01-6.00 sec 806 MBytes 6.82 Gbits/sec

[ 5] 6.00-7.01 sec 821 MBytes 6.83 Gbits/sec

[ 5] 7.01-8.00 sec 805 MBytes 6.80 Gbits/sec

[ 5] 8.00-9.01 sec 820 MBytes 6.82 Gbits/sec

[ 5] 9.01-10.00 sec 809 MBytes 6.84 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.00 sec 7.94 GBytes 6.82 Gbits/sec sender

[ 5] 0.00-10.00 sec 7.94 GBytes 6.82 Gbits/sec receiver

Server output:

-----------------------------------------------------------

Server listening on 5201 (test #9)

-----------------------------------------------------------

Accepted connection from 192.168.11.132, port 56854

[ 5] local 192.168.11.57 port 5201 connected to 192.168.11.132 port 56855

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 806 MBytes 6.76 Gbits/sec

[ 5] 1.00-2.00 sec 812 MBytes 6.82 Gbits/sec

[ 5] 2.00-3.00 sec 816 MBytes 6.85 Gbits/sec

[ 5] 3.00-4.00 sec 812 MBytes 6.81 Gbits/sec

[ 5] 4.00-5.00 sec 812 MBytes 6.81 Gbits/sec

[ 5] 5.00-6.00 sec 812 MBytes 6.81 Gbits/sec

[ 5] 6.00-7.00 sec 815 MBytes 6.84 Gbits/sec

[ 5] 7.00-8.00 sec 811 MBytes 6.80 Gbits/sec

[ 5] 8.00-9.00 sec 814 MBytes 6.82 Gbits/sec

[ 5] 9.00-10.00 sec 815 MBytes 6.84 Gbits/sec

[ 5] 10.00-10.00 sec 1.12 MBytes 4.81 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.00 sec 7.94 GBytes 6.82 Gbits/sec receiver

iperf Done.
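As a sanity check on the numbers above, here is a small sketch that recomputes the bitrate from the transfer size and interval. It assumes iperf3 reports transfers in binary units (1 MByte = 1024² bytes) and bitrates in decimal units (1 Gbit = 10⁹ bits); the figures in the output are consistent with that, but treat it as an assumption. The helper name `gbits_per_sec` is made up for this example.

```python
# Assumption: iperf3 prints MBytes/GBytes as powers of 1024
# and Gbits/sec as powers of 1000.
MBYTE = 1024 ** 2
GBYTE = 1024 ** 3


def gbits_per_sec(num_bytes: float, seconds: float) -> float:
    """Convert a byte count over an interval to decimal Gbits/sec."""
    return num_bytes * 8 / seconds / 1e9


# First client interval from the output: 817 MBytes in 0.00-1.01 sec
first_interval = gbits_per_sec(817 * MBYTE, 1.01)

# Summary line from the output: 7.94 GBytes in 0.00-10.00 sec
summary = gbits_per_sec(7.94 * GBYTE, 10.0)

print(f"first interval: {first_interval:.2f} Gbits/sec")
print(f"summary:        {summary:.2f} Gbits/sec")
```

Both values land on what iperf3 printed (6.79 and 6.82 Gbits/sec), so the roughly 6.8 Gbits/sec figure is a steady rate across the whole run rather than an average hiding dips.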

iperf3, Debian to Windows: about 9.5 Gbits/sec

.\iperf3.exe -c 192.168.11.57 --get-server-output -R

Connecting to host 192.168.11.57, port 5201

Reverse mode, remote host 192.168.11.57 is sending

[ 5] local 192.168.11.132 port 56845 connected to 192.168.11.57 port 5201

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.01 sec 1.11 GBytes 9.40 Gbits/sec

[ 5] 1.01-2.00 sec 1.09 GBytes 9.47 Gbits/sec

[ 5] 2.00-3.01 sec 1.11 GBytes 9.47 Gbits/sec

[ 5] 3.01-4.00 sec 1.09 GBytes 9.47 Gbits/sec

[ 5] 4.00-5.01 sec 1.11 GBytes 9.47 Gbits/sec

[ 5] 5.01-6.00 sec 1.09 GBytes 9.47 Gbits/sec

[ 5] 6.00-7.01 sec 1.11 GBytes 9.47 Gbits/sec

[ 5] 7.01-8.00 sec 1.09 GBytes 9.47 Gbits/sec

[ 5] 8.00-9.01 sec 1.11 GBytes 9.47 Gbits/sec

[ 5] 9.01-10.00 sec 1.09 GBytes 9.47 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 11.0 GBytes 9.46 Gbits/sec 0 sender

[ 5] 0.00-10.00 sec 11.0 GBytes 9.46 Gbits/sec receiver

Server output:

-----------------------------------------------------------

Server listening on 5201 (test #7)

-----------------------------------------------------------

Accepted connection from 192.168.11.132, port 56844

[ 5] local 192.168.11.57 port 5201 connected to 192.168.11.132 port 56845

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 1.10 GBytes 9.42 Gbits/sec 0 2.01 MBytes

[ 5] 1.00-2.00 sec 1.10 GBytes 9.47 Gbits/sec 0 2.01 MBytes

[ 5] 2.00-3.00 sec 1.10 GBytes 9.47 Gbits/sec 0 2.01 MBytes

[ 5] 3.00-4.00 sec 1.10 GBytes 9.47 Gbits/sec 0 2.01 MBytes

[ 5] 4.00-5.00 sec 1.10 GBytes 9.47 Gbits/sec 0 2.01 MBytes

[ 5] 5.00-6.00 sec 1.10 GBytes 9.47 Gbits/sec 0 2.01 MBytes

[ 5] 6.00-7.00 sec 1.10 GBytes 9.47 Gbits/sec 0 2.01 MBytes

[ 5] 7.00-8.00 sec 1.10 GBytes 9.47 Gbits/sec 0 2.01 MBytes

[ 5] 8.00-9.00 sec 1.10 GBytes 9.47 Gbits/sec 0 2.01 MBytes

[ 5] 9.00-10.00 sec 1.10 GBytes 9.47 Gbits/sec 0 2.01 MBytes

[ 5] 10.00-10.00 sec 2.50 MBytes 7.72 Gbits/sec 0 2.01 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 11.0 GBytes 9.46 Gbits/sec 0 sender

iperf Done.

I find that rather curious. Is something in the Windows 10 TCP settings limiting the outgoing throughput? Window size, maybe?
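One way to judge whether window size is a plausible culprit is to compare the bandwidth-delay product against the window actually in use. The sketch below is only a rough estimate: the round-trip time `RTT_S` is a hypothetical value I picked for a LAN (it is not measured anywhere in this post, so replace it with a real `ping` result), and the function names are invented for the example. The 2.01 MBytes figure is the Cwnd the Debian sender reported in the reverse test.

```python
def bdp_bytes(bitrate_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep a path of this rate full."""
    return bitrate_bps * rtt_s / 8


def window_limited_bps(window_bytes: float, rtt_s: float) -> float:
    """Upper bound on single-stream TCP throughput for a given window."""
    return window_bytes * 8 / rtt_s


# ASSUMPTION: 0.5 ms round trip on the LAN. Not from the post; measure it.
RTT_S = 0.0005

# Window needed to fill a 10 Gbit/s link at that RTT
needed = bdp_bytes(10e9, RTT_S)
print(f"window needed for 10 Gbit/s: {needed / 1024:.0f} KiB")

# Window that would exactly explain the observed 6.82 Gbit/s
observed = bdp_bytes(6.82e9, RTT_S)
print(f"window matching 6.82 Gbit/s: {observed / 1024:.0f} KiB")

# Ceiling implied by the 2.01 MBytes Cwnd seen on the Debian sender
cwnd = 2.01 * 1024 ** 2
ceiling = window_limited_bps(cwnd, RTT_S)
print(f"ceiling with 2.01 MBytes window: {ceiling / 1e9:.1f} Gbit/s")
```

If the window needed at the real RTT turns out to be much smaller than what Windows is using, window size is probably not the limit. Rerunning the Windows-to-Debian test with iperf3's `-w` (socket buffer size) or `-P` (parallel streams) options would help tell a window limit apart from a per-stream limit.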

Debian MTU

ip link show ens3 | grep -i "mtu"

2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

::: spoiler Windows MTU

netsh interface ipv4 show subinterfaces

MTU MediaSenseState Bytes In Bytes Out Interface

------ --------------- --------- --------- -------------

1500 1 273580016987 64376522487 Ethernet 4