this post was submitted on 03 Sep 2024

706 points (94.2% liked)

196

17053 readers

1127 users here now

Be sure to follow the rule before you head out.

Rule: You must post before you leave.

Other rules

Behavior rules:

- No bigotry (transphobia, racism, etc…)

- No genocide denial

- No support for authoritarian behaviour (incl. Tankies)

- No namecalling

- Accounts from lemmygrad.ml, threads.net, or hexbear.net are held to higher standards

- Other things seen as clearly bad

Posting rules:

- No AI generated content (DALL-E etc…)

- No advertisements

- No gore / violence

- Mutual aid posts require verification from the mods first

NSFW: NSFW content is permitted but it must be tagged and have content warnings. Anything that doesn't adhere to this will be removed. Content warnings should be added like: [penis], [explicit description of sex]. Non-sexualized breasts of any gender are not considered inappropriate and therefore do not need to be blurred/tagged.

If you have any questions, feel free to contact us on our matrix channel or email.


founded 2 years ago


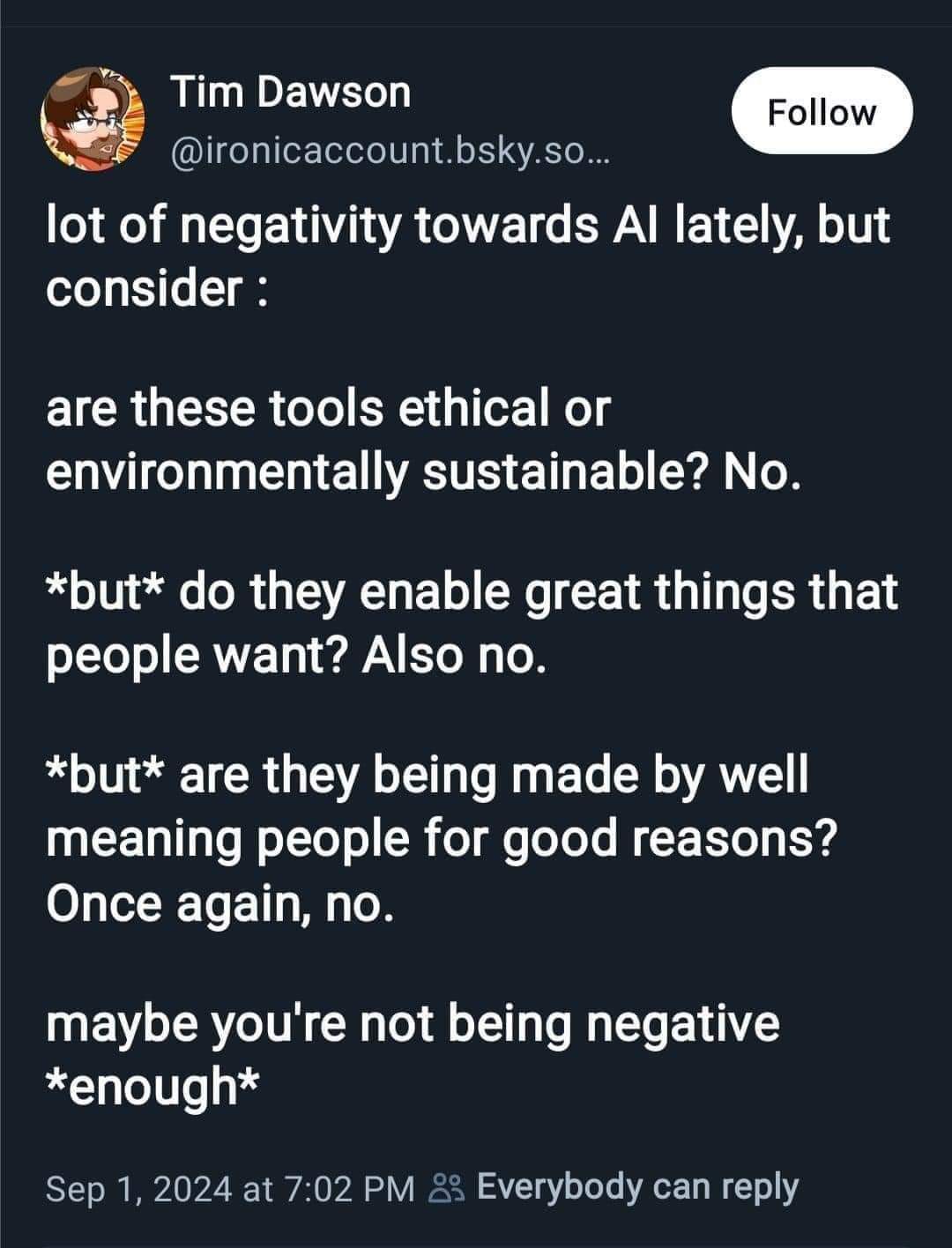

Right here is the problem: many companies like Adobe and Microsoft have made obtrusive AI that I would really rather not interact with, but I don't have a choice at my job. I'd really like to not have to deal with AI chatbots when I need support, or find AI-written articles when I'm looking for a how-to guide, but companies do not offer that as an option.

Capitalists foam at the mouth imagining how they can replace workers, when the reality is that they're creating worse products that are less profitable by not understanding the fundamental limitations of the technology. Hallucinations will always exist because they happen in the biology they're based on. The model generates bullshit that doesn't exist in the same way that our memory generates bullshit that didn't happen. It's filling in the blanks of what it thinks should go there; a system of educated guesswork that just tries to look convincing, not be correct.