this post was submitted on 05 Feb 2025

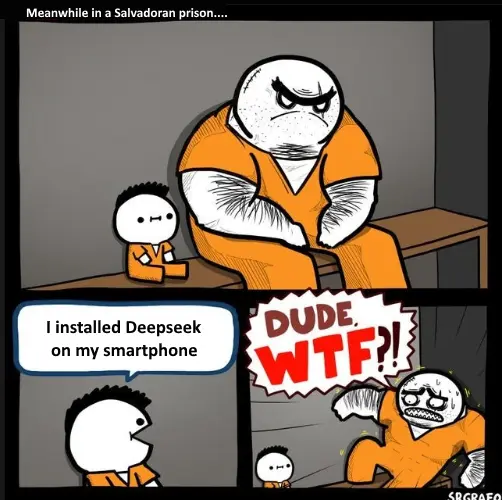

apparently not. it seems they are referring to the official bs deepseek ui for ur phone. running it on your phone fr is super cool! Imma try that out now - with the smol 1.5B model

Good luck! You’ll need it to run it without a GPU…

i kno! i'm already running a smol llama model on the phone, and yeaaaa that's a 2 token per second speed and it makes the phone lag like crazy... but it works!

currently i'm doing this with termux and ollama, but if there's some better foss way to run it, i'd be totally happy to use that instead <3
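For anyone wanting to script the termux + ollama setup mentioned above, here is a minimal sketch in Python. It assumes Ollama is serving on its default local address and that a model tagged `deepseek-r1:1.5b` has already been pulled; the helper names `build_payload`, `tokens_per_second`, and `ask` are made up for illustration. The speed figure is computed from the `eval_count` and `eval_duration` fields that Ollama returns with a non-streaming reply.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model, prompt):
    """Build the JSON body for a single, non-streaming generate request."""
    return {"model": model, "prompt": prompt, "stream": False}


def tokens_per_second(reply):
    """Compute generation speed from an Ollama reply.

    eval_count is the number of generated tokens,
    eval_duration is the generation time in nanoseconds.
    """
    duration_ns = reply.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return reply.get("eval_count", 0) / (duration_ns / 1e9)


def ask(model, prompt, url=OLLAMA_URL):
    """Send one prompt to the local Ollama server and return the parsed reply."""
    data = json.dumps(build_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the server running, `reply = ask("deepseek-r1:1.5b", "say hi")` should return a dict whose `"response"` key holds the text, and `tokens_per_second(reply)` gives a number to compare against the 2 tokens per second mentioned above.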

i think termux is probably already the best way to go, it ensures linux-like flexibility, i guess. but yeah, properly wiring it up, with a nice Graphical User Interface, would be nice, i guess.

Edit: now that i think about it, i guess running it on some server that you rent, is maybe better, because then you can access that chat log from everywhere, and also, it doesn't drain your battery so much. But then, you need to rent a server, so, idk.

Edit again: Actually, somebody should hook up the DeepSeek chatbot to Matrix chat, so you can message it directly through your favorite messaging protocol/app.

edit again: (one hour later) i tried setting up deepseek 8b model on my rented server, but it doesn't have enough RAM. i tried adding swap space, but it doesn't let me. I figured out that you can't easily add swap space in a container. somehow, there seems to be a reason to that. too tired to explore further. whatever.
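A rough back-of-the-envelope check for the RAM problem described above, sketched in Python. The numbers are assumptions, not measurements: it assumes the weights are quantized to 4 bits each and adds a guessed 20% on top for context and runtime overhead. The function names are hypothetical, and the thread does not say how much RAM the rented server actually had.

```python
def estimate_model_ram_gb(params_billions, bits_per_weight=4, overhead=0.2):
    """Estimate RAM needed to load a model, in GB (1 GB = 1e9 bytes).

    Assumptions: every weight takes bits_per_weight bits, and overhead
    is a guessed fraction added for context and runtime buffers.
    """
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead) / 1e9


def fits_in_ram(params_billions, ram_gb, bits_per_weight=4, overhead=0.2):
    """Return True if the estimated footprint fits in ram_gb of memory."""
    needed = estimate_model_ram_gb(params_billions, bits_per_weight, overhead)
    return needed <= ram_gb
```

Under these assumptions an 8B model comes out at roughly 5 GB, so `fits_in_ram(8, 8)` is true while `fits_in_ram(8, 2)` is not, which lines up with the 8GB server mentioned further down the thread.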

big sad :(

wish it would be nice and easi to do stuff like this - yea hosting it somewhere is probably best for ur moni and phone.

actually i think it kinda is nice and easy to do, i'm just too lazy/cheap to rent a server with 8GB of RAM, even though it would only cost $15/month or sth.

it would also be super slow, u usually want a GPU for LLM inference.. but u already know this, u are Gandald der zwölfte after all <3

woag you speak german (gasp)

where are you from?

eh, Rheinland Pfalz, near Mainz. how bout u? <3

o.O you're kidding me right now ("du verarscht mich"), right? yet another cutie who comes from germany. I live in Vienna ;-)

heyyyyyy... maybe don use this aggressive word... maybemaybs... - i jus don like it for som reason...

also, nuuuuu don just say ~cutie~ like that!!! >~< That's like - im used to reading it in English but not in ~german...~

also also, who else did u find here who u would call ~that~ ~word~ an who is also from the grrrmn land?

"silberzunge" miss brainfart, e.g.

aren't you in mia's group chat anyway? then you know her

yeah yeah i know miss brainfart, i still remember... when she was on lemmy...

those were the days <3