Hackaday

Fresh hacks every day

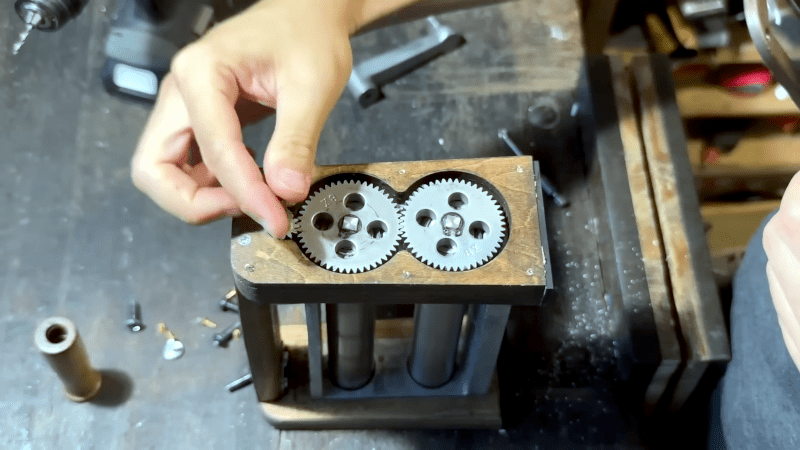

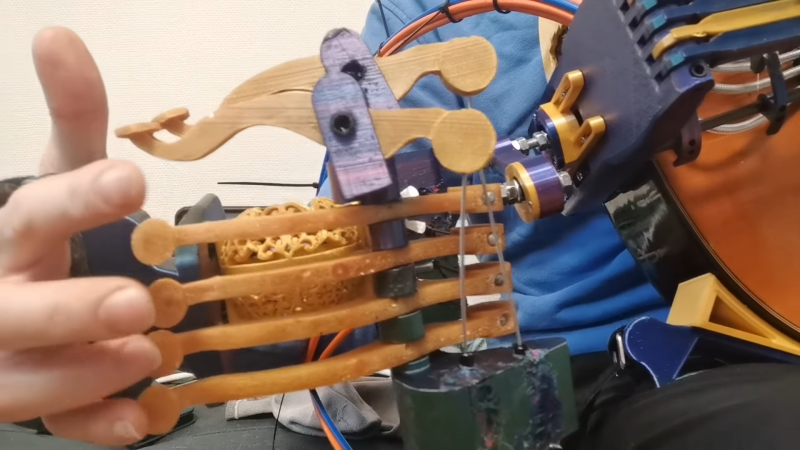

[Kevin Cheung] likes to upcycle old soda cans into — well — things. The metal is thin enough to cut by hand, but he’d started using a manual die-cutting machine, and it worked well. The problem? The machine was big and heavy, weighing well over 30 pounds. The solution was to get a lightweight die cutter. It worked better than expected, but [Kevin] really wanted it to be more portable, so he stripped it down and built the mechanism into a new case.

The video below isn’t quite a “how-to” video, but if you like watching someone handcraft something with a lot of skill, you’ll enjoy it. It also might give you ideas about how you could use one of these cutters, even if you don’t bother building a nice case for it.

We’ve seen computer-controlled cutters that make the same kind of cuts, but they aren’t cheap. These manual die cutters, on the other hand, are very inexpensive; you simply have to find a way to make the die. You can easily make dies for cutting paper, and, with the right materials, you can make the kind you see in [Kevin]’s video, too.

We have to admit, carrying the gizmo into a public place seemed to make a lot of people happy. So maybe portability is a good goal. But either way, you can have some fun with a machine like that.

If you want to cut paper, these work great. If you want paper to make the cuts, we have just the thing for you.

From Blog – Hackaday via this RSS feed

Display technology has come a long way since the advent of the CRT in the late 1800s (yes, really!). Since then, we’ve enjoyed Nixie tubes, flip dots, gas plasma, LCD, LED, ePaper, and the list goes on. Now, there’s a new kid on the block: water.

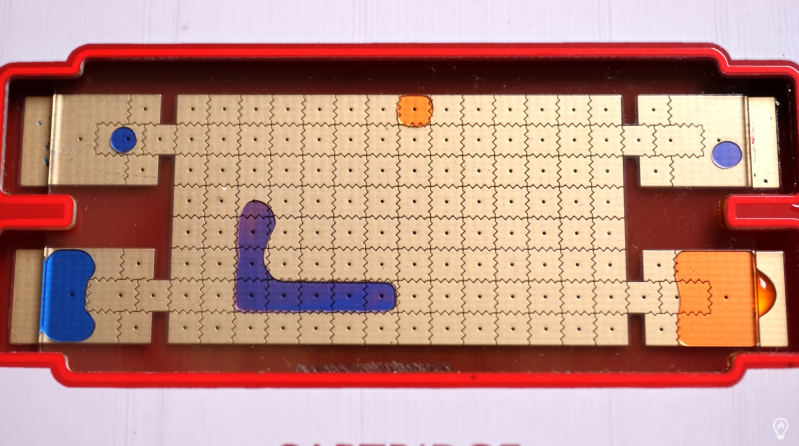

[Steve Mould] recently got his hands on an OpenDrop, an open-source digital microfluidics platform for biology research. It’s essentially a grid of electrodes coated in a dielectric. Water sits atop this insulating layer, and because water molecules are polar, droplets can be moved around the grid by voltages applied to the electrodes. The original intent was to automate experiments (see 8:19 in the video below for some wild examples), but [Steve] had far more important uses in mind.

When [Steve]’s €1,000 device shipped from Switzerland, it was destined for greatness: it became a game console for classics such as Pac-Man, Frogger, and, of course, Snake. With help from the OpenDrop’s inventor (and Copilot), he built pared-down versions of the games that could run on the 8×14 “pixel” grid. Pac-Man proved particularly difficult: thanks to conservation of mass, Pac-Man grew whenever he ate a ghost and eventually became unwieldy. Fortunately, Snake is one of the few video games that actually respects the laws of classical mechanics, as the snake grows by one unit each time it consumes food.

[Steve] has also issued a challenge — if you code up another game, he’ll run it on his OpenDrop. He’s even offering a prize for the first working Tetris implementation, so be sure to check out his source code linked in the video description as a starting point. We’ve seen Tetris on oscilloscopes and 3D LED matrices before, so it’s about time we get a watery implementation.

Thanks to [deʃhipu] for the tip!


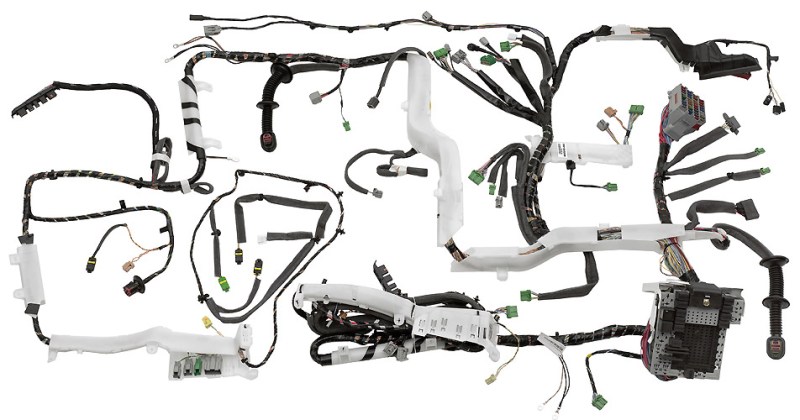

There are many ways to learn, but few, if any, compare to standing over the shoulder of a master of the craft. This awesome page sent in by [JohnU] is a fantastic corner of the internet that lets us all peek over that shoulder at someone who has not only spent decades learning the art of creating cable harnesses, but has also taken the time to distill some of that vast experience for the rest of us.

This page is focused on custom automotive and motorcycle modifications, but it’s jam-packed with things applicable in many other areas. It points out that automotive wiring is often taken for granted, but it shouldn’t be: there are hundreds of circuits, all of which need to work for your car to run in hot and cold, wet and dry. Wiring reliability is crucial not just for your car, but also for much larger things, such as the 530 km (330 mi) of wiring inside an Airbus A380, a plane that, while large, is still well under 100 m long.

This page doesn’t just talk about cable harnessing in the abstract; in fact, the overwhelming majority of it revolves around the practical and applicable. There is a deep dive into wire selection, tubing and sealing selection, epoxy to stop corrosion, and more. It covers many of the most common connectors used in vehicles, as well as connectors rarely used in the automotive industry that share many of the same qualities. There are some real hidden gems in the 20,000+ word compendium, such as thermocouple wiring and some budget environmental sealing options.

There is far more to making a thing than selecting the right parts; how it’s assembled and the tools used are just as important. This page touches on tooling, technique, and planning for a wire harness build-up. While some highly specialized tools are identified, there are also things such as repurposed knitting needles. Even a fully assembled harness isn’t complete until it has been tested, and testing is covered here too.

Thanks to [JohnU] for sending in this incredible learning resource. If this has captured your attention like it has ours, be sure to check out some of the other wire harness tips we’ve featured!


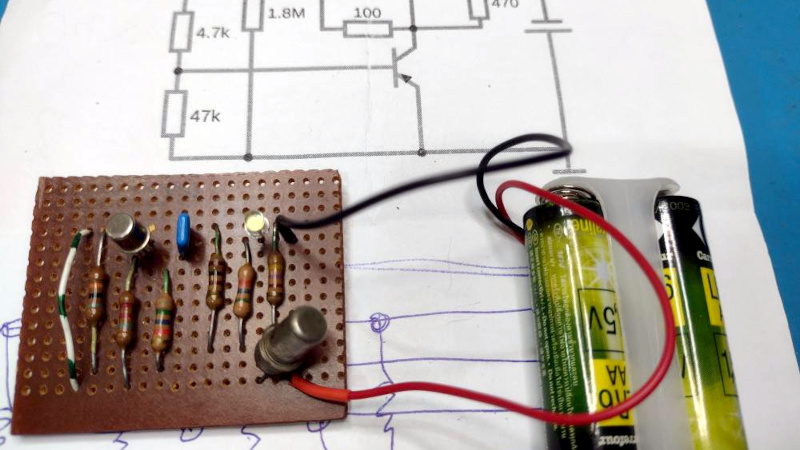

If you’ve worked with germanium transistors, you’ll know that many of them have a disappointingly low maximum frequency of operation. This has more to do with some of the popular ones dating from the earliest years of the transistor age than with germanium being inherently a low-frequency semiconductor, but it’s fair to say you won’t be using an OC71 in a high-frequency RF application. [Ken Yap]’s project is clearly taking no chances, though, because he’s using a vintage germanium transistor at a very low frequency: 1 Hz, to be exact.

The circuit is a simple phase shift oscillator that flashes a white LED: a two-transistor amplifier feeds back on itself through an RC phase shift network. The germanium part is a CV7001, while the other transistor is a silicon BC109, more modern but still pretty old these days. Because of the low frequency of operation, the phase shift network uses a higher-value resistor than you might expect, at 1.8 MΩ. Power comes from a pair of AA cells.
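For a sense of why 1 Hz pushes the resistor into megohm territory, here’s a rough sketch using the textbook formula for a phase shift network built from three identical high-pass RC sections (series C, shunt R), f = 1/(2π√6·RC). That topology is our assumption, not a reading of [Ken Yap]’s schematic, and transistor loading will move the real frequency around; the point is only that frequency scales as 1/RC, so a slow blink needs a big R, a big C, or both. The function names are made up for illustration.

```cpp
#include <cassert>
#include <cmath>

// Assumption: three identical high-pass RC sections (series C, shunt R),
// for which the textbook oscillation frequency is f = 1 / (2*pi*sqrt(6)*R*C).
const double kPi = 3.14159265358979323846;

// Estimated oscillation frequency in hertz, with R in ohms and C in farads.
double phaseShiftFrequency(double r, double c) {
    assert(r > 0 && c > 0);
    return 1.0 / (2.0 * kPi * std::sqrt(6.0) * r * c);
}

// The same formula solved for the capacitor needed to hit a target frequency.
double capacitorFor(double targetHz, double r) {
    assert(targetHz > 0 && r > 0);
    return 1.0 / (2.0 * kPi * std::sqrt(6.0) * r * targetHz);
}
```

With R = 1.8 MΩ, capacitorFor(1.0, 1.8e6) comes out at roughly 36 nF, a perfectly ordinary value, which is presumably the appeal of going high on the resistor.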

We like this project not least for its use of period-correct passive components and stripboard to accompany the vintage semiconductors. Perhaps it won’t meet atomic standards for timing, but that’s hardly the point.

This project is an entry in the 2025 One Hertz Challenge. Why not enter your own second-accurate project?


One of the tropes of the space race back in the 1960s, which helped justify the spending to the part of the public who thought it wasn’t worth it, was that technology developed for use in space would help us out back here on Earth. The same goes for the astronomical expenses in Formula 1, or even for more pedestrian tech like racing bikes or cinematography cameras. The idea is that the boundaries pushed in the most extreme situations can nonetheless teach us something applicable to everyday life.

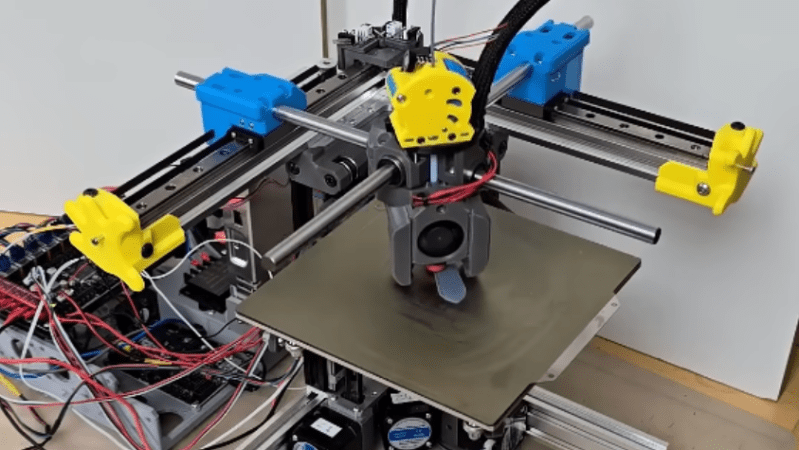

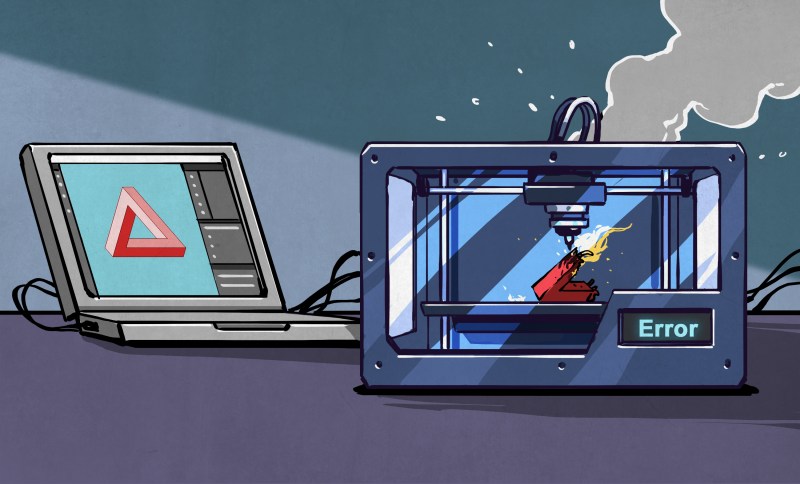

This week, we saw another update from the Minuteman project, which by itself is entirely ridiculous: a 3D printer that aims to print a 3D Benchy in a minute or less. Of course, the Minuteman isn’t alone in this absurd goal: there’s an entire 3D printer enthusiast community that is pushing the speed boundaries of this particular benchmark print, and times below five minutes are competitive these days, although with admittedly varying quality. (For reference, on my printer, a decent-looking Benchy takes about half an hour, but I’m after high quality rather than high speed.)

One could totally be forgiven for scoffing at the Speed Benchy goal in general, the Minuteman, or even The 100, another machine that trades off print volume for extreme speed. But there is definitely trickle-down for the normal printers among us. After all, pressure advance used to be an exotic feature that only people with high-end homemade rigs cared about, and now it’s gone mainstream. Who knows whether the Minuteman’s variable temperature or rate smoothing, or the rigid and damped frames of The 100 or its successor The 250, will end up making normal printers better?

So here’s to the oddball machines, that push boundaries in possibly ridiculous directions, but then share their learnings with those of us who only need to print kinda-fast, but who like to print other things than little plastic boats that don’t even really float. At least in the open-source hardware community, trickle-down is very real.

This article is part of the Hackaday.com newsletter, delivered every seven days for each of the last 200+ weeks. It also includes our favorite articles from the last seven days that you can see on the web version of the newsletter. Want this type of article to hit your inbox every Friday morning? You should sign up!


Sometimes, all you need to make something work is to come at it from a different angle from anyone else — flip the problem on its head, so to speak. That’s what [Keizo Ishibashi] did to create his Cantareel, a modified guitar that actually sounds like a hurdy-gurdy.

We wrote recently about a maker’s quest to create just such a hybrid instrument, and why it ended in failure: pressing strings onto the fretboard also pushed them tighter to the wheel, ruining the all-important tension. To recap, the spinning wheel of a hurdy-gurdy excites the strings exactly like a violin bow, and like a violin bow, the pressure has to be just right. There’s no evidence [Keizo Ishibashi] was aware of that work, but he solved the problem regardless, simply by thinking outside the box — the soundbox, that is.

Unlike a hurdy-gurdy, the Cantareel keeps its wheel outside the soundbox. The wheel also does not rub directly upon the strings: instead, it turns what appears to be a pair of O-rings. Each rosined O-ring bows two of the guitar’s strings, giving four strings a’ singing. (Five golden rings can only be assumed.) The outer two strings of this ex-six-string are used to hold the wheel assembly in place by feeding through holes on the mounting arms. The guitar is otherwise unmodified, making this hack reversible.

It differs from the classic hurdy-gurdy in one particular: on the Cantareel, every string is a drone string. There’s no way to keep the rubber rings from rubbing against the strings, so all four are always singing. This may just be the price you pay to get that smooth gurdy sound out of a guitar form factor. We’re not even sure it’s right to call it a price when it sounds this good.

Thanks to [Petitefromage] for the tip. If you run into any wild and wonderful instruments, don’t forget to let us know.


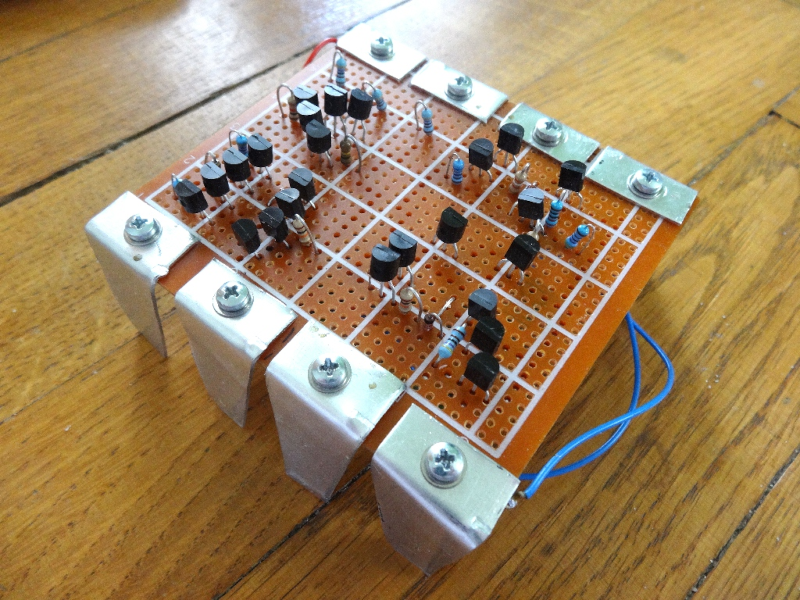

Few electronic ICs can claim to be as famous as the 555 timer. Maybe part of the reason is that the IC doesn’t have a specific function. It has a lot of building blocks that you can use to create timers and many other kinds of circuits. Now [Stoppi] has decided to make a 555 out of discrete components. The resulting IC, as you can see in the video below, won’t win any prizes for diminutive size. But it is fun to see all the circuitry laid bare at the macro level.

The reality is that the chip doesn’t have much inside: a transistor to discharge the external capacitor, a resistor divider that sets the comparator thresholds, two comparators, and an RS flip-flop. The hundreds of circuits you can build with it all come down to how those blocks are wired together along with a few external components.
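Since the whole chip boils down to a few blocks, a small behavioral model can show how they cooperate in the classic astable circuit. This is a hypothetical sketch, not [Stoppi]’s schematic: the thresholds at 1/3 and 2/3 of the supply, a flip-flop where the trigger input wins, and simple Euler integration of the timing capacitor are our simplifications. The period it measures should land close to the usual 0.693·(R1 + 2·R2)·C rule of thumb.

```cpp
#include <cassert>

// Hypothetical behavioral model of the 555's core blocks: a divider giving
// 1/3 and 2/3 Vcc thresholds, two comparators, an SR flip-flop, and a
// discharge transistor.
class Timer555 {
public:
    explicit Timer555(double vcc) : vcc_(vcc) {}

    // Feed the capacitor voltage (wired to both TRIG and THRES in astable mode).
    void update(double vcap) {
        bool set = vcap < vcc_ / 3.0;          // trigger comparator
        bool reset = vcap > 2.0 * vcc_ / 3.0;  // threshold comparator
        if (set) q_ = true;                    // trigger takes priority
        else if (reset) q_ = false;
    }
    bool outputHigh() const { return q_; }
    bool discharging() const { return !q_; }  // transistor conducts when output is low

private:
    double vcc_;
    bool q_ = false;
};

// Step an astable circuit (R1 from Vcc to DIS, R2 from DIS to the capacitor)
// and return the measured period in seconds.
double astablePeriod(double r1, double r2, double c, double vcc = 9.0) {
    assert(r1 > 0 && r2 > 0 && c > 0);
    Timer555 chip(vcc);
    const double dt = r2 * c / 1e4;   // small compared with both RC time constants
    double v = 0.0, t = 0.0, secondEdge = 0.0;
    bool wasHigh = false;
    int risingEdges = 0;
    while (true) {
        chip.update(v);
        if (chip.outputHigh() && !wasHigh) {
            ++risingEdges;
            if (risingEdges == 2) secondEdge = t;       // skip the longer first cycle
            if (risingEdges == 3) return t - secondEdge;
        }
        wasHigh = chip.outputHigh();
        // Charge through R1 + R2, or discharge through R2 into the DIS pin.
        double dvdt = chip.discharging() ? -v / (r2 * c)
                                         : (vcc - v) / ((r1 + r2) * c);
        v += dvdt * dt;
        t += dt;
    }
}
```

For example, astablePeriod(1e3, 10e3, 10e-6) should come out around 0.146 s, matching the formula to well within a percent.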

On [Stoppi]’s page, you can find how to wire the device as a monostable or astable, or to generate tones. You can also find several time-delay circuits. It’s a versatile chip, now blown up as big as you are likely to ever need it.

Practical? Probably not, unless you need a 555 with some kind of custom modification. But for understanding the 555, there’s not much like it.

We’ve seen macro 555s before. It is amazing how many things you can do with a 555. Seriously.


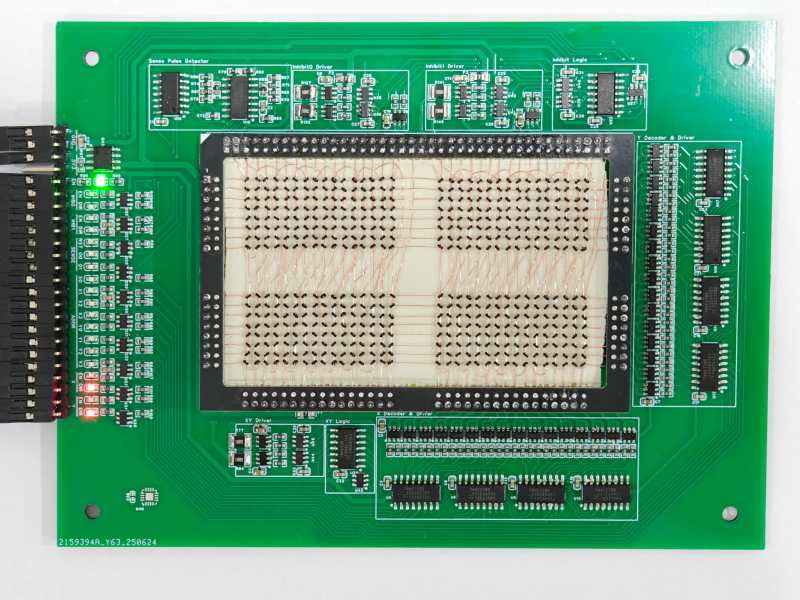

Magnetic core memory was the RAM at the heart of many computer systems through the 1970s, and it is undergoing something of a resurgence today, since it is the easiest form of memory for an enterprising hacker to DIY. [Han] has an excellent writeup that goes deep into the best practices for wiring up core memory, which pairs with his 512-bit MagneticCoreMemoryController on GitHub.

Magnetic core memory works by storing data in the magnetic flux of a ferrite ‘core’. Magnetize it in one direction and you have a 1; the other direction is a 0. Sensing is current-based and erases the existing value, so every read needs a rewrite circuit. Want the gory details? Check out [Han]’s writeup; he explains it better than we can, complete with how to wire the ferrites and oscilloscope traces showing why you want to wire them that way. It may be the most complete design brief on magnetic core memory written this decade.
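The destructive read is the part that trips people up, so here’s a deliberately simplified model of it. It ignores the X/Y coincident-current selection, the inhibit wire, and the analog sense amplifier entirely (assumptions for brevity) and just captures the logic: reading drives a core to 0, only a core that held a 1 flips and produces a sense pulse, and the controller has to write that 1 back. The class and method names are hypothetical, not taken from [Han]’s controller.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical, heavily simplified model of a core plane. Each core stores one
// bit as a magnetization direction; drive lines, inhibit wire and sense
// amplifier are abstracted away.
class CorePlane {
public:
    static constexpr std::size_t kBits = 512;   // same size as [Han]'s module

    // Drive the core toward 0. Only a core that held a 1 flips, inducing a
    // pulse on the sense wire, so this returns the old bit but erases it.
    bool senseAndClear(std::size_t addr) {
        assert(addr < kBits);
        bool pulse = cores_[addr];
        cores_[addr] = false;
        return pulse;
    }

    void write(std::size_t addr, bool bit) {
        assert(addr < kBits);
        cores_[addr] = bit;
    }

    // What a controller actually does: a read cycle followed by a write-back.
    bool read(std::size_t addr) {
        bool bit = senseAndClear(addr);
        if (bit) write(addr, true);   // restore the 1 the read just destroyed
        return bit;
    }

private:
    std::array<bool, kBits> cores_{};   // all cores start out holding 0
};
```

Calling read() twice on the same address returns the same bit, while calling senseAndClear() twice shows the second read coming back empty.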

The little memory pack [Han] built with this information is rock-solid: it ran for 24 hours straight through multiple continuous memory tests, several gigabytes of data in total, with zero errors. That was always the strength of ferrite memory, along with the fact that you can lose power and keep your data. In the retrocomputer world, 512 bits doesn’t seem like much, but it’s enough to play with. We’ve even featured smaller magnetic core modules, like the Core 64. (No prize if you guess how many bits that is.) One could be excused for considering them toys; in the old days, you’d have had cabinets full of these sorts of hand-wound memory cards.

Magnetic core memory should not be confused with core-rope memory, which was a ROM solution of similar vintage. The legendary Apollo Guidance Computer used both.

We’d love to see a hack that makes real use of this pre-modern memory modality. If you know of one, send in a tip.


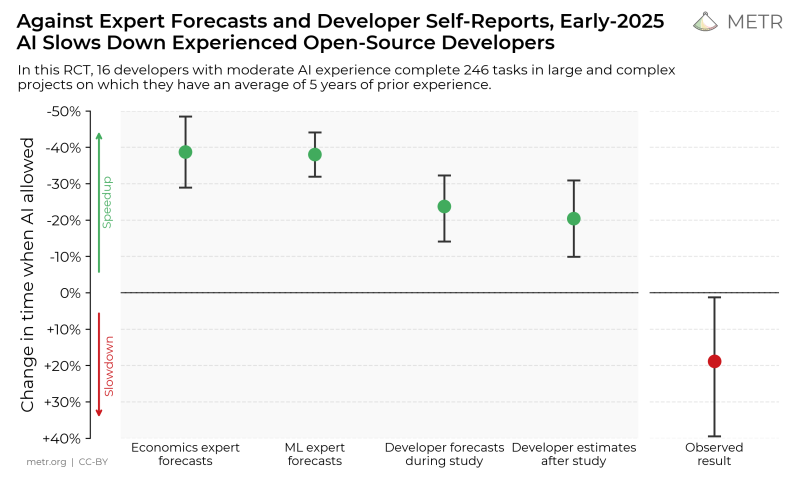

Recently, AI risk and benefit evaluation company METR ran a randomized controlled trial (RCT) on a gaggle of experienced open source developers to gain objective data on how using LLMs affects their productivity. They found that using LLM-based tools like Cursor Pro with Claude 3.5/3.7 Sonnet reduced productivity by about 19%; the full study by [Joel Becker] et al. is available as a PDF.

The study was also intended to establish a methodology for assessing the impact of introducing LLM-based tools into software development. In the RCT, 16 experienced open source software developers were given 246 tasks, after which their effective performance was evaluated.

A large focus of the methodology was on creating realistic scenarios instead of using canned benchmarks. Tasks included adding features, fixing bugs, and refactoring, much as the developers would do when working on their own open source projects. The longer completion times with LLM assistance were attributed to a range of likely factors, including over-optimism about the LLM tools’ capabilities, LLMs interfering with existing knowledge of the codebase, poor LLM performance on large codebases, low reliability of the generated code, and the LLMs doing very poorly at using tacit knowledge and context.

Although METR suggests that this poor showing may improve over time, it seems fair to question whether LLM coding tools are a useful coding partner at all.


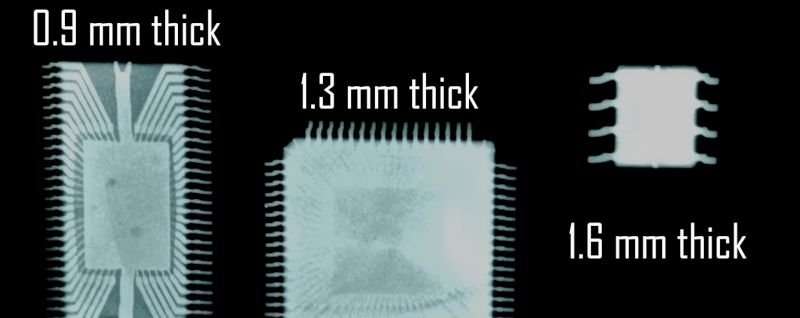

Who doesn’t want an X-ray machine? But you need a special tube and super high voltage, right? [Project 326] says no, and produces a USB-powered device that uses a tube you can pick up two for a dollar. You might guess the machine doesn’t generate X-rays with a lot of energy, and you’d be right. But you can make up for it with long exposure times. Check out the video below, with host [Posh Arthur].

The video admits there are limitations, of course. We were somewhat sad that [Project 326] elected not to share the exact parts list and 3D-printed files, though we understand why: in the unlikely event someone managed to hurt themselves with it, there could be a hysterical reaction. Then again, if you are smart enough to handle this, you’ll be smart enough to figure out how to duplicate it; it doesn’t look that hard, and there are plenty of not-so-subtle clues in the video.

The video points out that you can buy a used X-ray tube for about $100, but then you need a 70 kV power supply. A 1Z11 vacuum diode has the same basic internal structure but isn’t optimized for the purpose. Still, it emits X-rays as a natural byproduct of its operation, especially when its filament is heated.

The high-voltage supply needs to deliver at least 1 mA at about 20 kV. Part of the problem is that with such low X-ray emission, you need long exposure times, so the power supply must be able to operate for an extended period. We wondered if you could reduce the duty cycle, which would make the exposure time even longer but should be easier on the power supply.
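The numbers here are easy to play with: 20 kV at 1 mA is 20 W of anode power, and pulsing the tube trades wall-clock time for a lighter average load. Here’s a rough sketch, assuming the film responds only to total beam-on time (real film isn’t quite that linear); the helper names are our own invention.

```cpp
#include <cassert>

// Anode power in watts: kilovolts times milliamps conveniently gives watts.
double anodeWatts(double kilovolts, double milliamps) {
    return kilovolts * milliamps;
}

// Assumption: exposure depends only on total beam-on time, so pulsing the
// tube at a given duty cycle stretches the wall-clock time by 1 / duty.
double wallClockMinutes(double beamOnMinutes, double dutyCycle) {
    assert(dutyCycle > 0.0 && dutyCycle <= 1.0);
    return beamOnMinutes / dutyCycle;
}

// Average load on the high-voltage supply when the tube is pulsed.
double averageWatts(double peakWatts, double dutyCycle) {
    assert(dutyCycle > 0.0 && dutyCycle <= 1.0);
    return peakWatts * dutyCycle;
}
```

So a 50-minute exposure run at a 50% duty cycle would take about 100 minutes, with the supply averaging around 10 W instead of 20 W.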

The device features a wired remote, allowing for a slight distance between the user and the hot tube. Power comes from USB-C Power Delivery (PD), which can negotiate a higher voltage than the standard 5 V. In this case, the project uses 20 V, which feeds two DC-DC converters: one supplies the high-voltage anode and the other drives the filament.

To get the image, he’s using self-developing X-ray film made for dental use. It is relatively sensitive and inexpensive (about a dollar a shot). There are also some lead blocks to reduce stray X-ray emission. Many commercial machines are completely enclosed and we think you could do that with this one, if you wanted to.

You need something that will lie flat on the film. How long did it take? A leaf image needed a 50-minute exposure. Some small ICs took 16 hours! Good thing the film is cheap because you have to experiment to get the exposure correct.

This really makes us want to puzzle out the design and build one, too. If you do, please be careful. This project has a lot to not recommend it: high voltage, X-rays, and lead. If you laugh at danger and want a proper machine, you can build one of those, too.


Over on YouTube, [Technology Connections] has a new video: Induction lamps: fluorescent lighting’s final form.

This video is about an electrodeless fluorescent lamp, which uses induction to transfer power into the gas inside the tube. Because the lamp has no electrodes, it isn’t prone to the “sputtering” that typical fluorescent lights suffer from, which improves its working life by an order of magnitude. As explained in the video, sputtering is the process by which the electrodes in a typical fluorescent lamp lose material over time until they can no longer emit electrons at all.

This particular lamp has a power rating of 200 W and a light output of 16,000 lumens, or 80 lm/W, which is quite good. But the truly remarkable thing about this type of lighting is its service life. As the lamp is simply a phosphor-coated tube filled with argon gas and a pellet of mercury amalgam, it has a theoretically unlimited lifespan. Or let’s call it 23 years.

Given that the service life is so good, why don’t we see induction lamps everywhere? The answer is that the electronics to support them are very expensive, and these days LED lighting has trounced every lighting technology that we’ve ever made in terms of energy efficiency, quality of light, and so on. So induction lamps are obsolete before they ever had their day. Still pretty interesting technology though!

Thanks to [Keith Olson] for writing in about this one.


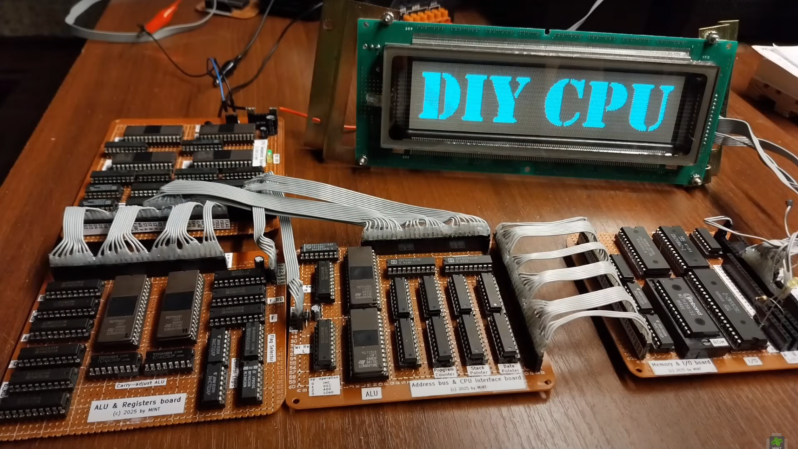

Building a simple 8-bit computer is a great way to understand computing fundamentals, but there’s only so much you can learn by building a system around an existing processor. If you want to learn more, you’ll have to go further and build the CPU yourself, as [MINT] demonstrated with his EPROMINT project (video in Polish, but with English subtitles).

The CPU project started when [MINT] began experimenting with uses for his collection of old memory chips and quickly realized that they could do quite a bit more than store data. After building a development board for single-chip programmable logic, he decided to build a full CPU out of (E)EPROMs. The resulting circuit spans four large pieces of perfboard, weighs over half a kilogram, and took several weeks of soldering to create.
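The trick of using memory as logic is simple at heart: the address lines are the inputs, the data lines are the outputs, and whatever you burn into the chip is the truth table. Here’s a minimal sketch of that idea, filling a hypothetical 256-byte ROM image with a 4-bit adder. It illustrates the general technique and isn’t how the EPROMINT’s ALU is actually laid out.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

using Rom256 = std::array<std::uint8_t, 256>;   // e.g. 256 bytes of an EPROM

// "Program" the ROM: address bits 0-3 are operand A, bits 4-7 are operand B,
// and each stored byte is A + B (a 4-bit sum plus the carry in bit 4).
Rom256 burnNibbleAdder() {
    Rom256 rom{};
    for (unsigned addr = 0; addr < rom.size(); ++addr) {
        unsigned a = addr & 0x0Fu;
        unsigned b = addr >> 4;
        rom[addr] = static_cast<std::uint8_t>(a + b);
    }
    return rom;
}

// "Evaluating" the logic is just a memory read: drive the address, read the data.
std::uint8_t romAdd(const Rom256& rom, unsigned a, unsigned b) {
    unsigned addr = ((b & 0x0Fu) << 4) | (a & 0x0Fu);
    return rom[addr];
}
```

Any other function of the same eight input bits (a comparison, a shift, a lookup table for something nastier) costs exactly the same: one table, one read.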

The star of the system is the ALU, which runs an instruction set inspired by the Z80, but with some optimizations and added features. In particular, it has new operations for multiplication, division, bitstream operations, more advanced bit shifting, and a wide range of mathematical functions, including exponents, roots, and trigonometric functions. [MINT] documented all of this in a nicely-formatted offline booklet, available under the project’s GitHub repository. It’s currently only possible to program for the CPU using opcodes or a custom flavor of assembly, but there are plans to write a C compiler for it.

Even without being able to write in a higher-level language than assembly, [MINT] was able to drive a VFD screen with the EPROMINT, which he used to display some clips from The Matrix. This provided an opportunity to demonstrate basic debugging methods, which involved dumping and analyzing the memory contents after a failed program execution.

Using memory chips as programmable logic gates is an interesting hack, and we’ve seen Lisp programs written to make this easier. Of course, this isn’t the first CPU we’ve seen built without any chips intended for logic operations.

Thanks to [Piotr] for the tip!


[Zac] of Zac Builds has a shameful secret: he, a fully grown man, plays video games. Shocking, we know, but such people do exist in our society. After being rightfully laughed out of the family living room, [Zac] relocated his indecent activities to his office, but he knew that was not enough. Someone might enter, might see his secret shame: his PlayStation 5. He decided the only solution was to tear the game console apart, and rebuild it inside of his desk.

All sarcasm aside, it’s hard to argue that [Zac]’s handmade wooden desk doesn’t look better than the stock PS5, even if you’re not one of the people who disliked Sony’s styling this generation. The desk also contains his PC, a project we seem to have somehow missed; the two machines live in adjacent drawers.

While aesthetics are a big motivator behind this case mod, [Zac] also takes the time to improve on Sony’s work: the noisy stock fan is replaced by three silent-running Noctua case fans; the easy-to-confuse power and eject buttons are relocated and differentiated; and the Blu-ray drive gets a proper affordance so he’ll never miss the slot again. An NVMe SSD finishes off the upgrades.

Aside from the woodworking to create the drawer, this project relies mostly on 3D printing for custom mounts and baffles to hold the PS5’s parts and direct airflow where it needs to go. This was made much, much easier for [Zac] via the use of a 3D scanner. If you haven’t used one, this project demonstrates how handy they can be — and also some of the limitations, as the structured-light device (a Creality Raptor) had trouble with the shinier parts of the build. Dealing with that trouble still saved [Zac] a lot of time and effort compared to measuring everything.

While we missed [Zac]’s desk build, we’ve seen his work before: everything from a modernized iPod to wooden sound diffusion panels.


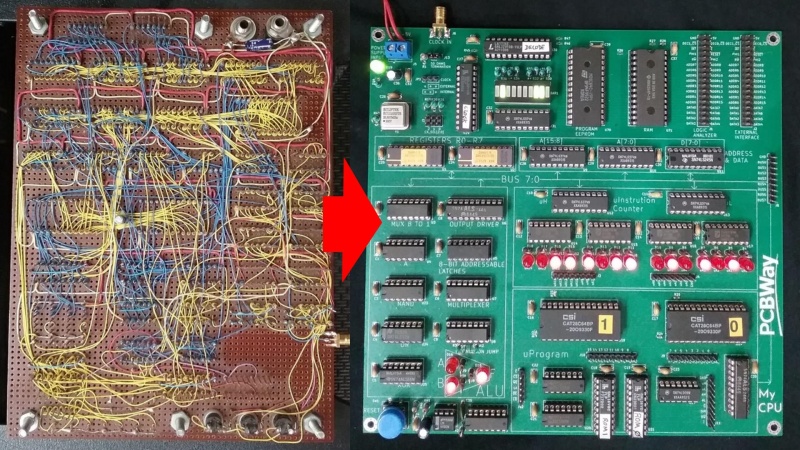

[Sylvain Fortin] recently wrote in to tell us about his Homebrew CPU Project, and the story behind this one is truly remarkable.

He began working on this toy CPU back in 1994, over thirty years ago, after learning about the 74LS181 ALU in college. He made considerable progress in the 90s, then shelved the project until the pandemic hit, when he picked it back up and started adding new features. A little later, a board house offered to cover the production cost if he’d redo the wire-wrapped project on a PCB. The resulting KiCad files are in the GitHub repository for anyone who wants to play along at home.

An early prototype on breadboard


The ALU on [Sylvain]’s CPU is a 1-bit ALU which he describes as essentially a selectable gate: OR, XOR, AND, NOT. It requires more clock steps to compute something like an addition, but, he tells us, it’s much more challenging and interesting to manage at the microcode level. On his project page you will find various support software written in C#, such as an op-code assembler and a microcode assembler, among other things.
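To get a feel for why addition costs extra clock steps, here’s a sketch that models a 1-bit selectable-gate ALU and ripples an 8-bit add through it one bit at a time. The operation encoding, the five-gate full adder per bit, and all names are our assumptions rather than [Sylvain]’s microcode; it simply counts how many single-gate ALU operations the add takes.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical 1-bit "selectable gate" ALU: each step computes one gate on one bit.
enum class Op { OR, XOR, AND, NOT };

struct OneBitAlu {
    unsigned steps = 0;   // ALU operations (clock steps) used so far
    bool run(Op op, bool a, bool b = false) {
        ++steps;
        switch (op) {
            case Op::OR:  return a || b;
            case Op::XOR: return a != b;
            case Op::AND: return a && b;
            case Op::NOT: return !a;
        }
        return false;
    }
};

// Ripple an 8-bit addition through the 1-bit ALU, one bit position at a time,
// using a full adder per bit: sum = a ^ b ^ c, carry = (a & b) | (c & (a ^ b)).
std::uint8_t serialAdd(OneBitAlu& alu, std::uint8_t x, std::uint8_t y) {
    bool carry = false;
    std::uint8_t result = 0;
    for (int i = 0; i < 8; ++i) {
        bool a = (x >> i) & 1;
        bool b = (y >> i) & 1;
        bool axb = alu.run(Op::XOR, a, b);
        bool sum = alu.run(Op::XOR, axb, carry);
        bool generate = alu.run(Op::AND, a, b);
        bool propagate = alu.run(Op::AND, axb, carry);
        carry = alu.run(Op::OR, generate, propagate);
        if (sum) result |= static_cast<std::uint8_t>(1u << i);
    }
    return result;   // the final carry out is dropped (8-bit wraparound)
}
```

In this version an 8-bit add costs 40 ALU operations, before counting the bit fetches and shifts a real microcode sequence would also need.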

For debugging, [Sylvain] started out with das blinkin LEDs but soon found them too limiting. He upgraded to a 136-channel Agilent 1670G benchtop logic analyzer, which he was fortunate to score cheaply on eBay. You can tell this thing is old from the floppy drive on the front panel, but it is rocking 136 channels, which is seriously OP.

The PCB version is a great improvement but we were interested in the initial wire-wrapped version too. We asked [Sylvain] for photos of the wire-wrapping and he obliged. There’s just something awesome about a wire-wrapped project, don’t you think? If you’re interested in wire-wrapping check out Wire Wrap 101.


My first encounter with C++ was way back in the 1990s, when it was one of the Real Programming Languages that I sometimes heard about while I was still splashing about in the kiddie pool with Visual Basic, PHP and JavaScript. The first formally standardized version of C++ is the ISO 1998 standard, but by then it had been making headway as a ‘better C’ for nearly two decades, ever since Bjarne Stroustrup added that increment operator to C in 1979 and released C++ to the public in 1985.


Why did I pick C++ as my primary programming language? Mainly because it was well supported, with free tooling: a free Borland compiler or g++ on the GCC side. Alternatives like VB, Java, and D felt far too niche compared to established languages, whereas C++ gave you access to the lingua franca of C while adding many modern features like OOP and a more streamlined syntax, plus the Standard Template Library (STL) with gobs of useful building blocks.

Years later, as a grizzled senior C++ developer, I have come to embrace the notion that being good at a programming language also means having strong opinions on all that is wrong with the language. True to form, while C++ has many good points, there are still major warts and many heavily neglected aspects that get me and other C++ developers riled up.

Why We Fell In Love

Cover of the third edition of The C++ Programming Language by Bjarne Stroustrup.


What frightened me about C++ initially was just how big and scary it seemed, with gargantuan IDEs like Microsoft’s Visual Studio, complex build systems, and graphical user interfaces that seemed to require black magic far beyond my tiny brain’s comprehension. Although using the pure C-based Win32 API does indeed require ritual virgin sacrifices, and Windows developers only talk about MFC when put under extreme duress, the truth is that C++ itself is rather simple, and writing complex applications is easy once you break them down into steps. For me the breakthrough came after buying a copy of Stroustrup’s The C++ Programming Language, specifically the third edition that covered the C++98 standard.

More than just a reference, it laid out clearly for me not only how to write basic C++ programs, but also how to structure my code and projects, along with the reasoning behind each of these aspects. For many years this book was my go-to resource as I developed my rudimentary, scripting language-afflicted skills into something more robust.

Probably the best part about C++ is its flexibility. It never limits you to a single programming paradigm, giving you the freedom to pick the desire path of your choice. Although an astounding number of poor choices can be made here, with a modicum of care and research you do not have to end up hoisted with your own petard. Straying into the C-compatibility part of C++ is especially fraught with hazards, but that’s why we have the C++ bits, so that we don’t have to touch those.

Reflecting With C++11

It would take until 2011 for the first major update to the C++ standard, by which time I had been using C++ mostly for increasingly elaborate hobby projects. But then I got tossed into a number of commercial C and C++ projects that would put my burgeoning skills to the test. Around this time I ran into the first truly vexing aspects of C++.

Common issues like header-include order and link order, both of which can lead to circular dependencies, are among these truly delightful aspects. The former is mostly caused by the rather simplistic way that header files are just slapped straight into the source code by the preprocessor. Like in C, the preprocessor simply looks at your #include "widget/foo.h" and replaces it with the contents of foo.h with absolutely no consideration for side effects or cases of spontaneous combustion.
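Since #include really is just a paste, the usual defenses are include guards (or the widely supported but non-standard #pragma once) and forward declarations to break include cycles. Here’s a single-file sketch that pastes a hypothetical widget/foo.h twice to show the guard doing its job, with Foo and Bar pointing at each other through a forward declaration rather than including each other’s headers.

```cpp
#include <cassert>

// --- Contents of a hypothetical "widget/foo.h", pasted in by #include ---
#ifndef WIDGET_FOO_H
#define WIDGET_FOO_H
class Bar;                      // forward declaration instead of #include "bar.h"
class Foo {
public:
    Bar* partner = nullptr;     // pointers only need the declaration, not the definition
    int id = 1;
};
#endif

// --- The same header pasted again (e.g. pulled in through another header) ---
#ifndef WIDGET_FOO_H            // guard already defined, so this copy is skipped
#define WIDGET_FOO_H
class Bar;
class Foo { public: Bar* partner = nullptr; int id = 1; };
#endif

// --- Contents of a hypothetical "widget/bar.h" ---
#ifndef WIDGET_BAR_H
#define WIDGET_BAR_H
class Bar {
public:
    Foo* owner = nullptr;       // Foo is already a complete type here
    int id = 2;
};
#endif

// Link a Foo and a Bar to each other; both types are complete at this point.
int linkedIds(Foo& f, Bar& b) {
    f.partner = &b;
    b.owner = &f;
    return f.partner->id * 10 + b.owner->id;
}
```

Without the guard, the second paste would redefine Foo and the compiler would stop right there; without the forward declaration, foo.h and bar.h would have to include each other.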

Along the way, further preprocessor statements mangle the code in happy-fun ways, which is why GCC’s g++ and compatible compilers like Clang have the -E flag to run only the preprocessor, so that you can inspect the preprocessed barf that was about to be sent to the compiler before it violently exploded. The trauma suffered here is why I heartily agree with Mr. Stroustrup that the preprocessor is basically evil and should only be used for the most basic stuff like includes, very simple constants, and conditional compilation. Never try to be cute or smart with the preprocessor, or whoever inherits your codebase will find you.
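A tiny example of why the preprocessor deserves that suspicion: a macro is pasted in as raw tokens, so operator precedence at the call site can change its meaning, while a constexpr function behaves like ordinary C++. Running g++ -E on a file like this shows the pasted expression. The names are illustrative.

```cpp
#include <cassert>

// Pasted as tokens: SQUARE_MACRO(1 + 2) becomes 1 + 2 * 1 + 2.
#define SQUARE_MACRO(x) x * x

// A constexpr function can still be evaluated at compile time, but its
// argument is evaluated once, as a value, with normal C++ semantics.
constexpr int square(int x) { return x * x; }
static_assert(square(4) == 16, "usable in constant expressions");

int macroResult() { return SQUARE_MACRO(1 + 2); }   // 1 + 2 * 1 + 2 == 5
int functionResult() { return square(1 + 2); }      // 3 * 3 == 9
```

Even the properly parenthesized ((x) * (x)) version still evaluates its argument twice, which is why a constexpr function is usually the better tool.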

If you got your code’s architectural issues and header includes sorted out, you’ll find that the linker you use with C++ is just as dumb as the one for C. After being handed the compiled object files and looking at the needed symbols, it’ll waddle through the list of libraries, look at each one once, in order, and happily ignore symbols in libraries it has already passed, even if they turn out to be needed later. You’ll suffer for this with tools like ldd and readelf as you try to determine whether you are just dense, the linker is dense, or both are having buoyancy issues.
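To make the link-order problem concrete, here’s a toy model of a one-pass static library scan, loosely following how GNU ld treats .a archives unless you wrap them in --start-group/--end-group. It’s a simplification (real linkers pull in individual object files from an archive, not the whole thing), and the Library struct and function name are made up.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Toy model of a library on the link line (hypothetical type).
struct Library {
    std::string name;
    std::set<std::string> defines;   // symbols the library provides
    std::set<std::string> needs;     // symbols its code references
};

// Returns the symbols still undefined after scanning the libraries once, in order.
std::set<std::string> unresolvedAfterLink(std::set<std::string> undefined,
                                          const std::vector<Library>& libs) {
    std::set<std::string> defined;
    for (const Library& lib : libs) {
        bool useful = false;
        for (const std::string& sym : lib.defines)
            if (undefined.count(sym)) useful = true;
        if (!useful) continue;   // nothing needed right now: skipped for good

        for (const std::string& sym : lib.defines) {
            defined.insert(sym);
            undefined.erase(sym);
        }
        for (const std::string& sym : lib.needs)
            if (!defined.count(sym)) undefined.insert(sym);   // new pending references
    }
    return undefined;   // libraries earlier in the list are never revisited
}
```

Put a library that needs bar in front of the one defining bar and everything resolves; swap them and the bar library gets skipped because nothing needed bar yet when the linker passed it, which is the classic "list a library after the things that use it" rule.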

These points alone are pretty traumatic, but you learn to cope with them like you cope with a gaggle of definitely teething babies a few rows behind you on that transatlantic flight. The worst part is probably that neither C++11 nor subsequent standards have addressed either to any noticeable degree, and a shift from C-style compile units to Ada-like modules is probably never going to happen.

The ‘modules at home‘ feature introduced with C++20 is effectively just C-style headers minus the preprocessor baggage, still lacking the dependency analysis and other features that make languages like Ada such a joy to build code with.

Non-Deterministic Initialization

Although C++, and C++11 in particular, removes a lot of the undefined behavior that C is infamous for, there are still many parts where the behavior is effectively random or at least platform-specific. One such example is static initialization, with the problem commonly known as the static initialization order fiasco. Essentially, the order in which static objects in different compile units are initialized is unspecified, so you cannot count on a static variable in one compile unit having been initialized by the time code in another compile unit uses it during startup.

This can also bite within a single compile unit, for example when you initialize a static std::map instance with data during static initialization, as I learned the hard way during a project when I saw random segmentation faults on start-up related to the static data structure instance. The executive summary here is that you should not assume that anything has been implicitly initialized during application startup; instead, explicitly initialize such static structures.

An example of this can be found in my NymphRPC project, in which I used this same solution to prevent initialization crashes. This involves explicitly creating the static map rather than praying that it gets created in time:

static map<UInt32, NymphMethod*> &methodsIdsStatic = NymphRemoteClient::methodsIds();

With the methodsIds() function:

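map<UInt32, NymphMethod*>& NymphRemoteClient::methodsIds() {
    // Construct-on-first-use: the map is allocated on the first call,
    // so it always exists before anyone gets a reference to it.
    static map<UInt32, NymphMethod*>* methodsIdsStatic = new map<UInt32, NymphMethod*>();
    return *methodsIdsStatic;
}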
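The same construct-on-first-use idiom can be shown in a self-contained form. This is a minimal sketch, not NymphRPC’s actual code: Method, registry() and Registrar are hypothetical names, and std::uint32_t stands in for the project’s UInt32 type. A static Registrar object adds an entry during static initialization; because it goes through registry() rather than touching a global map directly, the map is guaranteed to exist at that point.

```cpp
#include <cstdint>
#include <map>
#include <string>

// Hypothetical stand-in for a method descriptor such as NymphMethod.
struct Method {
    std::string name;
};

// Construct-on-first-use: the map is created on the first call to registry(),
// whichever compile unit makes that call. It is intentionally never deleted,
// so it also stays valid while other statics are destroyed at exit.
std::map<std::uint32_t, Method*>& registry() {
    static auto* instance = new std::map<std::uint32_t, Method*>();
    return *instance;
}

// Registers a method from a static initializer. Going through registry()
// means the map exists even if this object is initialized before any
// other static object in the program.
struct Registrar {
    Registrar(std::uint32_t id, Method* method) { registry()[id] = method; }
};

static Method ping{"ping"};
static Registrar pingRegistrar{1, &ping};
```

Since C++11, function-local statics are also initialized in a thread-safe way, so the first call to registry() is safe even if it happens from more than one thread.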

It is these kinds of niggles, along with the build-time issues covered earlier, that tend to sap a lot of time during development until you learn to recognize them in advance, along with their fixes.

Fading Love

Don’t get me wrong, I still think that C++ is a good programming language at its core; it’s just that it has those rough spots and sharp edges that you wish weren’t there. There is also the lack of improvements to some rather fundamental aspects of the STL, such as the unloved C++ string library. Compared to Ada standard library strings, the C++ STL string API is very barebones, with a lot of string manipulation requiring writing the same tedious code over and over, as convenience functions are apparently on nobody’s wish list.
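To give a flavor of the boilerplate in question, here is a minimal sketch of two helpers that many C++ codebases end up writing by hand: trimming whitespace and splitting on a delimiter. The names trim and split are hypothetical; neither is part of the standard library.

```cpp
#include <cctype>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Removes leading and trailing whitespace (as classified by std::isspace).
std::string trim(const std::string& s) {
    auto isSpace = [](unsigned char c) { return std::isspace(c) != 0; };
    std::size_t begin = 0;
    while (begin < s.size() && isSpace(s[begin])) ++begin;
    std::size_t end = s.size();
    while (end > begin && isSpace(s[end - 1])) --end;
    return s.substr(begin, end - begin);
}

// Splits on a single delimiter character, keeping empty fields.
std::vector<std::string> split(const std::string& s, char delim) {
    std::vector<std::string> parts;
    std::string field;
    std::istringstream in(s);
    while (std::getline(in, field, delim)) parts.push_back(field);
    // getline() does not report a trailing empty field (as in "a,"), so add it.
    if (!s.empty() && s.back() == delim) parts.push_back("");
    return parts;
}
```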

One good thing that C++11 brought to the STL was multi-tasking support, with threads, mutexes and so on finally natively available. It’s just a shame that its condition variables are plagued by spurious wake-ups and a more complicated syntax than necessary. This gets even worse with the Filesystem library that got added in C++17. Although it’s nice to have more than just basic file I/O in C++ by default, it is based on the library in Boost, which uses a coding style, type encapsulation obsession, and abuse of namespaces that you apparently either love or hate.

I personally have found the POCO C++ libraries to be infinitely easier to use, with a relatively easy to follow implementation. I even used the POCO libraries for the NPoco project, which adapts the code to microcontroller use and adds FreeRTOS support.

Finally, there are some core language changes that I fundamentally disagree with, such as the addition of type inference with the auto keyword outside of templates, which makes code read as if it were weakly typed. As if it wasn’t bad enough to have the chaos of mixed explicit and implicit type casting, now we fully put our faith into the compiler, pray nobody updates code elsewhere that may cause explosions later on, and remove any type-related cues that could be useful to a developer reading the code.

But at least we got constexpr, which is probably incredibly useful to people who use C++ for academic dissertations rather than actual programming.

Hope For The Future

I’ll probably keep using C++ for the foreseeable future, while grumbling about all of ’em whippersnappers adding useless things that nobody was asking for. Since the general take on adding new features to C++ is that you need to do all the legwork yourself – like getting into the C++ working groups to promote your feature(s) – it’s very likely that few actually needed features will make it into new C++ standards, as those of us who are actually using the language are too busy doing things like writing production code in it, while simultaneously being completely uninterested in working group politics.

Fortunately there is excellent backward compatibility in C++, so those of us in the trenches can keep using the language any way we like along with all the patches we wrote to ease the pains. It’s just sad that there’s now such a split forming between C++ developers and C++ academics.

It’s one of the reasons why I have felt increasingly motivated over the past years to seek out other languages, with Ada being one of my favorites. Unlike C++, it doesn’t have the aforementioned build-time issues, and while its super-strong type system makes getting started with writing the business logic slower, it prevents so many issues later on, along with its universal runtime bounds checking. It’s not often that using a programming language makes me feel something approaching joy.

Giving up on a programming language with which you quite literally grew up is hard, but as in any relationship you have to be honest about any issues, no matter whether it’s you or the programming language. That said, maybe some relationship counseling will patch things up again in the future, with us developers once again involved in the language’s development.

This week, Hackaday’s Elliot Williams and Kristina Panos joined forces to bring you the latest news, mystery sound, and of course, a big bunch of hacks from the previous week.

In Hackaday news, the One Hertz Challenge ticks on. You have until Tuesday, August 19th to show us what you’ve got, so head over to Hackaday.IO and get started now! In other news, we’ve just wrapped the call for Supercon proposals, so you can probably expect to see tickets for sale fairly soon.

On What’s That Sound, Kristina actually got this one with some prodding. Congratulations to [Alex] who knew exactly what it was and wins a limited edition Hackaday Podcast t-shirt!

After that, it’s on to the hacks and such, beginning with a ridiculously fast Benchy. We take a look at a bunch of awesome 3D prints, including a PEZ blaster and a cowbell that rings true. Then we explore chisanbop, which is not actually K-Pop for toddlers, as well as a couple of clocks. Finally, we talk a bit about dithering before taking a look at the top tech of 1985 as shown in Back to the Future.

Check out the links below if you want to follow along, and as always, tell us what you think about this episode in the comments!

Download in DRM-free MP3 and savor at your leisure.

Where to Follow Hackaday Podcast

Places to follow Hackaday podcasts:

iTunes, Spotify, Stitcher, RSS, YouTube

Check out our Libsyn landing page

Episode 328 Show Notes:

News:

Announcing The 2025 Hackaday One Hertz Challenge

What’s that Sound?

Congratulations to [Alex] for knowing it was the Scientist NPC from Half-Life.

Interesting Hacks of the Week:

Managing Temperatures For Ultrafast Benchy Printing
3D-Printed Parts Don’t Slow Down This Speedy Printer
When Is A Synth A Woodwind? When It’s A Pneumatone
Budget Brilliance: DHO800 Function Generator
Android-Powered Rigol Scopes Go Wireless
I Gotta Print More Cowbell
Kids Vs Computers: Chisanbop Remembered
Pez Blaster Shoots Candy Dangerously Fast
Hackaday Supercon 2024: Lightning Talks – YouTube

Quick Hacks:

Elliot’s Picks:
IR Point And Shoot Has A Raspberry Heart In A 35mm Body
Turning PET Plastic Into Paracetamol With This One Bacterial Trick
Five-minute(ish) Beanie Is The Fastest We’ve Seen Yet

Kristina’s Picks:
CIS-4 Is A Monkish Clock Inside A Ceiling Lamp
3D Printer Turbo-Charges A Vintage Vehicle
Shadow Clock Shows The Time On The Wall

Can’t-Miss Articles:

Dithering With Quantization To Smooth Things Over
Back To The Future, 40 Years Old, Looks Like The Past

[Tom] has taken a DIY approach to smart sailing with a Raspberry Pi as the back end to the navigation desk on his catamaran, the SeaHorse. He keeps the single board computer tucked away neatly in a waterproof box with a silicone gasket, safe from circuit-destroying salt water. Keeping a board sealed up so tightly also means that it can get a little too warm, so he under-clocks the CPU to make it generate less heat. This also has the added benefit of saving power, which is always good when you aren’t connected to the grid for long stretches of time.

A pair of obsolescent phones and a repurposed laptop screen provide display surfaces for his navdesk. With these screens he has weather forecasts, maps, GPS, depth, speed over ground — all the data from all the onboard instruments a sailor could want to stream through a boat’s WiFi network — at his fingertips.

There’s still much to be done. Among other things, he’s added a software-defined radio to the Pi to integrate radio monitoring into the system, and he’s started experimenting with reprogramming a buoy transmitter, originally designed for tracking fishing nets, so that it can transmit his boat’s location, speed and heading instead.

The software that ties much of this system together is the open source navigational platform OpenCPN which, with its support for third-party plugins, looks like a great choice for experimenting with new gadgets like fishing net buoy transmitters.

For more nautical computing fun check out this open source shipboard computer, and this data-harvesting, Arduino-driven buoy.

Thanks to [Andrew Sheldon] for floating this one our way.

@jack is back with a weekend project. Yes, that Jack. [Jack Dorsey] spent last weekend learning about Bluetooth meshing, and built Bitchat, a BLE mesh encrypted messaging application. It uses X25519 for key exchange, and AES-GCM for message encryption. [Alex Radocea] took a look at the current state of the project, suspects it was vibe coded, and points out a glaring problem with the cryptography.

So let’s take a quick look at the authentication and encryption layer of Bitchat. The whitepaper is useful, but still leaves out some of the important details, like how the identity key is tied to the encryption keys. The problem here is that it isn’t.

Bitchat has, by necessity, a trust-on-first-use authentication model. There is intentionally no authentication central authority to verify the keys of any given user, and the application hasn’t yet added an out-of-band authentication method, like scanning QR codes. Instead, it has a favorites system, where the user can mark a remote user as a favorite, and the app saves those keys forever. There isn’t necessarily anything wrong with this approach, especially if users understand the limitations.

The other quirk is that Bitchat uses ephemeral keys for each chat session, in an effort to have some forward secrecy. In modern protocols, it’s desirable to have some protection against a single compromised encryption key exposing all the messages in the chain. It appears that Bitchat accomplishes this by generating dedicated encryption keys for each new chat session. But those ephemeral keys aren’t properly verified. In fact, they aren’t verified by a user’s identity key at all!

The attack, then, is to send a private message to another user, present the public key of whoever you’re trying to impersonate, and include new ephemeral encryption keys. Even if your target has this remote user marked as a favorite, the new encryption keys are trusted. So the victim thinks this is a conversation with a trusted person, and it’s actually a conversation with an attacker. Not great.

Now when you read the write-up, you’ll notice it ends with [Alex] opening an issue on the Bitchat GitHub repository, asking how to do security reports. The issue was closed without comment, and that’s about the end of the write-up. It is worth pointing out that the issue has been re-opened, and updated with some guidance on how to report flaws.

Post-Quantum Scanning

There’s a deadline coming. Depending on where you land on the quantum computing skepticism scale, it’s either the end of cryptography as we know it, or a pipe dream that’s always going to be about 10 years away. My suspicion happens to be that keeping qubits in sync is a hard problem in much the same way that factoring large numbers is a hard problem. But I don’t recommend basing your cryptography on that hunch.

Governments around the world are less skeptical of the quantum computer future, and have set specific deadlines to migrate away from quantum-vulnerable algorithms. The issue here is that finding all those uses of “vulnerable” algorithms is quite the challenge. TLS, SSH, and many more protocols support a wide range of cryptography schemes, and only a few are considered Post Quantum Cryptography (PQC).

Anvil Secure has seen this issue, and released an Open Source tool to help. Pqcscan is a simple idea: Scan a list of targets and collect their supported cryptography via an SSH and TLS scan. At the end, the tool generates a simple report of how many of the endpoints support PQC. This sort of compliance is usually no fun, but having some decent tools certainly helps.
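The reporting step can be pictured with a small sketch: given the key-exchange algorithms each endpoint offered, count how many include at least one post-quantum option. This is not how Pqcscan is implemented; the ScanResult type is hypothetical, and the short list of algorithm names (the hybrid TLS group X25519MLKEM768 and the OpenSSH hybrid key exchanges mlkem768x25519-sha256 and sntrup761x25519-sha512@openssh.com) is an illustrative assumption. A real tool would need a complete, maintained list.

```cpp
#include <algorithm>
#include <cstddef>
#include <set>
#include <string>
#include <vector>

// Hypothetical scan result: one endpoint and the key exchanges it offered.
struct ScanResult {
    std::string endpoint;
    std::vector<std::string> keyExchanges;
};

// Illustrative, incomplete set of post-quantum (hybrid) key exchange names.
const std::set<std::string>& pqcAlgorithms() {
    static const std::set<std::string> names = {
        "X25519MLKEM768",                      // TLS hybrid group
        "mlkem768x25519-sha256",               // SSH hybrid key exchange
        "sntrup761x25519-sha512@openssh.com",  // SSH hybrid key exchange
    };
    return names;
}

// Counts endpoints that offered at least one algorithm from the set above.
std::size_t countPqcCapable(const std::vector<ScanResult>& results) {
    return static_cast<std::size_t>(std::count_if(
        results.begin(), results.end(), [](const ScanResult& r) {
            return std::any_of(r.keyExchanges.begin(), r.keyExchanges.end(),
                               [](const std::string& kex) {
                                   return pqcAlgorithms().count(kex) > 0;
                               });
        }));
}
```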

CitrixBleed 2

Citrix devices have a problem. Again. The nickname for this particular issue is CitrixBleed 2, which hearkens all the way back to Heartbleed. The “bleed” here refers to an attack that leaks little bits of memory to attackers. We know that it’s related to an endpoint called doAuthentication.do.

The folks at Horizon3 have a bit more detail: it’s a memory management issue, where structures are left pointing to arbitrary memory locations. The important thing is that when an incomplete login message is received, the code leaks 127 bytes of memory at a time.

What makes this vulnerability particularly bad is that Citrix didn’t share any signs of attempted exploitation. Researchers have evidence of this vulnerability being used in the wild as far back as July 1st. That’s particularly a problem because the memory leak is capable of revealing session keys, allowing for further exploitation. Amazingly, in an email to Ars Technica, Citrix still refused to admit that the flaw was being used in the wild.

Opossum

We have a new TLS attack, and it’s a really interesting approach. The Opossum Attack is a Man in the Middle (MitM) attack that takes advantage of opportunistic TLS. This TLS upgrade approach isn’t widely seen outside of email protocols and the like, where the StartTLS command is used. The important point here is that these protocols allow a connection to be initiated in plaintext and then upgraded to TLS.

The Opossum attack happens when an attacker in a MitM position intercepts a new TCP connection bound for a TLS-only port. The attacker then initiates a plaintext connection to that remote resource, using the opportunistic port. The attacker can then issue the command to start a TLS upgrade, and like an old-time telephone operator, patch the victim through to the opportunistic port with the session already in progress.

The good news is that this attack doesn’t result in encryption compromise. The basic guarantees of TLS remain. The problem is that there is now a mismatch between exactly how the server and client expect the connection to behave. There is also some opportunity for the attacker to poison that connection before the TLS upgrade takes place.

TSAs

AMD has announced yet another new Transient Execution attack, the Transient Scheduler Attack. The AMD PDF has a bit of information about this new approach. The newly discovered leak primitive is the timing of CPU instructions, as instruction load timings may be affected by speculative execution.

The mitigation for this attack is similar to others: AMD recommends running the VERW instruction when transitioning between kernel and user code. The information leakage is not between threads, and so far appears to be inaccessible from within a web browser, which cuts down the real-world exploitability of this new speculative execution attack significantly.

Bits and Bytes

The majority of McDonald’s franchises use the McHire platform for hiring employees, because of course it’s called “McHire”. This platform uses AI to help applicants work through the application process, but the issues found weren’t prompt injection or anything to do with AI. In this case, it was a simple default username and password, 123456:123456, that gave access to a test instance of the platform. No real personal data, but plenty of clues to how the system worked: an accessible API used a simple incrementing ID and had no authentication to protect the data. So step backwards through all 64 million applications, and all that entered data was available to peruse. Yikes! The test credentials were pulled less than two hours after disclosure, which is an impressive turn-around.
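The underlying bug class, often called an insecure direct object reference, is easy to sketch: if records are looked up by a sequential ID with no check of who is asking, anyone can walk the whole ID range. This minimal sketch uses a hypothetical in-memory ApplicationStore; it is not McHire’s code, and the ownership check shown is one illustrative fix.

```cpp
#include <cstdint>
#include <map>
#include <optional>
#include <string>
#include <utility>

// Hypothetical job application record.
struct Application {
    std::string ownerAccount;
    std::string applicantName;
};

class ApplicationStore {
public:
    // Sequential IDs: 1, 2, 3, ... are trivial to enumerate.
    std::uint64_t add(Application app) {
        std::uint64_t id = nextId_++;
        records_[id] = std::move(app);
        return id;
    }

    // Vulnerable lookup: returns any record for any ID it is given.
    std::optional<Application> fetchInsecure(std::uint64_t id) const {
        auto it = records_.find(id);
        if (it == records_.end()) return std::nullopt;
        return it->second;
    }

    // Safer lookup: the requesting account must own the record.
    std::optional<Application> fetchForAccount(const std::string& account,
                                               std::uint64_t id) const {
        auto it = records_.find(id);
        if (it == records_.end() || it->second.ownerAccount != account)
            return std::nullopt;
        return it->second;
    }

private:
    std::uint64_t nextId_ = 1;
    std::map<std::uint64_t, Application> records_;
};
```

Switching to random IDs would only make enumeration harder; the authentication and ownership check is what actually closes the hole.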

When you’ve been hit by a ransomware attack, it may seem like the criminals on the other side are untouchable. But once again, international law enforcement has arrested members of high-profile ransomware gangs. This time it’s members of Scattered Spider who were arrested in the UK.

And finally, the MCP protocol is once again making security news. As quickly as the world of AI is changing, it’s not terribly surprising that bugs and vulnerabilities are being discovered in this very new code. This time it’s mcp-remote, which can be coerced to run arbitrary code when connecting to a malicious MCP server. Connect to server, pop calc.exe. Done.

We’re all used to crystal resonators — they provide pretty accurate frequency references for oscillators with low enough drift for most of our purposes. As the quartz equivalent of a tuning fork, they work by vibrating at their physical resonant frequency, which means that just like a tuning fork, it should be possible to listen to them.

A crystal in the MHz range might be difficult to listen to, but for a 32,768 Hz watch crystal it’s possible with a standard microphone and sound card. [SimonArchipoff] has written a piece of software that graphs the frequency of a watch crystal oscillator, to enable small adjustments to be made for timekeeping.

Assuming a microphone and sound card that aren’t too awful, it should be easy enough to listen to the oscillation, so the challenge lies in keeping accurate time. The frequency is compared to the sound card clock which is by no means perfect, but the trick lies in using the operating system clock to calibrate that. This master clock can be measured against online NTP sources, and can thus become a known quantity.

We think of quartz clocks as pretty good, but he points out how little it takes to cause a significant drift over month-scale timings. If your quartz clock’s accuracy is important to you, perhaps you should give it a look. You might need it for your time reference.

Header: Multicherry, CC BY-SA 4.0.

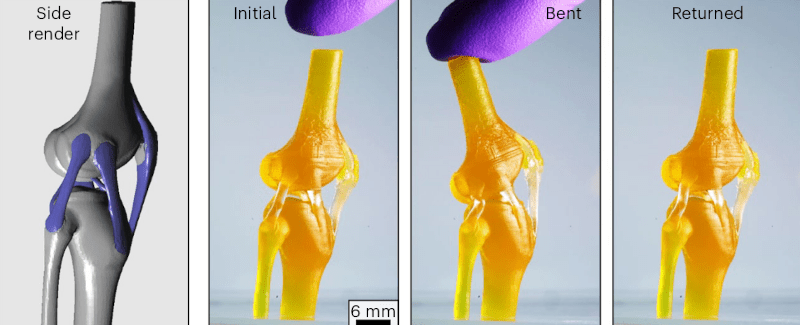

Using light to 3D print liquid resins is hardly a new idea. But researchers at the University of Texas at Austin want to double down on the idea. Specifically, they use a resin with different physical properties when cured using different wavelengths of light.

Natural constructions like bone and cartilage inspired the researchers. With violet light, the resin cures into a rubbery material. However, ultraviolet light produces a rigid cured material. Many of their test prints are bio-analogs, unsurprisingly.

Even more importantly, the resin materials connect naturally, so you don’t have as much worry about a piece made with two materials delaminating at the interface. You can control the exact properties by shifting the light frequency one way or another. We read the actual paper, but it wasn’t clear to us if, after curing in a rubbery state, the part could still cure hard in, for example, sunlight. The paper is available in Nature Materials, but if you don’t have a subscription, try your local library or university.

Maybe just the thing for that tunable laser project. Of course, you can use multicolor FDM printers with two types of filaments. You only need to convert the model over.

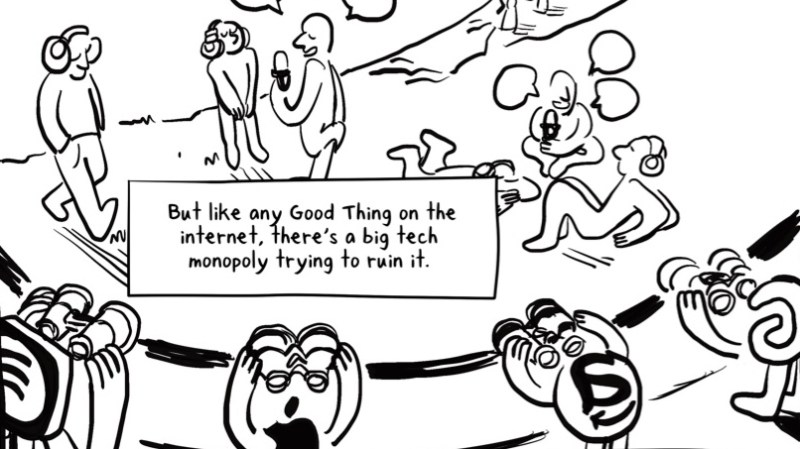

While we know that many of you are reading Hackaday via our Really Simple Syndication (RSS) feed, we suspect that most people on the street wouldn’t know that it underlies a lot of the modern internet. [A. McNamee] and [A. Service] have created an illustrated history of RSS that proudly proclaims RSS is (not) dead (yet)!

While tens of millions of users used Google Reader before it was shut down, social media and search companies have tried to squeeze independent blogs and websites for an increasingly large part of their revenue, making it more and more difficult to exist outside the walled gardens of Facebook, Apple, Google, etc. Apart from those of you who remember it, RSS has been mostly forgotten.

RSS has been the backbone of the podcast industry, however, quietly serving feeds to millions of users everywhere with few of them aware that an open protocol from the 90s was serving up their content. As with every other corner of the internet where money could be made, corporate raiders have come to scoop up creators and skim the profits for themselves. Spotify has been the most egregious actor here, but the usual suspects of Apple, Google, and Amazon are also making plays to enclose the podcast commons.

If you’d like to learn more about how big tech is sucking the life out of the internet (and possibly how to reverse the enshittification) check out Cory Doctorow’s keynote from our very own Supercon.

Immutable distributions are slowly spreading across the Linux world– but should you care? Are they hacker friendly? What does “immutable” mean, anyway?

Immutable means “not subject or susceptible to change” according to Merriam-Webster, which is not 100% accurate in this context, but it’s close enough and the name is there so we’re stuck with it. Immutable distributions are subject to change, it’s just that how you change them is quite a bit different than bog-standard Linux. Will this matter to you? Read on to find out! (Or, if you know the answers already, read on to find out how angry you should be in the comments section.)

Immutability is cloud-based thinking: the system has a known-good state, and it’s always in it. Everything that is not part of the core system is containerized and controlled. I’m writing this from a KDE-based distribution called Aurora, part of the Universal Blue project that builds on Fedora’s Atomic Desktop work. It bills itself as being for “lazy developers”.

The advantage to this hypothetical lazy dev is that the base system is already built, and you can’t get distracted messing around with it. It works, and it isn’t at all likely to break. Every installation is essentially identical to every other installation, which means reproducibility is all but guaranteed. No more faffing about arguing on forums to figure out which library is conflicting with which. In an immutable system, they’ve all been selected to play well together, and anything else is safely containerized. (Again, a cloud ideal.) If the devs make a mistake during an update, well, just roll back!

50 Shades of Immutability

The different flavours of immutable Linux differ in how they accomplish that, but all have rollbacks as a basic capability. Each change to the system becomes a new, indivisible image; that’s why we talk about atomic updates. You create a new system image when you update, but you don’t start using it until you reboot the system. (This has some advantages to stability, as you might imagine, although the rebooting can get old.) The old image is maintained on your system, just in case you happen to need it.
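The stage, reboot and roll back cycle can be modeled as a toy in a few lines. This is a hypothetical sketch of the idea only: OSTree and Btrfs snapshots work very differently under the hood, and the DeploymentManager name and its methods are invented for illustration.

```cpp
#include <optional>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Toy model of atomic updates: images are never modified in place, only
// staged, switched to at boot, and kept around for rollback.
class DeploymentManager {
public:
    explicit DeploymentManager(std::string initialImage)
        : booted_(std::move(initialImage)) {}

    // An update produces a complete new image but leaves the running one alone.
    void stage(std::string newImage) { staged_ = std::move(newImage); }

    // Only a reboot switches to the staged image; the old one is retained.
    void reboot() {
        if (!staged_) return;
        previous_.push_back(booted_);
        booted_ = *staged_;
        staged_.reset();
    }

    // Boot the most recent previous image again (a reboot is implied; this
    // toy simply discards the newer image).
    void rollback() {
        if (previous_.empty()) throw std::runtime_error("nothing to roll back to");
        booted_ = previous_.back();
        previous_.pop_back();
    }

    const std::string& booted() const { return booted_; }

private:
    std::string booted_;
    std::optional<std::string> staged_;
    std::vector<std::string> previous_;
};
```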

MicroOS and its descendants (like Aeon) use a system based on Btrfs snapshots to provide rollbacks. Fedora’s atomic desktops, like Silverblue, and the Universal Blue downstreams based on Fedora, like Bazzite or Aurora, use a system called OSTree, which is considerably more complex and more interesting. You can do something similar with Nix, of course, but that is a whole other kettle of fish.

OSTree bills itself as “Git for operating system binaries”. Every update, or every package installed is layered onto the tree and can be rolled back if needed– en masse, or individually. You can package up that tree of commits, and deploy it onto a new system, making devising new “distros” so trivial they don’t really deserve the name. In theory, you can install everything via OSTree, but the further you take your system from the base image, the less you have that “every system is identical” easy-problem-solving that the immutable guys like to talk about.

Of course you do want to install applications, and you do it the same way you might on a server: in containers. What sort of containers can vary by taste, but typically that means Flatpak for GUI applications. Fedora-based immutable distributions like Silverblue or Aurora use Flatpak, as does openSUSE. (AppImage and snap are also options, technically speaking, but who likes snaps?) The Universal Blue team adds in Homebrew for those terminal applications that don’t tend to get Flatpaks. I admit that I was surprised at first to see Homebrew when I started using Aurora, since I knew it as “the missing package manager for MacOS”, but its inclusion makes perfect sense when you think about it.

MacOS is the First Immutable UNIX

MacOS, you see, is the first immutable UNIX. As much as we in the Linux community don’t like to talk about it, Macs aren’t just POSIX compatible; they run Certified UNIX. And Cupertino has been moving towards this “immutable” thing for a long time, until Catalina finally sealed the system folders away completely on a read-only volume. Updates for MacOS also come as snapshots to replace that system volume; you could certainly call them “atomic”. Since the system volume is locked down, traditional package managers won’t be able to operate. Homebrew was created to solve that problem. It works just as well on a Linux system that has the same lockdown applied to its system folders.

If Homebrew isn’t your cup of tea (and it seems not to be everyone’s, since I think Universal Blue is the only distro set to ship with it), you can go more hard-core into containerization with Docker or Podman. Somewhere in between, you could use something like Distrobox. If you haven’t heard of it, Distrobox is a framework for deploying traditional Linux systems inside containers. For devs, it’s great for testing, even if you aren’t basing it on top of an immutable distribution. If you’ve never worked in the cloud, this may all sound like Rube Goldberg gobbledygook (“Linux in a box on my Linux!?”), but once you adapt to it, it’s not so bad.

The Year of Immutable on the Desktop?

The question is: do you want to adapt to it? Is cloud-based thinking necessary on the desktop? Well, I’d say it depends on who is using the desktop. I would absolutely steer Windows users who are thinking of switching to Linux in the wake of the Windows 10 EOL to a Universal Blue distribution, and probably Aurora, since KDE is more Windows-y than GNOME. Most of those ex-Windows users are people who just want to use a computer, not play with it. If that describes you, then maybe an immutable distribution could be to your liking.

MacOS has shown that very few desktop users will ever notice whether they can access the system folders or not; they are most interested in having a stable, reproducible environment to work in. Thus, immutable Linux may be the way to bring Linux mainstream – certainly Steam thinks so, with SteamOS. For their use case, it’s hard to argue with the benefits: you need a stable base system for the stack of cards that is gaming on Linux, and tech support is much simplified for a locked-down operating system that you cannot install packages on. The rising popularity of Bazzite, Universal Blue’s gaming-centric distribution, also speaks to this.

There are downsides to this kind of system, of course, and it is important to recognize that. Some people really, really hate containerization because Flatpaks and other similar options use more resources, both disk space and RAM. Of course not everything is available as a Flatpak, or on Homebrew if the system uses that. If you want to use Toolbox or Distrobox to get a distro-specific set of packages, well, of course running a whole extra Linux system in a container is going to have overhead.

From an aesthetic perspective, it’s not as elegant as a traditional Linux environment, at least to some eyes, mine included. Those of us who switched to Linux because we wanted absolute control over our computers might not feel too great about the “do not touch” label implicitly scrawled across the system folders, even if we do get something like rpm-ostree to make changes with. Even with a package manager, there are customizations and tweaks you simply cannot make on a read-only system. For those of us who treat Linux as a hobby, that’s probably a no-go.

For the “Lazy Developer” Aurora sells itself to, well, that’s perhaps a different story. Speaking of lazy, I’ve been using Aurora for a few months now, almost in spite of myself. I initially loaded it as the last step on a distro-hopping jaunt to see if I could find a good Windows 10 replacement for my parents. (I think this is it, to be honest.) It’s still on my main laptop simply because it’s so unobtrusively out of the way that I can think of no reason to install anything else.

At some point that may change, and when it does I might just overcorrect and do a Linux From Scratch build or try out NixOS like I’ve been meaning to. Something like that would let me regain the sense of agency I have forfeited to the Universal Blue dev team while running Aurora. (There have been times where I can feel the ghostly hand of an imaginary sysadmin urging me not to mess with my own system.)

After seeing how well containerization can work on desktop, Nix looks extra appealing – it can do most of what this article talks about with the immutable distros, but without trusting configuration of any facet of the system to anyone else. What do you think? Are the touted benefits to stability, reproducibility, and security worth the hassle of an immutable distribution? Is the grass greener in the land of Nix? If you’ve tried one of the immutable Linux distributions out there, we’d love to hear what you think in the comments.

If you’re looking for a long journey into the wonderful world of instrument hacking, [Arty Farty Guitars] is six parts into a seven-part series on hacking an existing guitar into a guitar/hurdy-gurdy hybrid, and it is “a trip,” as the youths once said. The first video is embedded below.

The Hurdy-Gurdy is a wheeled instrument from medieval Europe, which you may have heard of given the existence of the laser-cut nerdy-gurdy, the electronic midi-gurdy we covered here, and the digi-gurdy, which seems to be a hybrid of the two. In case you haven’t seen one before, the general format for a hurdy-gurdy is this: a wheel rubs against the strings, causing them to vibrate via sliding friction and producing a sound not entirely unlike an upset violin. A keyboard on the neck of the instrument both frets the strings and presses them onto the wheel to create sound.

[Arty Farty Guitars] is a guitar guy, so he didn’t like the part about the keyboard. He wanted a Hurdy Gurdy with a guitar fretboard. It turns out that this is a lot easier said than done, even when starting with an existing guitar instead of from scratch, and [Arty Farty Guitars] takes us through all of the challenges, failures, and injuries incurred along the way.

Probably the most interesting piece of the puzzle is the cranking/keying assembly that allows one hand to crank the wheel AND act as a keyboard for pressing strings into the wheel. It’s key to the whole build, as combining those functions in the lower hand leaves the other hand free to use the guitar fretboard half of the instrument. That controller gets its day in video five of the series. It might inspire some to start thinking about chorded computer inputs that combine scrolling and typing.

If you watch up to the sixth video, you learn that the guitar’s fretting action is ultimately incompatible with pressing strings against the wheel at the precise, constant tension needed for good sound. To salvage the project, he had to switch from a bowing action with a TPU-surfaced wheel to a sort of plectrum wheel, creating an instrument similar to the thousand-pick guitar we saw last year.

Even though [Arty Farty Guitars] isn’t sure this hybrid instrument can really be called a Hurdy Gurdy anymore, now that it isn’t using a bowing action, we can’t help but admire the hacking spirit that set him on this journey. We look forward to the promised concert in the upcoming 7th video, once he figures out how to play this thing nicely.

Know of any other hacked-together instruments that possibly should not exist? We’re always listening for tips.

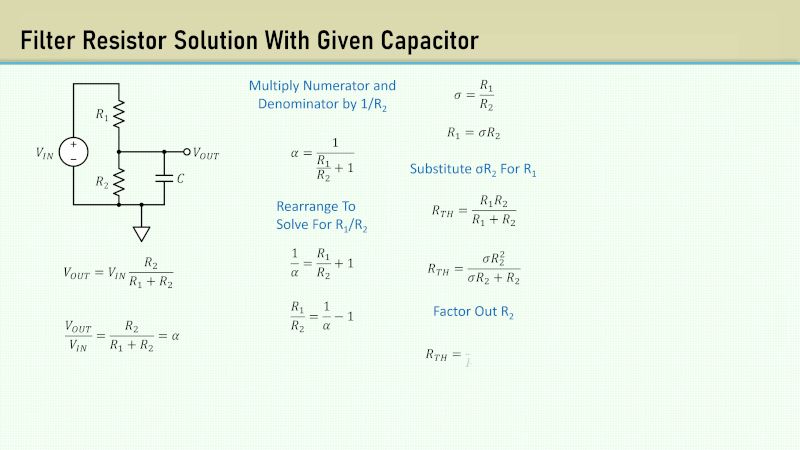

When we do textbook analysis, we tend to ignore real-world concerns for the sake of learning. So, a typical theoretical voltage divider is simply two resistors. But if you examine a low-pass RC filter, you’ll see a single resistor and a capacitor. What if you combine them? That’s what [Old Hack EE] did in a recent video, and you can check it out below.

It helps if you are familiar with Thevenin equivalents and, of course, Ohm’s Law. There’s also a bit of algebra, but nothing too complicated. The example design has a lossy filter at 100 Hz.

Of course, RC filters are easy to understand if you think of them as voltage dividers with a frequency-dependent resistance (the capacitor’s reactance, Xc = 1/(2πfC)), which is basically what the math is saying. The load impedance, in this case, is R2 in parallel with Xc at a given frequency.
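For reference, here is the standard result the Thevenin approach leads to. It is a sketch assuming R1 is the top resistor, R2 the bottom resistor, and C sits directly across R2; the labels R1 and C are ours and may not match the video’s:

$$
V_{th} = V_{in}\,\frac{R_2}{R_1 + R_2}, \qquad R_{th} = R_1 \parallel R_2 = \frac{R_1 R_2}{R_1 + R_2}
$$

$$
\frac{V_{out}}{V_{in}} = \frac{R_2 \parallel \frac{1}{j\omega C}}{R_1 + R_2 \parallel \frac{1}{j\omega C}} = \frac{R_2}{R_1 + R_2}\cdot\frac{1}{1 + j\omega R_{th} C}, \qquad f_c = \frac{1}{2\pi R_{th} C}
$$

In other words, the divider ratio sets the passband attenuation (the “lossy” part), while the capacitor only sees the Thevenin resistance R1 ∥ R2, not R1 alone.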

He mentions that you might find a circuit like this in a power supply. However, the same circuit also shows up, often unintentionally, wherever a divider drives a capacitive load, including parasitic capacitance in cables or circuit boards.
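To put numbers on it, here is a minimal Python sketch of a divider (R1 on top, R2 to ground) with a capacitor across R2. It assumes the usual result that the DC gain is R2/(R1+R2) and the corner frequency is 1/(2π·(R1∥R2)·C), and cross-checks that against a direct complex-impedance calculation. All component values are hypothetical: equal 10 kΩ resistors with C picked for a 100 Hz corner, and a 1 MΩ/1 MΩ divider loaded by an assumed 100 pF of cable capacitance to show how a parasitic pole sneaks in.

```python
import math


def parallel(a, b):
    """Combine two impedances in parallel (works for real or complex values)."""
    return a * b / (a + b)


def divider_rc(r1, r2, c):
    """Return (dc_gain, corner_hz) for a divider R1 over R2 with C across R2."""
    dc_gain = r2 / (r1 + r2)
    r_th = parallel(r1, r2)  # Thevenin resistance the capacitor sees
    corner_hz = 1.0 / (2.0 * math.pi * r_th * c)
    return dc_gain, corner_hz


def gain_at(r1, r2, c, f):
    """|Vout/Vin| at frequency f, computed directly from complex impedances."""
    z_c = 1.0 / (1j * 2.0 * math.pi * f * c)  # capacitor impedance, -j*Xc
    z_load = parallel(r2, z_c)                 # R2 in parallel with the capacitor
    return abs(z_load / (r1 + z_load))


if __name__ == "__main__":
    # Hypothetical design: 10 k / 10 k divider, C chosen for a 100 Hz corner.
    r1 = r2 = 10e3
    c = 1.0 / (2.0 * math.pi * parallel(r1, r2) * 100.0)
    dc, fc = divider_rc(r1, r2, c)
    print(f"DC gain {dc:.2f}, corner {fc:.1f} Hz")         # DC gain 0.50, corner 100.0 Hz
    print(f"gain at corner {gain_at(r1, r2, c, fc):.4f}")  # 0.3536, i.e. 0.5 / sqrt(2)

    # Hypothetical parasitic case: 1 M / 1 M divider driving ~100 pF of cable.
    _, fc_parasitic = divider_rc(1e6, 1e6, 100e-12)
    print(f"parasitic corner {fc_parasitic:.0f} Hz")       # parasitic corner 3183 Hz
```

The brute-force `gain_at` is there as a sanity check: at the computed corner it lands at the DC gain divided by √2, which is what you would expect if the capacitor really does see R1 ∥ R2. The parasitic case shows why high-value dividers feeding long cables can quietly roll off in the low kilohertz.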

We’ve discussed Thevenin equivalence modeling before. If you want really good filters, you are probably going to need op-amps.