I'm just a hobbyist in this area, but I'd like to share my experience using local LLMs for very specific generative tasks.

Predefined formats

With prefixes, we can write the start of the LLM's response ourselves, without the model actually generating it. For example, if we want it to respond with bullet points, we can set the prefix to "- " (a dash followed by a space). If we want JSON, we can use ` ` `json\n{ as the prefix, which makes the model think it has already opened a JSON markdown code block.

If you want the JSON keys to be written in a specific order, you can set the prefix to something like this:

` ` `json
{
"first_key":

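Here's a minimal Python sketch of the key-order trick. It assumes you already get the model's continuation back as a plain string; `key_order_prefix` and `parse_prefixed_json` are hypothetical helper names, and `fake_continuation` is made-up text standing in for real model output. Because the prefix opens a JSON code fence, the fence lines have to be removed before parsing. Python's `json.loads` keeps keys in the order they appear, so you can check that `first_key` really came first.

```python
import json

FENCE = "`" * 3  # a literal triple backtick, built this way so it can't close a markdown block


def key_order_prefix(first_key: str) -> str:
    # Opens a JSON code block and the first key, so the model must continue with its value.
    return f'{FENCE}json\n{{\n"{first_key}":'


def parse_prefixed_json(prefix: str, continuation: str) -> dict:
    # The full message is prefix + continuation; drop the fence lines before parsing.
    text = (prefix + continuation).strip()
    if text.startswith(FENCE):
        text = text.split("\n", 1)[1]  # remove the opening fence line
    if text.endswith(FENCE):
        text = text[: -len(FENCE)]  # remove the closing fence
    return json.loads(text)


prefix = key_order_prefix("first_key")
fake_continuation = ' "a", "second_key": "b"\n}\n' + FENCE  # stand-in for model output
data = parse_prefixed_json(prefix, fake_continuation)
print(list(data))  # ['first_key', 'second_key']
```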
Translation

Let's say you want to translate a given text. Normally, you would prompt a model like this:

Translate this text into German:

` ` `plaintext

[The text here]

` ` `

Respond with only the translation!

Or maybe you would instruct it to respond in JSON, which may work a bit better. But what if it gets the JSON key wrong? What if it adds a little ramble in front of or after the translation? That's where prefixes come in!

You can leave the prompt exactly as it is, optionally instructing the model to respond in JSON:

Respond in this JSON format:

{"translation":"Your translation here"}

Now, you can pretend that the LLM has already written part of its response, which I will call a prefix. The prefix for this specific use case could be this:

{
"translation":"

Now the model thinks that it already wrote these tokens, and it will continue the message from right where it thinks it left off.

The LLM might generate something like this:

Es ist ein wunderbarer Tag!"
}

To get the complete message, simply concatenate the prefix and the generated text, which results in this:

{
"translation":"Es ist ein wunderbarer Tag!"
}
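In Python, combining and parsing could look like the sketch below (`combine_and_parse` is a hypothetical helper). I use `json.JSONDecoder().raw_decode` instead of `json.loads` because it stops after the first complete JSON value, so a bit of ramble after the closing brace doesn't break parsing.

```python
import json


def combine_and_parse(prefix: str, continuation: str) -> dict:
    # The full message is just the prefix followed by what the model generated.
    message = prefix + continuation
    # raw_decode parses the first complete JSON value and ignores anything after it.
    obj, _end = json.JSONDecoder().raw_decode(message)
    return obj


prefix = '{\n"translation":"'
continuation = 'Es ist ein wunderbarer Tag!"\n}\nLet me know if you need anything else!'
print(combine_and_parse(prefix, continuation)["translation"])  # Es ist ein wunderbarer Tag!
```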

To minimize inference costs, you can add "} and "\n} as stop sequences, so generation stops right after the model finishes the JSON entry.
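One catch with stop sequences: the continuation now ends before the closing `"}`, so plain concatenation no longer gives valid JSON. A minimal sketch, assuming you don't know whether your server includes the matched stop sequence in its output (handling both cases is cheap): strip it if present, then decode the text as the content of a JSON string so escapes like `\"` come out right. `extract_translation` is a hypothetical helper; the `stop` list is the one from above, in the form Ollama's `options` field accepts.

```python
import json

# Stop sequences from above, as they would go into a request's "options".
OPTIONS = {"stop": ['"}', '"\n}']}


def extract_translation(continuation: str) -> str:
    text = continuation
    # Some servers drop the matched stop sequence, others keep it; strip it if present.
    for stop in OPTIONS["stop"]:
        if text.endswith(stop):
            text = text[: -len(stop)]
            break
    # The model wrote the value as JSON string content, so decode escapes like \" and \n.
    # strict=False tolerates raw newlines inside the value.
    return json.loads('"' + text + '"', strict=False)


print(extract_translation("Es ist ein wunderbarer Tag!"))
print(extract_translation('Er sagte \\"Hallo\\""}'))
```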

Code completion and generation

What if you have an LLM that wasn't trained with code completion tokens? We can get a similar effect using an instruction and a prefix!

The prompt might be something like this:

` ` `python

[the code here]

` ` `

Look at the given code and continue it in a sensible and reasonable way.

For example, if I started writing an if statement, determine if an else statement makes sense, and add that.

The prefix would then be the start of a code block followed by the given code, like this:

` ` `python
[the code here]

This way, the LLM thinks it has already rewritten everything you wrote, and it will now try to complete it. We can then add \n` ` ` as a stop sequence so that it generates only code and nothing else.
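A sketch of building this completion request in Python, assuming a chat API that continues a trailing assistant message (like the Ollama setup described below). `completion_messages` and `completed_code` are hypothetical names. Since the prefix already contains your code, the finished file is just your code plus whatever the model appended.

```python
FENCE = "`" * 3  # a literal triple backtick

INSTRUCTION = (
    "Look at the given code and continue it in a sensible and reasonable way. "
    "For example, if I started writing an if statement, "
    "determine if an else statement makes sense, and add that."
)

# Stop as soon as the model closes the code block, so only code comes back.
STOP = ["\n" + FENCE]


def completion_messages(code: str) -> list[dict]:
    # User turn: the code in a fenced block, followed by the instruction.
    prompt = f"{FENCE}python\n{code}\n{FENCE}\n\n{INSTRUCTION}"
    # Assistant prefix: an open fence plus the same code, so the model "continues" it.
    prefix = f"{FENCE}python\n{code}"
    return [
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": prefix},
    ]


def completed_code(code: str, continuation: str) -> str:
    # The prefix already holds the original code, so the result is code + continuation.
    return code + continuation


messages = completion_messages("x = 1\nif x > 0:")
```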

This approach to code completion may even be preferable, since we can steer the completion through the prompt, for example by telling it to follow certain code conventions.

Simply giving the model a prefix of ` ` `python\n makes it start generating code immediately, without any preamble. Again, adding the stop sequence \n` ` ` makes sure that no postamble is generated.
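If your backend doesn't support stop sequences, or you forgot to set one, you can get the same result after the fact. A minimal sketch (`extract_generated_code` is a hypothetical helper): with the python code-fence prefix, the continuation starts directly with code, so everything before the first closing fence is the code and everything after it is postamble.

```python
FENCE = "`" * 3  # a literal triple backtick


def extract_generated_code(continuation: str) -> str:
    # The prefix already opened the code block, so the continuation starts with code.
    # Cut at the first closing fence to drop any postamble the model adds afterwards.
    end = continuation.find("\n" + FENCE)
    return continuation if end == -1 else continuation[:end]


reply = "def add(a, b):\n    return a + b\n" + FENCE + "\nThis function adds two numbers."
print(extract_generated_code(reply))
```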

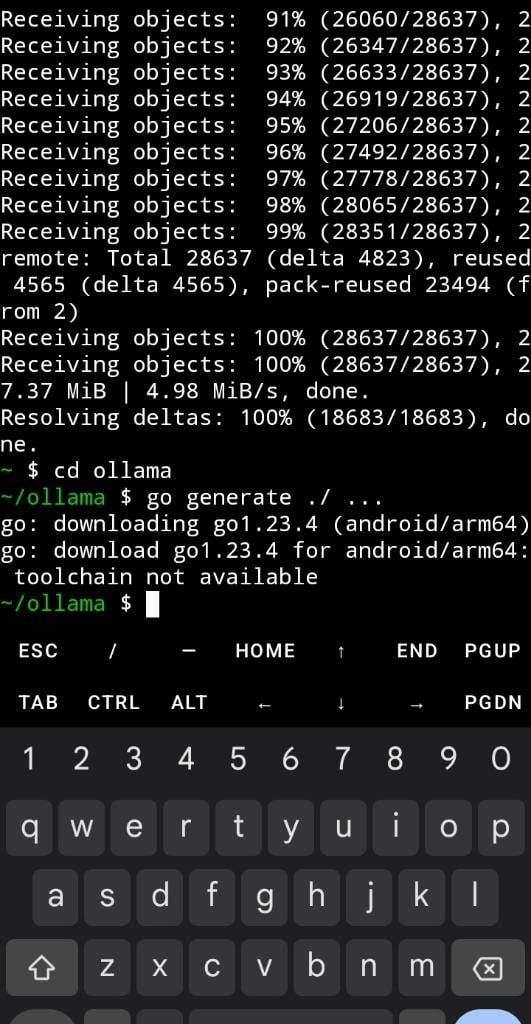

Using this in Ollama

Using this "technique" in Ollama is very simple, but you need to use the /api/chat endpoint rather than /api/generate (unless you write out the full chat template yourself in raw mode). Simply append the start of the assistant's message to the list of messages passed to the model, like this:

"messages":[
{"role":"user", "content":"Why is the sky blue?"},
{"role":"assistant", "content":"The sky is blue because of"}
]

It's that simple! Now the model will continue the assistant message, starting from the prefix you gave it as "content".
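Here's a minimal sketch of the whole round trip using only Python's standard library. Assumptions: Ollama is listening on its default `http://localhost:11434`, the model name is a placeholder, and, as far as I can tell, the reply's `message.content` holds only the newly generated text, so the prefix is glued back on in front. The `post` parameter is injectable so the request logic can be tried without a running server.

```python
import json
import urllib.request

# Ollama's default local address; change it if your server runs elsewhere.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(model: str, prompt: str, prefix: str, stop=None) -> dict:
    body = {
        "model": model,
        "messages": [
            {"role": "user", "content": prompt},
            # A trailing assistant message acts as the prefix the model continues.
            {"role": "assistant", "content": prefix},
        ],
        "stream": False,  # one JSON response instead of a stream of chunks
    }
    if stop:
        body["options"] = {"stop": stop}
    return body


def post_json(url: str, body: dict) -> dict:
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def complete_with_prefix(model, prompt, prefix, stop=None, post=post_json) -> str:
    reply = post(OLLAMA_CHAT_URL, build_chat_request(model, prompt, prefix, stop))
    # Assumes the reply contains only the continuation, so the prefix goes back in front.
    return prefix + reply["message"]["content"]


# Needs a running Ollama server; "llama3.2" is a placeholder model name.
# print(complete_with_prefix("llama3.2", "Why is the sky blue?", "The sky is blue because of"))
```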

Be aware!

There is one pitfall I have noticed with this: you have to be aware of how the prefix gets tokenized. Because we set the start of the message manually, it might not be tokenized the way the model would have tokenized its own output. This can confuse the LLM and make it generate one space too many or too few. This is mostly not an issue though, as
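One mitigation worth trying (an assumption on my part, not something I've measured): many BPE-style tokenizers attach a space to the word that follows it, so " of" is usually a single token, and a prefix that ends in a bare space forces an unusual token boundary. The hypothetical helpers below strip trailing spaces from the prefix and collapse a doubled space where prefix and continuation meet. They can't fix a missing space, only a doubled one.

```python
def prepare_prefix(prefix: str) -> str:
    # Leave the space to the model: with many BPE-style tokenizers " of" is one token,
    # so ending the prefix on a bare space creates an unusual token boundary.
    return prefix.rstrip(" ")


def join_prefix(prefix: str, continuation: str) -> str:
    # If both sides brought a space to the seam, keep only one of them.
    if prefix.endswith(" ") and continuation.startswith(" "):
        return prefix + continuation[1:]
    return prefix + continuation


prefix = prepare_prefix("The sky is blue because of ")
print(join_prefix(prefix, " Rayleigh scattering."))
```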

What do you think? Have you used prefixes in your generations before?