I have no idea if anyone on Lemmy is into Avatar lore/fanfiction, but in the spirit of posting content here instead of Reddit... here goes.

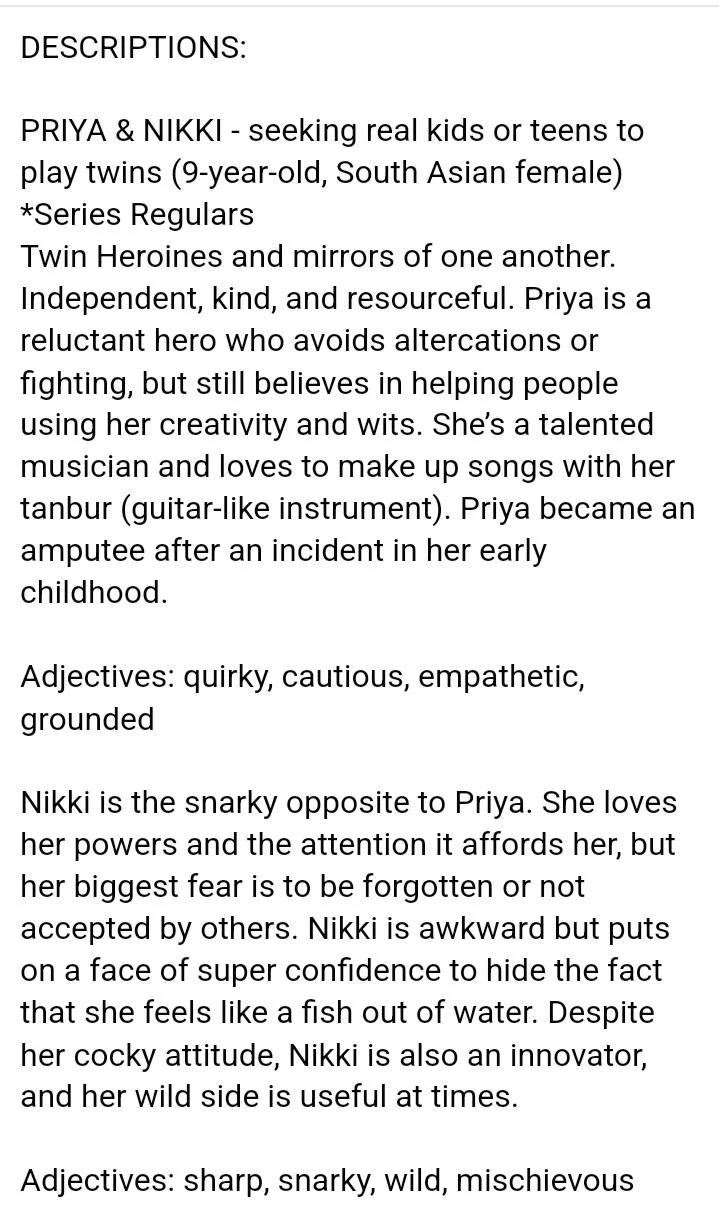

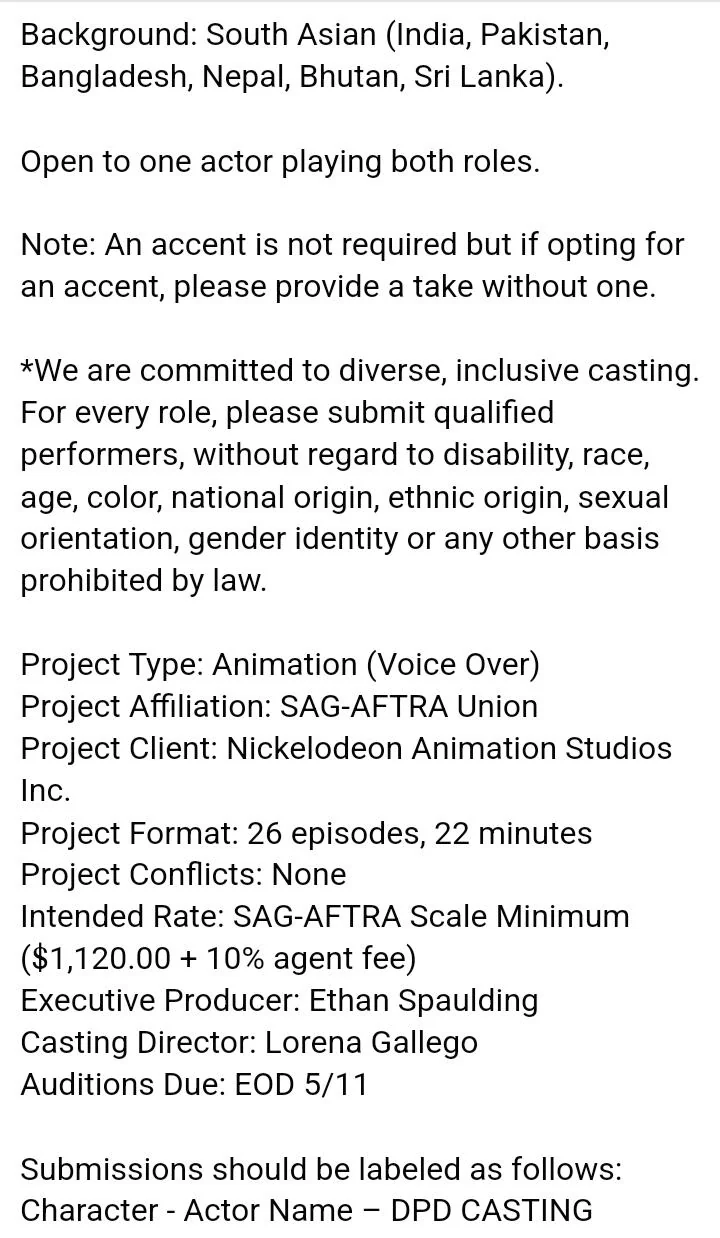

A new Avatar series featuring 'twin' Avatars has been leaked, in case you missed it:

https://knightedgemedia.com/2024/12/avatar-seven-havens-twin-earth-avatar-series-will-initially-be-26-episodes-long/

https://lemmy.world/post/23427458

In a nutshell, its allegedly set in a cataclysmic world overrun by spirit vines, and two twins are the 'Avatars' with diametric personalities. Not much is known beyond that, but I've been brainstorming some post-LoK ideas forever.

And now I kinda feel like writing them out. Here's my thought dump for a story:

In the (largely undepicted) three years of canon Korra Alone, Korra (traveling anonymously) makes a stop on Kyoshi Island hoping to reconnect with her spirit. Instead, she meets a humble blacksmith with a bird spirit on his shoulder, and connects with him. They both wrestle with the demons haunting them, and they discover secrets on the island from Kyoshi's era.

Korra dies in 190 AG (at 37), already weakened from her metal poisoning, saving the world froma a cataclysm that leaves much of the world overgrown.

Intially, the story jumps between this period in Korra Alone (174AG) and 206 AG, where Asami Sato is struggles to steer Future Industries in a world dominated by megacorps in the safe 'havens' dotted through the world. While Kyoshi Island has barely changed at all, the 'future' thread has a more cyberpunk feel. Chi based cybernetics are commonplace, but the more augmented someone is, the more their bending is compromised, and bender vs nonbender tensions flare up once more. The world outside the safe havens is a dangerous wasteland. Tech derived from studying spirits has let to the proliferation of holograms, BCIs, and even primitive assistants and virtual environments, and advances in power storage/generation already seen in LoK mean everything is largely electric. Yet the world is still "analog," with tube radios and TVs, no digital electronics, and 'dumb' virtual assistants that are error-prone and incapable of math, giving it a retro feel. There are no guns, of course, but personal weapons like arc casters, flamethrowers, cryo blasters and such all mimic bending.

The Sei'naka clan has risen to power in the Fire Nation, taking advantage of the aftermath of the 100 Years War, the Red Lotus Insurrection, Future Industry's relative benevolence, and even the recently calamity. Now a ruthless corporation bigger than Future Industires, they dominate business and politics wherever they expand.

The White Lotus's search for the Avatar has failed. Asami rather infamously misidentified the Avatar... until one day, she find them.

Once this background is established, the story jumps back to our inseparable twin Avatars, Pavi and Nisha, born deep in the Foggy Swamp. Thanks to their predecessor, they live a harmonious, largely isolated life as members of the Foggy Swamp Tribe on the back of a water Lion Turtle. To her utter shock, they manage to manifest Korra at nine, withh Past Life Korra appearing as a nine-year-old. Dumbfounded, not even sure who the 'real' Avatar is, the girls assume she is just another spirit in the swamp. So Korra makes the decision to go along with this, and let them have a childhood she never had under the White Lotus as she figures out just what's going on with the twin Avatars.

Ultimately, the real world comes crashing into the new Avatars' isolated life, and they react poorly to Korra telling them the truth at 16. Through some more disasters and tragedies, they end up on the streets of Republic City, separated for a time, before meeting friends. The rest of the story revolves around corporate and personal greed (very much like the real wo9rld), conflict (and synergy) between the environment/spirits and technology, rivalries, family, friends across lifetimes, the nature of consciousness, reincarnation and the soul, and a conspiracy going all the way back to Kuruk threading through everything.

Some character profiles I'm working on:

-

Priya: Independent, kind, and resourceful. Priya is a reluctant hero who avoids altercations or fighting, but still believes in helping people using her creativity and wits. She's a talented musician and loves to make up songs with her taanbur (guitar-like instrument). Priya lost most of her leg in an accident from a fallen tree that killed her parents in the Foggy Swamp, but bends roots and muddy water as if they were her own limb. Its eventually revealed that she carries Raava. Once she finds out, Priya in particular is reluctant to accept her role as the Avatar, until a tragedy forces her hand.

-

Nikki is more snarky. She loves her powers and the attention it affords her, but her biggest fear is to be forgotten or not accepted by others. Nikki is awkward but puts on a face of superior confidence to hide the fact that she feels like a fish out of water. Despite her cocky attitude, Nikki is also an innovator, and her wild side is useful at times. Like her sister, she's highly attuned to the swamp, able to connect to and even manifest the collective memories of lost loved ones by touching spirit vines in the swamp. Both are apparently waterbenders with a proclivity for mud. Its eventually revealed that she carries Vaatu inside her. Nikki is missing part of her arm, but bends as a replacement, much line Ming-Hua.

-

Korra: Largely as she is in LoK. Hot blooded, quick to fight, passionate, empathetic, and not very spiritual. In a reversal of roles, Priya and Nikki keep her manifested constantly, and Korra becomes their best friend, learing about thier life in the Foggy Swamp. Later in the story, Korra's almost like a Johnny Silverhand to the new Avatars: manifested at will, a voice constantly in thier heads offering commentary, occasionally butting heads with them in a complex but close and encouraging relationship.

-

Asami Sato: Largely as she was in LoK, driven, collected, strong, smart, loyal. Now she's fifty, with a cybernetic leg from an accident. Asami still altruistic, and retained control of Future Industries through the years, but struggles with pushback from a corporate world driven by expansion and greed, and ultimately has to grapple with some of what her own company has done under her nose.

-

Mako: Largely as he was, brooding, cool, a noir-like detective. Recently retired as police chief, and has been secretly piecing together the conspiracy running through the plot.

-

Ren: The blacksmith Korra meets on Kyoshi Island. Softspoken, painfully shy, air headed and ADD, stocky and green-eyed, Ren nonetheless has a dry wit. He's self depreciating to a fault, but has a soft heart. To Korra's utter shock, Ren is a metalbender and a lavabender, using the combination to effortlessly sculpt armor and weapons, and tinker with delicate electronics. He's terrified of lightning, with a massive scar covering his back that flares up in storms or when anxious. Almost as broken as Korra is at the start, Ren reveals that his father's ancestors were lavabending miners and blacksmiths in the Hundred Years War. His past is initially shrouded in mystery, but its slowly revealed that his mother is the scientist who originally conceived of spirit vine technology, and that Varrick only replicated some of her work. Ren's mom has an 'Oppenheimer moment' and defects from Kuvira's proto Earth Empire. Ren ends up as the only survivor, deeply scarred from a spirit vine "detonation" similar to the LoK finale, that fused his soul to his body, and he's hiding from warlords hunting him for what he knows. Through the story, he grows particularly close to Asami and Korra, and grapples with some of the technology he pioneers.

-

Kaida: CTO of Future Industries, Kaida is the biological daughter of Korra and Ren, who both died when she was 11. Utterly tenacious, hot-blooded, fearless, and a fierce fighter like her mom, Kaida barges into the story literally melting the metal floor in front of reporters harassing her 'mom,' Asami. Fiercely intelligent, impulsive, but with some of her dad's air-headedness, introversion, and love of tinkering with technology, Kaida is almost constantly clad in meteor-metal alloy plate armor she wears as a second skin. She favors a jian, like Korra learned to use on Kyoshi Island. Kaida a talented engineer, but struggles with the tremendous legacy she's been thrust into.

-

Yuri Sei'naka: One of many vying for supremacy in the Sei'naka family, Yuri resembles Azula; A charismatic leader with a ruthless streak, an obsession with perfection, and a fantastically talented firebender, she has Azula's the same sharp yellow eyes and features. Like her twin brother, Yoru, Yuri chose the 'hard' path of bending over advanced cybernetics the wealthy have access too. Nevertheless, she has a good moral compass, and is unconditionally loyal to her brother.. The siblings have an intense rivalry with Kaida, just as thier company rivals Future Industries.

-

Yoru Sei'naka: A firebending and lightning bending prodigy and a cunning strategist, Yoru is mute, having lost his ability to speak in a sparring accident as a kid. Yoru and Yuri are practically inseperable, with Yuri serving as his voice. Tasked with tracking down the unkown Avatar by the matriarch of the clan, and always beholden to his intense sense of honor, Yoru suffers through a tragic 'Zuko' arc through the story.

spoiler

-

Father Glowworm: The ancient spirit survived the death of Yun, and is an ever-present invisible hand through the story, albeit with a newfound distate for humans. The swamp, taboo spirit vine technology, and just how he tunnels between worlds will all tie into crises Priya and Nikki must navigate.

-

I'm still working on other antagonists, but there will be a warlord who tries to capture Ren on Kyoshi Island, a ruthless corporate matriarch of the Sei'naka dynasty (Natsu?), a charismatic rebel like something between Amon and Zaheer, and more. I'm also thinking on a blind airbending thief who rejected his rich family, and a loud, warm Sun Warrior whos people have resettled in Republic City, and an introverted netrunner-like hacker as companions for the Avatar.

Other thoughts:

-

I don't like some 'leaked' aspects of the upcoming show, like the twin Avatars being nine and the White Lotus being so involved and 'problematic.' I'd much rather have the twin Avatars be lost, ignorant of thier own nature in the Foggy Swamp because they appear to be waterbenders with a proclivity for mud.

-

On that note, stealing the idea from here, maybe Priya can only bend air and water, while Nikki can only bend earth and fire, reflecting the split of their spirits and personalities.

-

Remnants of the Northern and Southern Water Tribes have drifted to political extremes.

-

The 'wasteland' is populated by spirits, and human opportunists looking to brave it.

-

The Avatars' monkey cat companion is a spirit they befriended in the forest.

-

Spirit Vine technology is taboo and effectively 'lost' after the calamity.

-

The Avatars' Tribe lives atop a Lion Turtle the swamp hid for millenia.

-

The 'nature' of the Foggy Swamp is expanded. For instance, in one chapter, Priya and Nikki manifest and talk to respresentations of their parents, built from the collectively memory of everyone who ever knew them, all connected though vines. It brings up existential questions in Korra's head, and parallels with some of the spirit-based technology the rest of the world has developed.

...So, those are my scattered thoughts so far.

Does that sound like a sane, plausible base for a post-LoK story? Do you think any of it would fit into canon? I particularly like the idea of a 'metal lavabending' canon companion, and maybe some more futuristic elements in the havens that do exist.

And once again, Trump controlled the conversation, and all the actual evidence is out there window for most people.

It would be awesome if there was an unspoken “don’t feed the troll” understanding among journalists, influencers, mods, forum commenters, everyone. When Trump says something outrageously stupid, just… briefly acknowledge it, and then ignore it and proceed as usual. Like he doesn’t exist.

That’s a narcissist’s worst fear.

Sure, he'd bounce around in the conservative echo chamber, but at least he wouldn’t pull more people in.