Can humans think?

BB84

> [...] reported a gross profit margin of 23.2% for the quarter, with an operating loss of 500 million yuan (70 million USD). Based on these figures, Xiaomi’s electric vehicle business posted an average loss of 6,500 yuan (903 USD) per vehicle in Q1 2025.

How do you define dumping versus simply being unprofitable?
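The per-vehicle figure in the quote comes from simple division. This sketch assumes, since the quote doesn't spell it out, that "average loss per vehicle" means the quarter's operating loss divided by the number of vehicles. It backs out the implied vehicle count and the exchange rates implied by the two USD conversions. The constant and function names are illustrative only.

```python
"""Back-of-the-envelope check of the quoted Xiaomi EV figures (Q1 2025)."""

# Figures taken from the quote above.
OPERATING_LOSS_YUAN = 500_000_000
OPERATING_LOSS_USD = 70_000_000
LOSS_PER_VEHICLE_YUAN = 6_500
LOSS_PER_VEHICLE_USD = 903


def implied_vehicle_count(total_loss: float, loss_per_vehicle: float) -> float:
    """Assumes average loss = total loss / vehicles, so vehicles = total loss / average loss."""
    return total_loss / loss_per_vehicle


def implied_exchange_rate(amount_yuan: float, amount_usd: float) -> float:
    """Yuan per USD implied by a quoted pair of amounts."""
    return amount_yuan / amount_usd


vehicles = implied_vehicle_count(OPERATING_LOSS_YUAN, LOSS_PER_VEHICLE_YUAN)
print(f"Implied vehicles in the quarter: {vehicles:,.0f}")  # 76,923

# The two conversions imply slightly different rates (about 7.14 vs 7.20),
# which is what you'd expect if the USD figures were rounded.
print(f"{implied_exchange_rate(OPERATING_LOSS_YUAN, OPERATING_LOSS_USD):.2f}")
print(f"{implied_exchange_rate(LOSS_PER_VEHICLE_YUAN, LOSS_PER_VEHICLE_USD):.2f}")
```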

Have you tried 36875131794129999827197811565225474825492979968971970996283137471637224634055579, 154476802108746166441951315019919837485664325669565431700026634898253202035277999, 4373612677928697257861252602371390152816537558161613618621437993378423467772036?

There is a solution. It's just roughly 80 digits long. I added it to the OP in the spoiler.
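The equation isn't stated in this thread, so this assumes it's the well-known puzzle of finding positive integers with a/(b+c) + b/(a+c) + c/(a+b) = 4. Under that assumption, Python's `fractions.Fraction` can check the quoted numbers exactly, with no floating-point rounding. The helper name `fruit_sum` is made up for illustration.

```python
from fractions import Fraction

# The three numbers quoted above (order doesn't matter: the sum is symmetric).
x = 36875131794129999827197811565225474825492979968971970996283137471637224634055579
y = 154476802108746166441951315019919837485664325669565431700026634898253202035277999
z = 4373612677928697257861252602371390152816537558161613618621437993378423467772036


def fruit_sum(a: int, b: int, c: int) -> Fraction:
    """Exact value of a/(b+c) + b/(a+c) + c/(a+b) using rational arithmetic."""
    return Fraction(a, b + c) + Fraction(b, a + c) + Fraction(c, a + b)


print(fruit_sum(x, y, z))                # 4
print([len(str(n)) for n in (x, y, z)])  # [80, 81, 79]
```

Exact rationals matter here: with floats, numbers this large would lose most of their digits and the check would be meaningless.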

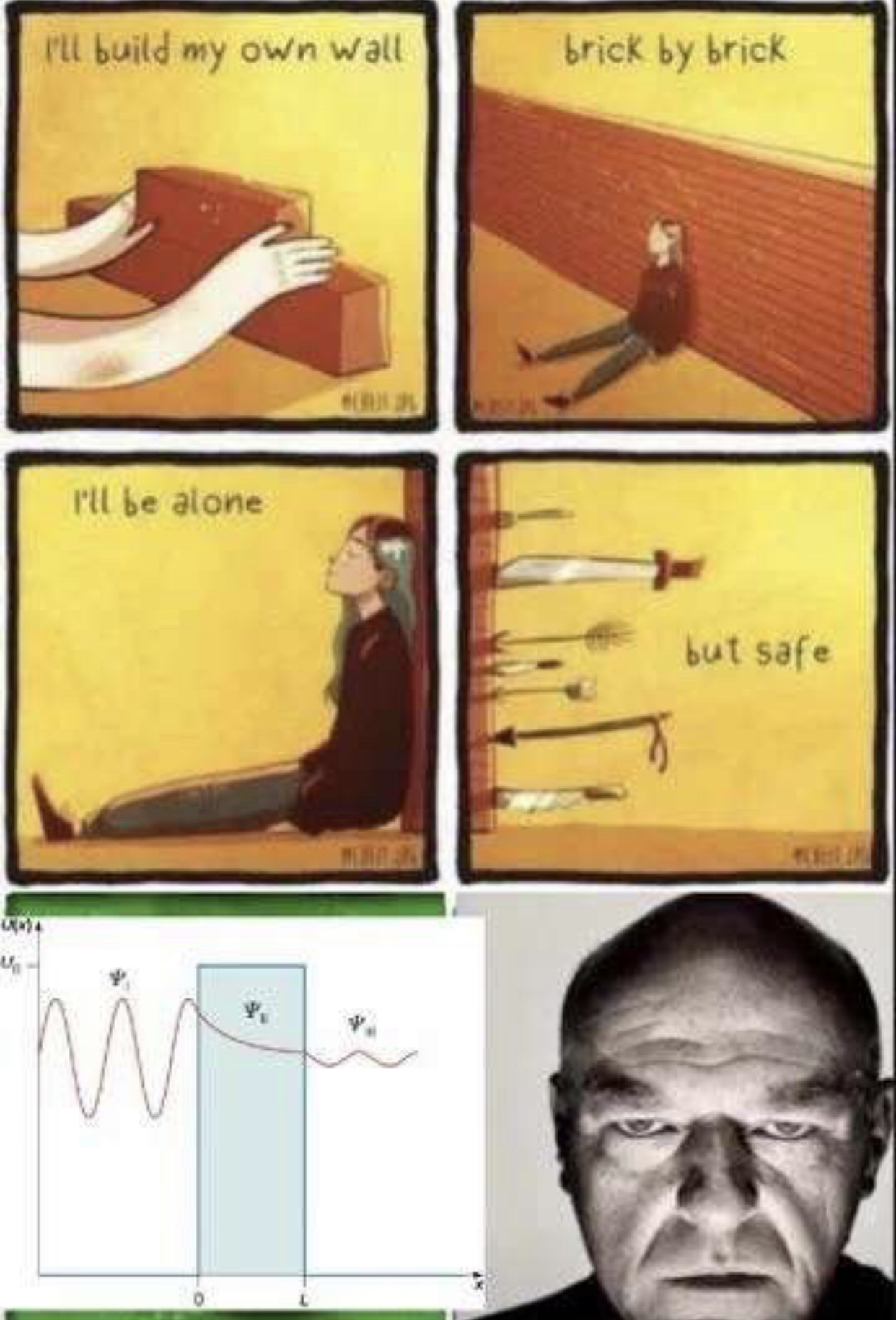

It's not just the narrow wavelength. Even with perfectly monochromatic green light, your green (M) cones would respond strongly, but your red (L) and blue (S) cones would still respond too, the red ones substantially, because the cone sensitivity curves overlap. These researchers activated only the green cones (by literally aiming light at those individual receptors), so for the first time ever your brain reads a pure green signal.

It's a math trick. Not a physical theory.

Scientists have come up with countless ways to fix the Hubble tension. But so far, each of these modified theories is

- contrived

- untestable with present-day observational instruments

- currently being tested

- already tested and deemed incompatible with reality.

Any linear relationship in this calculation would be an approximation. Such approximations are useful for intuition and quick explanations, but for actual work either the full nonlinear relationship is used or, if the linear approximation is used, its error must be bounded by an acceptably small parameter.
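The actual calculation isn't shown here, so as a hypothetical stand-in take the linearization ln(1 + x) ≈ x. For x ≥ 0, its error x − ln(1 + x) is the integral of t/(1 + t) from 0 to x, and since t/(1 + t) ≤ t the error is at most x²/2. The sketch below uses the linear form only when that guaranteed bound is within a tolerance, and otherwise falls back to the full nonlinear function. All function names are illustrative.

```python
import math


def linear_log1p(x: float) -> float:
    """First-order (linear) approximation of ln(1 + x) around x = 0."""
    return x


def linear_error_bound(x: float) -> float:
    """Upper bound on x - ln(1 + x) for x >= 0.

    x - ln(1 + x) is the integral of t / (1 + t) from 0 to x, and
    t / (1 + t) <= t, so the error is at most x**2 / 2.
    """
    return x * x / 2


def log1p_within(x: float, tol: float) -> float:
    """Return ln(1 + x), using the linear form only if its guaranteed error is <= tol."""
    if x < 0:
        raise ValueError("the error bound above is only derived for x >= 0")
    if linear_error_bound(x) <= tol:
        return linear_log1p(x)
    return math.log1p(x)  # fall back to the full nonlinear relationship


for x in (0.001, 0.1, 0.5):
    actual_error = linear_log1p(x) - math.log1p(x)
    print(x, actual_error, linear_error_bound(x), log1p_within(x, tol=1e-4))
```

The same pattern applies to any real linearization: pair it with its own remainder bound and only accept it where that bound is small enough.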

Lambda-CDM is built on general relativity. Some people may explain it with nonrelativistic pictures to help you build intuition, but the actual theory and calculations are fully relativistic, so you don't have to worry about that.
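As a reference point, in a spatially flat Lambda-CDM model with radiation neglected (a simplifying assumption), the expansion rate comes from the Friedmann equation. That equation is derived directly from Einstein's field equations for a homogeneous, isotropic (FLRW) universe:

$$
H(z)^2 = H_0^2 \left[ \Omega_m (1+z)^3 + \Omega_\Lambda \right], \qquad \Omega_m + \Omega_\Lambda = 1
$$

Here $H_0$ is the present-day Hubble constant, $z$ is redshift, and $\Omega_m$ and $\Omega_\Lambda$ are the present-day matter and dark-energy density parameters.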

> since we have 2 parameters to evaluate

I don't follow. What two parameters?

Yeah, based on the graphical description in the linked post, there probably are other solutions. But I don't think it would work for all integers.