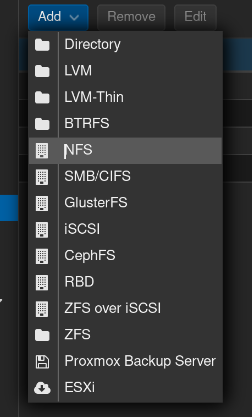

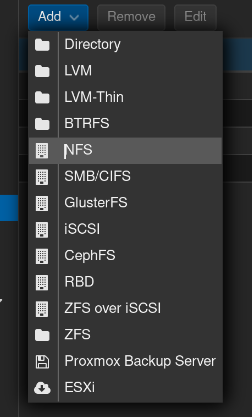

Proxmox does support NFS

But let's say that I would like to decommission my TrueNAS and thus having the storage exclusively on the 3-node server, how would I interlay Proxmox+Storage?

(Much appreciated btw)

Proxmox does support NFS

But let's say that I would like to decommission my TrueNAS and thus having the storage exclusively on the 3-node server, how would I interlay Proxmox+Storage?

(Much appreciated btw)

You are 100% right, I meant for the homelab as a whole. I do it for self-hosting purposes, but the journey is a hobby of mine.

So exploring more experimental technologies would be a plus for me.

Currently, most of the data in on a bare-metal TrueNAS.

Since the nodes will come with each 32TB of storage, this would be plenty for the foreseeable future (currently only using 20TB across everything).

The data should be available to Proxmox VMs (for their disk images) and selfhosted apps (mainly Nextcloud and Arr apps).

A bonus would be to have a quick/easy way to "mount" some volume to a Linux Desktop to do some file management.

I think I am on the same page.

I will provably keep Plex/Stash out of S3, but Nextckoud could be worth it? (1TB with lots of documents and medias).

How would you go for Plex/Stash storage?

Keeping it as a LVM in Proxmox?

Darn, Garage is the only one that I successfully deployed a test cluster.

I will dive more carefully into Ceph, the documentation is a bit heavy, but if the effort is worth it..

Thanks.

Is taking care of the environment back in vogue now that it might affect businesses' productivity?

~~Lien Mort.~~

~~Avons-nous une source alternative?~~

Ça fonctionne, désolé.

Thanks "big bad China" for forcing the rest of the world to move forward with more open AI (let's see if it materialises)

This infinite list of letters could be "any random combination of letters EXCEPT when that makes the word "banana". A subset of an infinite set can still be infinite.

Infinite != all possibilities

So far, they are training models extremely efficiently while having US gatekeeping their GPUs and doing everything they can to slow their progress. Any innovation in having efficient models to operate and train is great for accessibility of the technology and to reduce the environment impacts of this (so far) very wasteful tech.

There was a Reddit post of a WWII picture of a blown up US artillery piece where the round detonated in the chamber and killed the crew.

I replied something like "Looks like they got a taste of their own medicine".

While it still to this day gives me a chuckle, the reception was rather cold.

Try hosting locally DeepSync R1, for me the results are similar to ChatGPT without needing to send any into on the internet.

LM Studio is a good start.