A fun conversation starter is always "So do you have an internal monologue?"

No thanks. I get that some people agreed to this, but I'm going to continue to use .lan, like so many others. If they ever register .lan for public use, there will be a lot of people pissed off.

IMO, the only reason not to assign a top-level domain in the RFC is so that some company can make money on it. The authors were from Cisco and Nominum, a DNS company purchased by Akamai, but that doesn't appear to be the reason why. .home and .homenet were proposed, but this is from the mailing list:

- we cannot be sure that using .home is consistent with the existing (ab)use

- ICANN is in receipt of about a dozen applications for ".home", and some of those applicants no doubt have deeper pockets than the IETF does should they decide to litigate

https://mailarchive.ietf.org/arch/msg/homenet/PWl6CANKKAeeMs1kgBP5YPtiCWg/

So, corporate fear.

I just use openssl's built-in management. I have scripts that set it up and generate a .lan domain, and instructions for adding it to clients. I could make a repo and writeup if you would like?
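
For anyone wondering what a setup like this can look like, here is a minimal sketch of the general idea. It is not the scripts mentioned above. It assumes plain `openssl genrsa`, `openssl req` and `openssl x509 -req`, and the file names (`ca.key`, `ca.crt`), the CA name and the host `nas.lan` are all made up for illustration. It only builds and prints the commands, so nothing is generated until you run them yourself.

```python
import shlex


def san_extfile(host):
    """Contents of a tiny extensions file that puts `host` in subjectAltName."""
    return f"subjectAltName=DNS:{host}\n"


def ca_commands(ca_name="Homelab Root CA", days=3650):
    """openssl invocations that create a root key and a self-signed root cert."""
    return [
        ["openssl", "genrsa", "-out", "ca.key", "4096"],
        ["openssl", "req", "-x509", "-new", "-key", "ca.key", "-sha256",
         "-days", str(days), "-subj", f"/CN={ca_name}", "-out", "ca.crt"],
    ]


def leaf_commands(host, days=397):
    """openssl invocations that issue a cert for `host`, signed by ca.crt.

    Expects san_extfile(host) to have been written to '<host>.ext' first.
    """
    return [
        ["openssl", "genrsa", "-out", f"{host}.key", "2048"],
        ["openssl", "req", "-new", "-key", f"{host}.key",
         "-subj", f"/CN={host}", "-out", f"{host}.csr"],
        ["openssl", "x509", "-req", "-in", f"{host}.csr",
         "-CA", "ca.crt", "-CAkey", "ca.key", "-CAcreateserial",
         "-out", f"{host}.crt", "-days", str(days), "-sha256",
         "-extfile", f"{host}.ext"],
    ]


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    for cmd in ca_commands() + leaf_commands("nas.lan"):
        print(shlex.join(cmd))
```

After running the printed commands, `ca.crt` is the file that would need to be added to each client's trust store, and `nas.lan.crt` plus `nas.lan.key` go on the service. The lifetimes used here (3650 and 397 days) are arbitrary defaults, not a recommendation.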

As the other commenter pointed out, .lan is not officially sanctioned for local use, but it is not used publicly and is a common choice. However, you could use whatever you want.

I use a domain, but for homelab I eventually switched to my own internal CA.

Instead of having to do service.domain.tld it's nice to do service.lan.

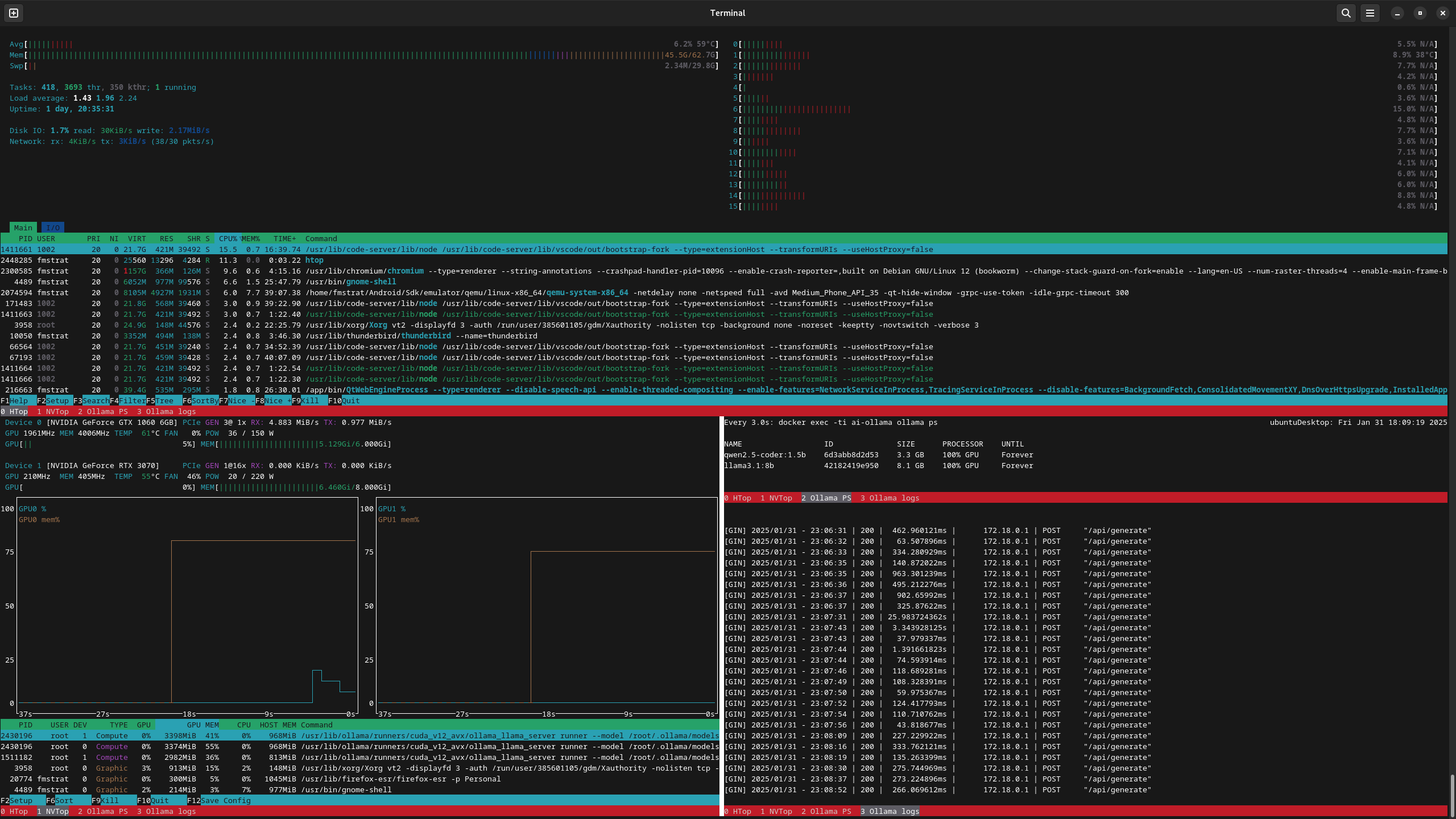

Yea no clue what this is. No context, can't read what was attached because it's an image. Waste of a post.

Agreed, and unfortunately articles like this are food for CEOs to do more under the guise of AI. "See, it works!"

Wouldn't it be more efficient to put this on Codeberg and accept PRs?

I'm still running Qwen32b-coder on a Mac mini. Works great, a little slow, but fine.

Yea I just hit 2k hours. I don't play a ton but have been playing forever and am now hearing Rematch may be a good secondary.

Were you a Rocket League player by chance?

I just validated that the latest version of the LDAP privilege escalation issue is not an issue anymore. The curl script is in the ticket.

This was the one where a standard user could get plugin credentials, such as the LDAP bind user, and change the LDAP endpoint. I.e., bad.

I chose this one because after going through all of them, it was the only one that allowed access to something that wasn't just data in Jellyfin.

So for me, security is less of an issue knowing that, as only family use the service, and the remaining issues all require a logged-in user (hit admin endpoint with user token).

Plus, I tried a few of those and they were also fixed, just not documented yet. I didn't add to those tickets because I was not as formal with my testing.

Everyone is waiting for this. There needs to be a party.