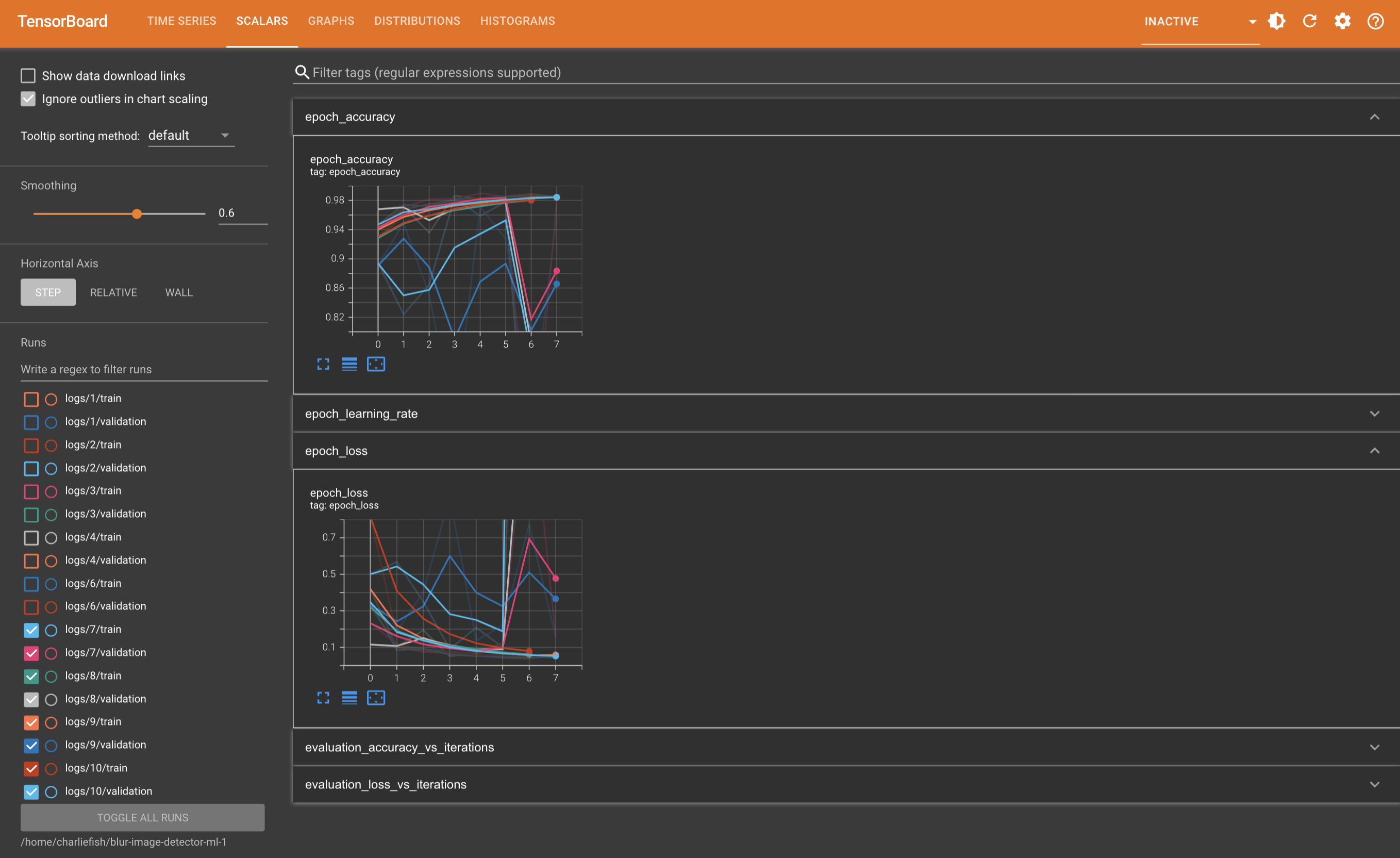

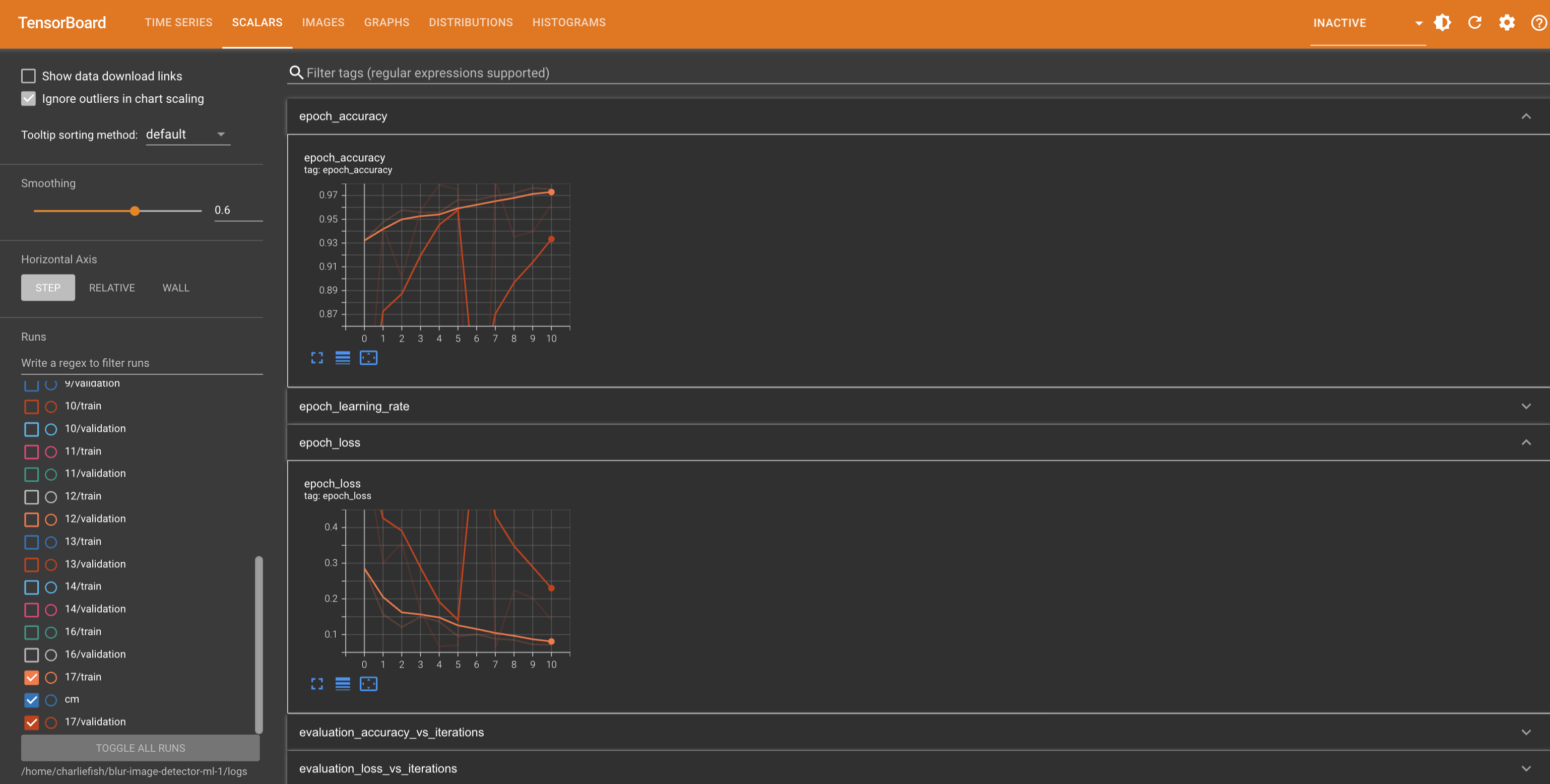

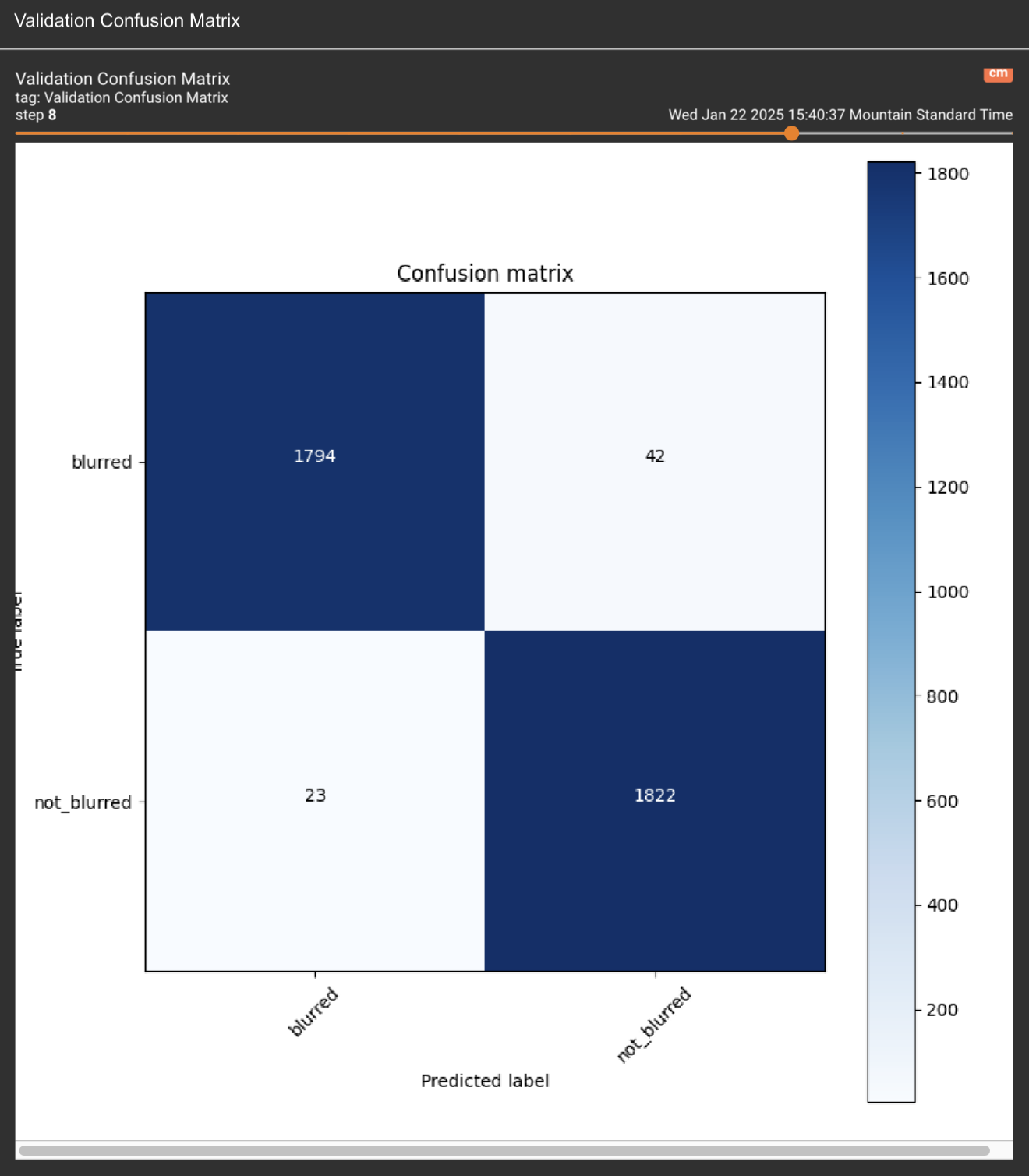

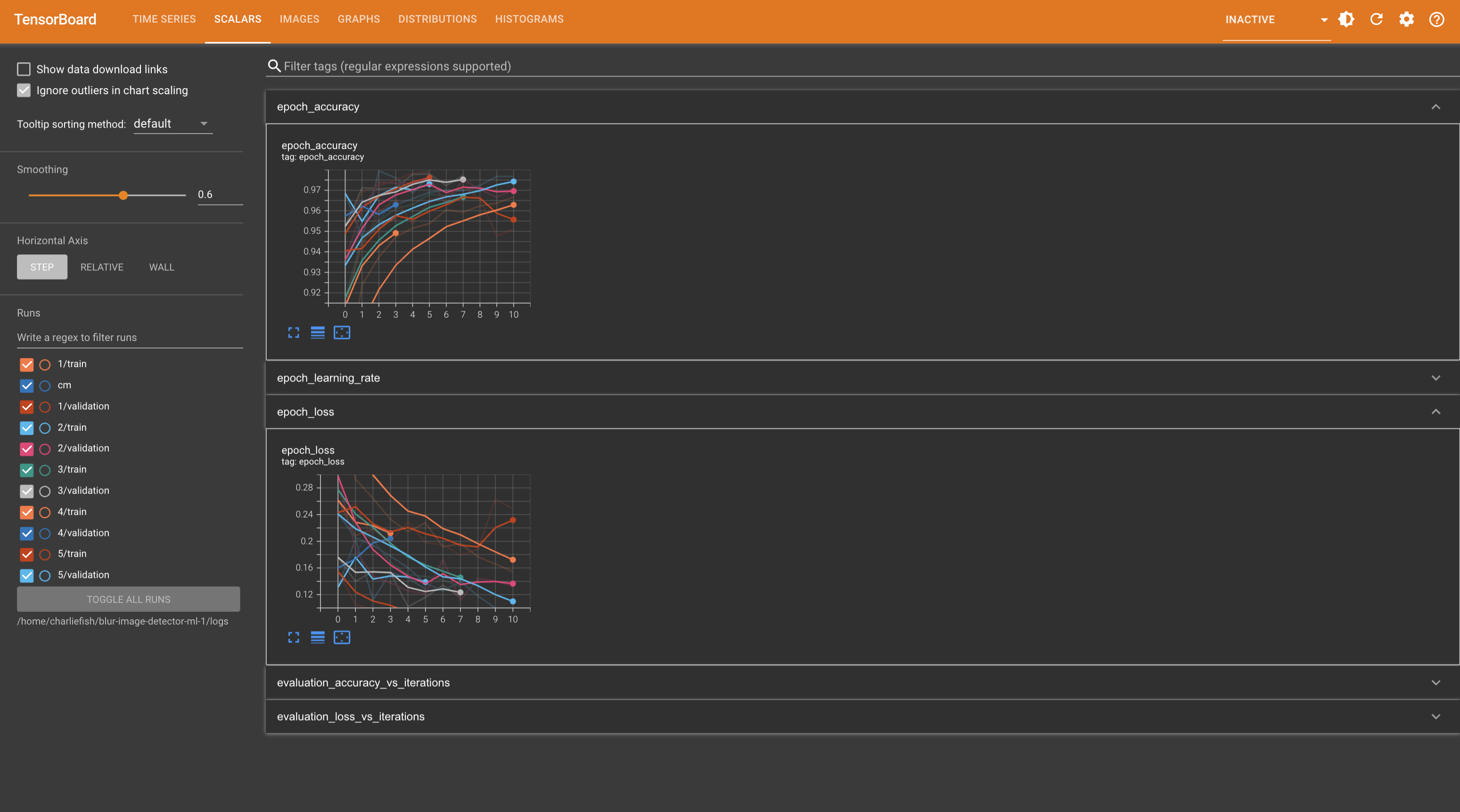

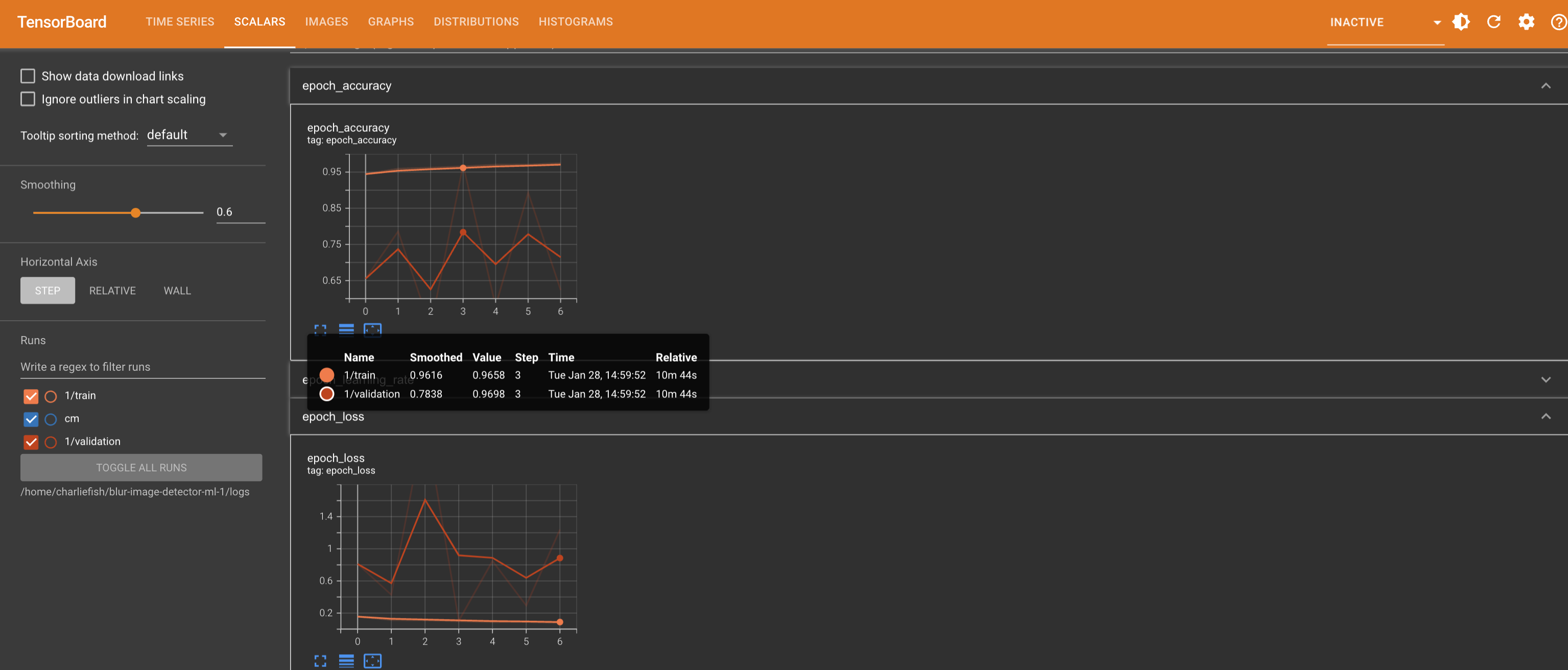

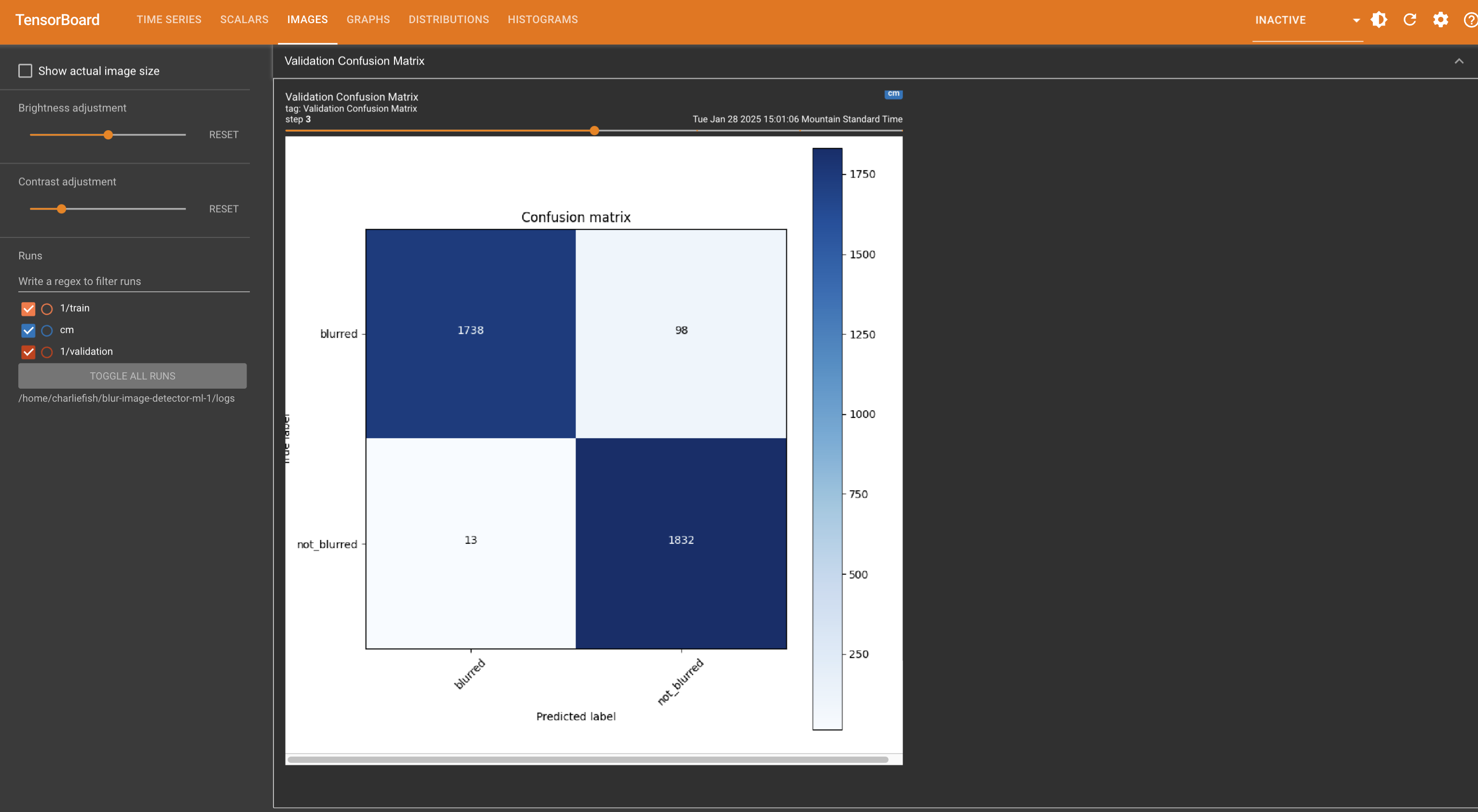

I feel like 98+% is pretty good. I’m not an expert, but I know overfitting is something to watch out for.

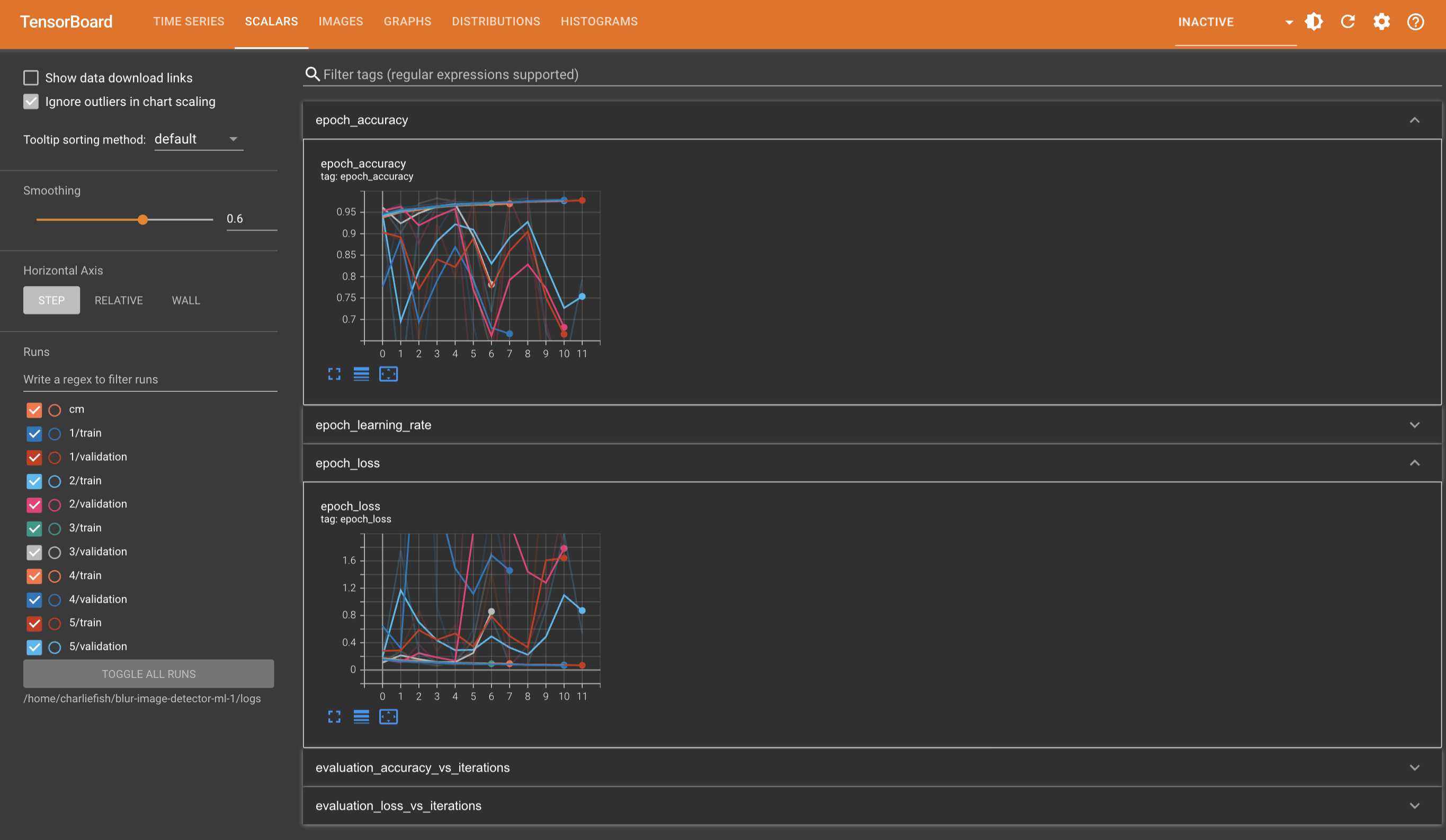

As for the inconsistency, man, I’m not sure. You’d think it’d be 1 to 1 with the same dataset, wouldn’t you? Perhaps the order of the input files isn’t the same between runs?
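If file order does turn out to be the culprit, one way to rule it out is to sort the file list and shuffle it with a fixed seed before training. This is just a rough sketch, not anything from your setup: `deterministic_order` and the `seed` parameter are names I made up, and it only pins down input order, not other sources of randomness like weight initialization.

```python
import random


def deterministic_order(paths, seed=0):
    """Return the given paths in an order that is identical on every run.

    Hypothetical helper: sorting removes any dependence on the order the
    filesystem happens to return, and a seeded shuffle still mixes the data.
    """
    ordered = sorted(paths)
    # A private Random instance keeps the global random state untouched.
    random.Random(seed).shuffle(ordered)
    return ordered


if __name__ == "__main__":
    # The same files handed over in two different orders...
    run_a = deterministic_order(["img_b.png", "img_a.png", "img_c.png"])
    run_b = deterministic_order(["img_c.png", "img_b.png", "img_a.png"])
    # ...come out in the same order both times.
    print(run_a == run_b)
```

You'd feed it whatever listing you already use (for example the result of `os.listdir`, which makes no promise about order) and train on the returned list.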

I know that when training you get diminishing returns on optimization, and that there are MANY factors affecting performance and accuracy that can be really hard to guess.

I did some ML optimization tutorials a while back, and you can iterate through algorithms and net sizes and graph the results to empirically find the optimal combinations for your dataset. Then, when you think you have it locked in, you run your full training set with your dialed-in parameters.
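The sweep itself can be pretty simple. Here's a minimal sketch of the idea, assuming you have some function that trains one configuration and returns a score. `sweep`, `train_and_score`, and `toy_score` are all hypothetical names, and `toy_score` is a made-up stand-in so the example runs on its own; its numbers are not real results.

```python
from itertools import product


def sweep(train_and_score, algorithms, net_sizes):
    """Try every (algorithm, net size) pair and rank them by score.

    train_and_score is a hypothetical callable you supply: it should train
    on a small subset and return a validation score (higher is better).
    """
    results = []
    for algorithm, net_size in product(algorithms, net_sizes):
        score = train_and_score(algorithm, net_size)
        results.append((algorithm, net_size, score))
    # Best configuration first.
    results.sort(key=lambda row: row[2], reverse=True)
    return results


def toy_score(algorithm, net_size):
    """Made-up scoring function, only here so the sketch is runnable."""
    base = {"sgd": 0.90, "adam": 0.93}[algorithm]
    return base + 0.01 * min(net_size, 64) / 64


if __name__ == "__main__":
    for algorithm, net_size, score in sweep(toy_score, ["sgd", "adam"], [16, 32, 64]):
        print(f"{algorithm:>5} size={net_size:<3} score={score:.4f}")
```

Swap `toy_score` for your real training call, then graph the returned rows (score against net size, one line per algorithm) to see where the returns flatten out before committing to the full training run.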

Keep us updated if you figure something out!