this post was submitted on 23 Feb 2025

318 points (96.8% liked)

Science Memes



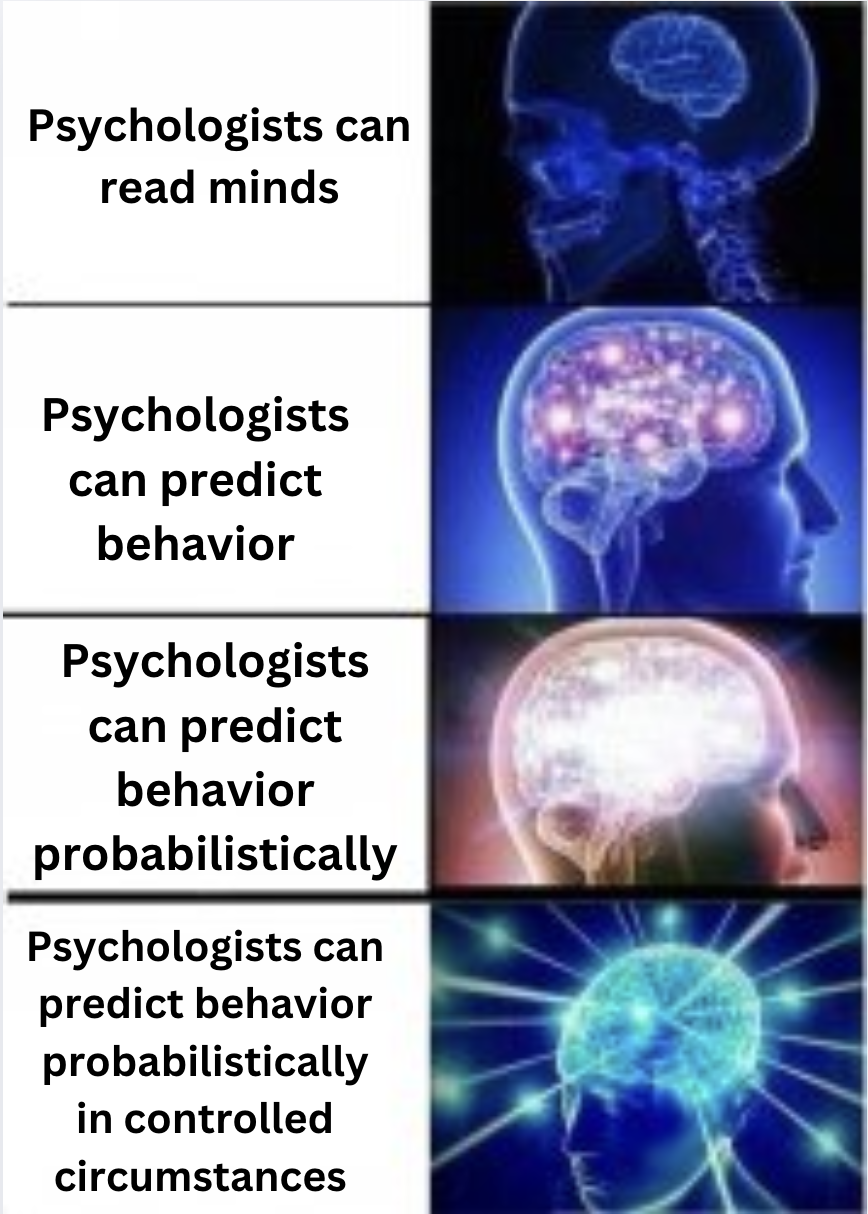

The standard significance threshold (alpha) in most psych research is p < 0.05, which means that you are willing to accept a 1/20 risk of a Type 1 error - that is, if there is no real effect, you are content with a 5% chance of a false positive, a "significant" result that was entirely due to random chance.
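To make the 1/20 figure concrete, here is a minimal simulation sketch. It is an illustration, not anything from an actual study: the function name, the group size of 30, the number of simulated studies, and the use of a simple two-sided z-test with known variance are all assumptions. Every simulated "study" compares two groups drawn from the same distribution, so any significant result is a false positive by construction.

```python
import random
from statistics import NormalDist, mean


def false_positive_rate(n_studies=2000, n_per_group=30, alpha=0.05, seed=0):
    """Fraction of null studies (no real effect) that come out 'significant'.

    Hypothetical setup: both groups are drawn from N(0, 1), and a two-sided
    z-test with known standard deviation 1 is used for simplicity.
    """
    rng = random.Random(seed)
    std_normal = NormalDist()  # standard normal, used for the p-value
    hits = 0
    for _ in range(n_studies):
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        # Standard error of the difference in means is sqrt(1/n + 1/n).
        z = (mean(a) - mean(b)) / (2 / n_per_group) ** 0.5
        p = 2 * (1 - std_normal.cdf(abs(z)))  # two-sided p-value
        if p < alpha:
            hits += 1
    return hits / n_studies


print(f"false positive rate: {false_positive_rate():.3f}")
```

With these assumptions the printed rate should land close to 0.05, give or take sampling noise, even though no effect exists in any of the simulated studies.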

Keep in mind that, most of the time, research that doesn’t give results doesn’t get published. Keep in mind that you have to publish regularly to keep your job. (And that if your results make certain people happy, they’ll give you and your university more money.) Keep in mind that it is super fucking easy to say “hey, optional extra credit - participate in my survey” to your 300-student Intro Psych class (usually you just have to provide an alternative assignment for ethical reasons).

That wouldn't be a problem at all if we had better science journalism. Every psychologist knows that "a study showed" means nothing. Consensus over several repeated studies is how we approximate the truth.
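A rough back-of-the-envelope sketch of why a single "a study showed" is weak and repeated studies are strong. The function names are made up for illustration, and the arithmetic assumes independent studies of an effect that does not exist, with no p-hacking or selective reporting, which is more generous than reality.

```python
def p_at_least_one_false_positive(n_studies, alpha=0.05):
    """Chance that at least one of n independent null studies is 'significant'."""
    return 1 - (1 - alpha) ** n_studies


def p_all_false_positives(n_replications, alpha=0.05):
    """Chance that every one of n independent null studies is 'significant'."""
    return alpha ** n_replications


# 20 labs test the same nonexistent effect: odds that somebody gets a "hit".
print(round(p_at_least_one_false_positive(20), 2))  # 0.64

# The same nonexistent effect "replicating" in 3 independent studies.
print(p_all_false_positives(3))  # about 0.000125
```

So under these assumptions a lone significant result is cheap to come by, while several independent replications all being flukes is very unlikely.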

The psychological methodology is absolutely fine as long as you know its limitations and how to correctly apply it. In my experience that's not a problem within the field, but since a lot of people think psychology = common sense, and most people think they excel at that, a lot of laypeople overconfidently interpret scientific results, which leads to a ton of errors.

The replication crisis is mainly a problem of our publications (the journals, how impact factors are calculated, how peer review is done) and the economic reality of academia (namely how your livelihood depends on the publications!), not the methodology. The methods would be perfectly usable for valid replication studies - including falsification of bs results that are currently published en masse in pop science magazines.