SafetyCore Placeholder so if it ever tries to reinstall itself it will fail due to signature mismatch.
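For anyone wondering why a placeholder blocks the reinstall: Android only accepts an APK as an update to an installed package when it is signed with the same certificate as the copy already on the device. Here is a minimal sketch of that rule in Python. It is a simplified model, not Android's actual package manager code; the `Apk` class, `can_install` function, and the fingerprint strings are all made up for illustration, and key rotation is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Apk:
    package: str
    cert_fingerprint: str  # hypothetical stand-in for the signing certificate digest


def can_install(installed: dict[str, Apk], incoming: Apk) -> bool:
    """Simplified rule: an update must be signed by the same key as the installed copy."""
    current = installed.get(incoming.package)
    if current is None:
        return True  # nothing installed under this package name yet
    return current.cert_fingerprint == incoming.cert_fingerprint


installed: dict[str, Apk] = {}
# Fingerprint values below are invented placeholders, not real certificates.
placeholder = Apk("com.google.android.safetycore", "placeholder-cert")
official = Apk("com.google.android.safetycore", "google-cert")

# The placeholder goes in first and occupies the package name.
if can_install(installed, placeholder):
    installed[placeholder.package] = placeholder

# The official package now has a different signer, so the update is refused.
official_allowed = can_install(installed, official)
print(official_allowed)
```

The catch is that the placeholder has to stay installed; remove it and the package name is free again.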

I struggle with GitHub sometimes. It says to download the apk but I don't see it in the file list. Anyone care to point me in the right direction?

There's an app called Obtainium that lets you link the main page of GitHub apps and manages the download, installation, and updates of those apps.

Great if you want the latest software directly from the source.

Google says that SafetyCore “provides on-device infrastructure for securely and privately performing classification to help users detect unwanted content. Users control SafetyCore, and SafetyCore only classifies specific content when an app requests it through an optionally enabled feature.”

GrapheneOS — an Android security developer — provides some comfort, that SafetyCore “doesn’t provide client-side scanning used to report things to Google or anyone else. It provides on-device machine learning models usable by applications to classify content as being spam, scams, malware, etc. This allows apps to check content locally without sharing it with a service and mark it with warnings for users.”

But GrapheneOS also points out that “it’s unfortunate that it’s not open source and released as part of the Android Open Source Project and the models also aren’t open let alone open source… We’d have no problem with having local neural network features for users, but they’d have to be open source.” Which gets to transparency again.

The app can be found here: https://play.google.com/store/apps/details?id=com.google.android.safetycore

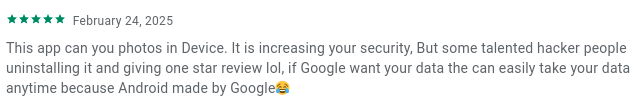

The app reviews are a good read.

Thanks for the link. This is impressive because it really has all the traits of spyware; apparently it installs without asking for permission?

Yup, heard about it a week or two ago. Found it installed on my Samsung phone, it never asked for permissions or gave any info that it was added to my phone.

Thanks. Uninstalled. Not that it matters, they already got what they wanted from me most likely.

For people who have not read the article:

Forbes states that there is no indication that this app can or will "phone home".

Its stated use is to let other apps scan an image they already have access to and find out what kind of thing it is (known as "classification"). For example, an app could check whether a picture you've been sent is a dick pic so it can blur it.

My understanding is that, if this is implemented correctly (a big 'if') this can be completely safe.

Apps requesting classification could be limited to only classifying files that they already have access to. Remember that Android has a concept of "scoped storage" nowadays that lets you restrict folder access. If this is the case, well, it's no less safe than not having SafetyCore at all. It just saves you space, as companies like Signal, WhatsApp etc. no longer need to train and ship their own machine learning models inside their apps; it becomes a common library / API any app can use.

It could, of course, if implemented incorrectly, allow apps to snoop without asking for file access. I don't know enough to say.
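To make the "implemented correctly" case concrete, here is a rough Python sketch of a classifier service that refuses any file the calling app could not already read. This is purely illustrative and says nothing about how SafetyCore actually works; `ClassifierService`, the `grants` table, the app ids, and the stub model are all hypothetical.

```python
from pathlib import PurePosixPath


class AccessDenied(Exception):
    """Raised when an app asks to classify a file outside its own grants."""


class ClassifierService:
    def __init__(self, grants: dict[str, list[str]]):
        # grants maps an app id to the directories it may read.
        # This is a hypothetical stand-in for scoped storage permissions.
        self._grants = {
            app: [PurePosixPath(d) for d in dirs] for app, dirs in grants.items()
        }

    def _can_read(self, app: str, path: str) -> bool:
        target = PurePosixPath(path)
        if ".." in target.parts:
            return False  # refuse traversal tricks instead of trying to resolve them
        return any(target.is_relative_to(root) for root in self._grants.get(app, []))

    def _run_model(self, path: str) -> str:
        # Stub: a real service would run an on-device model here.
        return "unclassified"

    def classify(self, app: str, path: str) -> str:
        # The access check comes first, so the service never reads on an app's
        # behalf anything that the app could not have read by itself.
        if not self._can_read(app, path):
            raise AccessDenied(f"{app} has no access to {path}")
        return self._run_model(path)


service = ClassifierService({"org.example.messenger": ["/sdcard/Messenger"]})
print(service.classify("org.example.messenger", "/sdcard/Messenger/inbox/1.jpg"))

try:
    service.classify("org.example.messenger", "/sdcard/DCIM/holiday.jpg")
except AccessDenied as err:
    print("refused:", err)
```

The "implemented incorrectly" case is simply the same service with the `_can_read` check missing or bypassable, which is why it matters that nobody outside Google can inspect the real thing.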

Besides, you think that Google isn't already scanning for things like CSAM? It's been confirmed to be done on platforms like Google Photos well before SafetyCore was introduced, though I've not seen anything about it being done on devices yet (correct me if I'm wrong).

Forbes states that there is no indication that this app can or will "phone home".

That doesn't mean that it doesn't. If it were open source, we could verify it. As is, it should not be trusted.

Doing the scanning on-device doesn't mean that the findings cannot be reported further. I don't want others going thru my private stuff without asking - not even machine learning.

Per one tech forum this week

Stop spreading misinformation.

To quote the most salient post

The app doesn't provide client-side scanning used to report things to Google or anyone else. It provides on-device machine learning models usable by applications to classify content as being spam, scams, malware, etc. This allows apps to check content locally without sharing it with a service and mark it with warnings for users.

Which is a sorely needed feature to tackle problems like SMS scams

People don't seem to understand the risks presented by normalizing client-side scanning on closed source devices. Think about how image recognition works. It scans image content locally and matches to keywords or tags, describing the person, objects, emotions, and other characteristics. Even the rudimentary open-source model on an immich deployment on a Raspberry Pi can process thousands of images and make all the contents searchable with alarming speed and accuracy.
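To show how little is needed once labels exist, here is a small Python sketch of the "make everything searchable" step: an inverted index from tags to images. The labels are hard-coded sample data standing in for whatever a local model would output; this is not Immich's code, and the file names and tags are invented.

```python
from collections import defaultdict


def build_tag_index(labels_by_image: dict[str, list[str]]) -> dict[str, set[str]]:
    """Map each tag to the set of images that carry it."""
    index: dict[str, set[str]] = defaultdict(set)
    for image, labels in labels_by_image.items():
        for label in labels:
            index[label.lower()].add(image)
    return index


def search(index: dict[str, set[str]], *terms: str) -> set[str]:
    """Return the images that match every search term."""
    matches = [index.get(term.lower(), set()) for term in terms]
    return set.intersection(*matches) if matches else set()


# Invented sample output of a hypothetical on-device image model.
labels = {
    "IMG_001.jpg": ["beach", "dog"],
    "IMG_002.jpg": ["dog", "sofa"],
    "IMG_003.jpg": ["passport", "document"],
}

index = build_tag_index(labels)
print(sorted(search(index, "dog")))
print(sorted(search(index, "dog", "beach")))
print(sorted(search(index, "passport")))
```

The expensive part is the model that produces the labels. Once that has run, querying a whole photo library for a given kind of content is a dictionary lookup.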

So once similar image analysis is done on a phone locally, and pre-encryption, it is trivial for Apple or Google to use that for whatever purposes their use terms allow. Forget the iCloud encryption backdoor. The big tech players can already scan content on your device pre-encryption.

And just because someone does a traffic analysis of the process itself (safety core or mediaanalysisd or whatever) and shows it doesn't directly phone home, doesn't mean it is safe. The entire OS is closed source, and it needs only to backchannel small amounts of data in order to fuck you over.

Remember the original justification for client-side scanning from Apple was "detecting CSAM". Well, they backed away from that line of thinking, but they kept all the client-side scanning in iOS and macOS. It would be trivial for them to flag many other types of content and furnish that data to governments or third parties.

I didn't have it in my app drawer but once I went to this link, it showed as installed. I un-installed it ASAP.

https://play.google.com/store/apps/details?id=com.google.android.safetycore&hl=en-US

I also reported it as hostile and inappropriate. I am sure Google will do fuck all with that report but I enjoy being petty sometimes

I've just given it the boot from my phone.

It doesn't appear to have been doing anything yet, but whatever.

I switched over to GrapheneOS a couple months ago and couldn't be happier. If you have a Pixel the switch is really easy. The biggest obstacle was exporting my contacts from my google account.

Google says that SafetyCore “provides on-device infrastructure for securely and privately performing classification to help users detect unwanted content”

Cheers Google but I'm a capable adult, and able to do this myself.

My question is, does it install as a stand alone app? Or is it part of a Google Play update chunk that you only find out after Play has updated? My system does not auto update (by design) so I'd like to know where it sources from.

Thanks for this, just uninstalled it. Google are arseholes.

Thanks for bringing this up, first I've heard of it. Not present on my GrapheneOS Pixel, present on stock.

I suppose I should encourage Pixel owners to switch from stock to GrapheneOS. I know which device I'd rather spend time using: the GrapheneOS one, of course.

I just un-installed it

Anyone know what Android System Intelligence does? Should that be un-installed as well?

Even with the latest update from Samsung, I am not seeing this app. My OnePlus did get it with the February update and I had to remove it.

Is there any indication that Apple is truly more secure and privacy conscious than Android? I'm kinda tired of Google and their oversteps.

For true privacy you'll want something like GrapheneOS on a Pixel, with no Google apps or anything. Some other ROM with no gApps as a second choice.

Other than that, Apple SEEMS to be mildly better. I'll give you an example: Apple pulls encryption feature from UK over government spying demands

While it's a bad thing that they pull the encryption feature, it's a good sign - they either aren't willing or able to add a backdoor for the UK security services. Then there was this case. If the article is to be believed, they started working on security as of iOS 8 so they could no longer comply with government requests. Today we're on iOS 18.

Apple claims their advertising ID is anonymized so third party apps don't know who you are. That said, they still have the advertising ID service so Apple themselves do know a whoooooole lot about you - but this is the same with Google.

Then regarding photo scanning - Apple received a LOT of backlash for their proposed photo scanning feature. But it was going to be only on-device scans on photos that were going to be uploaded to iCloud (so disabling iCloud would disable it too) and it was only going to report you if you had a LOT of child pornography on your phone - otherwise it was, supposedly, going to do absolutely nothing about the photos. It wasn't even supposed to be a categorization model, just a "Does this match known CSAM?" filter. Google and Microsoft had already implemented something similar, except they didn't scan your shit on-device.
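The difference between a "match against a known list" filter and a categorization model is easier to see in code. Below is a minimal Python sketch of threshold-based matching: count how many uploads hash to an entry in a known list, and flag only once the count reaches a threshold. This is not Apple's design. Their proposal used a perceptual hash and cryptographic thresholding, whereas this sketch uses plain SHA-256 exact matching as a stand-in, and the function names, sample bytes, and threshold value are all assumptions for illustration.

```python
import hashlib


def digest(data: bytes) -> str:
    # Stand-in only: SHA-256 matches exact bytes, unlike a perceptual hash.
    return hashlib.sha256(data).hexdigest()


def count_matches(uploads: list[bytes], known_hashes: set[str]) -> int:
    """Count uploads whose hash appears in the known list."""
    return sum(1 for blob in uploads if digest(blob) in known_hashes)


def should_flag(uploads: list[bytes], known_hashes: set[str], threshold: int) -> bool:
    """Flag the account only once the number of matches reaches the threshold."""
    return count_matches(uploads, known_hashes) >= threshold


# Invented sample data; the threshold of 2 is arbitrary.
known = {digest(b"listed-file-1"), digest(b"listed-file-2")}

one_match = [b"listed-file-1", b"holiday-photo"]
two_matches = [b"listed-file-1", b"listed-file-2", b"holiday-photo"]

print(should_flag(one_match, known, threshold=2))
print(should_flag(two_matches, known, threshold=2))
```

Note that nothing in this kind of filter describes what a photo contains; it can only say "this file is on the list" or "it isn't". Which is also why the contents of the list, and who controls it, was the crux of the backlash.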

At the end of the day, Apple might be a bit more private, but it's a wash. It's not transparent and neither is Google. I like using their devices. Sometimes I miss the freedom of custom ROMs, but my damn banking apps stopped working on Lineage and I couldn't be arsed to start using the banks' mobile websites again like I'd done in the past. So I moved to iOS, as OnePlus had completely botched their Android experience while I'd been using Lineage, and I was kinda pissed at what I had considered one of the last remaining decent Android manufacturers (Sonys are overpriced and I will never own a Samsung, I hate them, and I didn't like my Huawei or Xiaomi much either).

So if you want to run custom ROMs, get a Pixel or something. If not, Apple is as good a choice as Android. A couple of years ago it was the better choice even, as you'd get longer software support, but now the others have started catching up due to all the consumer outrage.

The short answer is: Apple collects much of the same data as any other modern tech conglomerate, but their "walled garden" strategy means that for the most part only THEY have access to that info.

It's technically lower risk since fewer parties have access to the data, but philosophically just about as bad, because they aren't doing this out of any real love for privacy (despite what their marketing department might claim).